Difference between revisions of "The Data Processing Inequality"

| (60 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| + | == Entropy Chain Rule == | ||

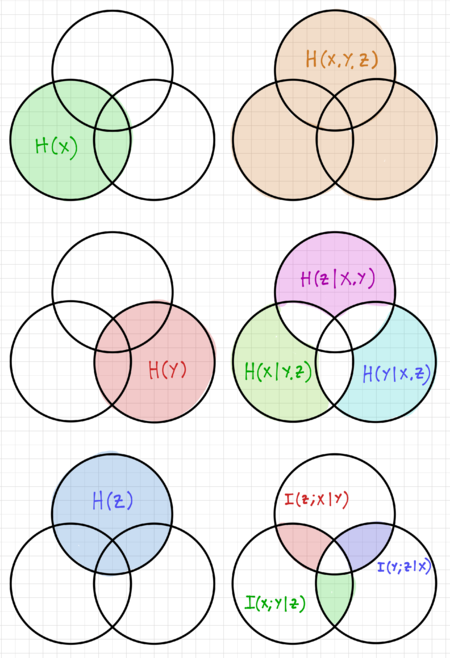

| + | [[File:Entropy xy venn.png|thumb|400px|Figure 1: Entropy visualization for two random variables using Venn diagrams.]] | ||

| + | As we increase the number of random variables we are dealing with, it is important to understand how this increase affects entropy. We have previously shown that for two random variables <math>X</math> and <math>Y</math>: | ||

| + | |||

| + | {{NumBlk|::|<math>\begin{align} | ||

| + | H\left(X, Y\right) & = H\left(X\right) + H\left(Y \mid X\right) \\ | ||

| + | & = H\left(Y\right) + H\left(X \mid Y\right) \\ | ||

| + | & = H\left(Y, X\right) | ||

| + | \end{align}</math>|{{EquationRef|1}}}} | ||

| + | |||

| + | We can use Venn diagrams to visualize these relationships, as seen in Fig. 1. For three random variables <math>X</math>, <math>Y</math>, and <math>Z</math>: | ||

| + | |||

| + | {{NumBlk|::|<math>\begin{align} | ||

| + | H\left(X, Y, Z\right) & = H\left(X\right) + H\left(Y, Z\mid X\right) \\ | ||

| + | & = H\left(X\right) + H\left(Y \mid X\right) + H\left(Z \mid Y, X\right)\\ | ||

| + | \end{align}</math>|{{EquationRef|2}}}} | ||

| + | |||

| + | In general: | ||

| + | |||

| + | {{NumBlk|::|<math>H\left(X_1, X_2, \ldots, X_n\right) = \sum_{i=1}^n H\left(X_i \mid X_{i-1}, X_{i-2},\ldots, X_1\right)</math>|{{EquationRef|3}}}} | ||

| + | |||

| + | == Conditional Mutual Information == | ||

| + | Conditional mutual information is defined as the expected value of the mutual information of two random variables given the value of a third random variable, and for three random variables <math>X</math>, <math>Y</math>, and <math>Z</math>, it is defined as: | ||

| + | |||

| + | {{NumBlk|::|<math>\begin{align} | ||

| + | I\left(X;Y\mid Z\right) & = \sum_{z\in Z} P\left(z\right) \sum_{y\in Y} \sum_{x\in X} P\left(x,y\mid z\right)\cdot \log_2\frac{P\left(x,y\mid z\right)}{P\left(x\mid z\right)\cdot P\left(y\mid z\right)} \\ | ||

| + | & = \sum_{z\in Z} \sum_{y\in Y} \sum_{x\in X} P\left(x, y, z\right)\cdot\log_2 \frac{P\left(x, y, z\right)\cdot P\left(z\right)}{P\left(x, z\right)\cdot P\left(y, z\right)} \\ | ||

| + | \end{align}</math>|{{EquationRef|4}}}} | ||

| + | |||

| + | We can rewrite the definition of conditional mutual information as: | ||

| + | |||

| + | {{NumBlk|::|<math>\begin{align} | ||

| + | I\left(X;Y\mid Z\right) & = \sum_{z\in Z} \sum_{y\in Y} \sum_{x\in X} P\left(x, y, z\right)\cdot\log_2 \frac{P\left(x, y, z\right)\cdot P\left(z\right)}{P\left(x, z\right)\cdot P\left(y, z\right)} \\ | ||

| + | & = \sum_{z\in Z} \sum_{y\in Y} \sum_{x\in X} P\left(x, y, z\right)\cdot \left(\log_2\frac{P\left(z\right)}{P\left(x, z\right)}-\log_2\frac{P\left(y, z\right)}{P\left(x, y, z\right)}\right) \\ | ||

| + | & = \sum_{z\in Z} \sum_{y\in Y} \sum_{x\in X} P\left(x, y, z\right)\cdot \log_2\frac{P\left(z\right)}{P\left(x, z\right)} - \sum_{z\in Z} \sum_{y\in Y} \sum_{x\in X} P\left(x, y, z\right)\cdot \log_2\frac{P\left(y, z\right)}{P\left(x, y, z\right)} \\ | ||

| + | & = \sum_{y\in Y} P\left(y\right) \sum_{z\in Z} \sum_{x\in X} P\left(x, z\mid y\right)\cdot \log_2\frac{P\left(z\right)}{P\left(x, z\right)} - \sum_{z\in Z} \sum_{y\in Y} \sum_{x\in X} P\left(x, y, z\right)\cdot \log_2\frac{P\left(y, z\right)}{P\left(x, y, z\right)} \\ | ||

| + | & = \sum_{z\in Z} \sum_{x\in X} P\left(x, z\right)\cdot \log_2\frac{P\left(z\right)}{P\left(x, z\right)} - \sum_{z\in Z} \sum_{y\in Y} \sum_{x\in X} P\left(x, y, z\right)\cdot \log_2\frac{P\left(y, z\right)}{P\left(x, y, z\right)} \\ | ||

| + | & = H\left(X\mid Z\right) - H\left(X\mid Y, Z\right)\\ | ||

| + | \end{align}</math>|{{EquationRef|5}}}} | ||

| + | |||

| + | [[File:Entropy xyz venn.png|thumb|450px|Figure 2: Entropy visualization for three random variables using Venn diagrams.]] | ||

| + | We can visualize this relationship using the Venn diagrams in Fig. 2. Compare this to our expression for the mutual information of two random variables <math>X</math> and <math>Y</math>: | ||

| + | |||

| + | {{NumBlk|::|<math>I\left(X;Y\right) = H\left(X\right) - H\left(X\mid Y\right)</math>|{{EquationRef|6}}}} | ||

| + | |||

| + | === Chain Rule for Mutual Information === | ||

| + | For random variables <math>X</math> and <math>Z</math>: | ||

| + | |||

| + | {{NumBlk|::|<math>I\left(X;Z\right) = H\left(X\right) - H\left(X\mid Z\right)</math>|{{EquationRef|7}}}} | ||

| + | |||

| + | And for random variables <math>X</math>, <math>Y</math> and <math>Z</math>: | ||

| + | |||

| + | {{NumBlk|::|<math>I\left(X;Y,Z\right) = H\left(X\right) - H\left(X\mid Y,Z\right)</math>|{{EquationRef|8}}}} | ||

| + | |||

| + | We can then express the conditional mutual information as: | ||

| + | |||

| + | {{NumBlk|::|<math>\begin{align} | ||

| + | I\left(X;Y\mid Z\right) & = H\left(X\mid Z\right) - H\left(X\mid Y,Z\right) \\ | ||

| + | & = H\left(X\right) - I\left(X;Z\right) + I\left(X;Y,Z\right) - H\left(X\right) \\ | ||

| + | & = I\left(X;Y,Z\right) - I\left(X;Z\right) \\ | ||

| + | \end{align}</math>|{{EquationRef|9}}}} | ||

| + | |||

| + | Rearranging, we then obtain the chain rule for mutual information: | ||

| + | |||

| + | {{NumBlk|::|<math>I\left(X;Y,Z\right) = I\left(X;Y\mid Z\right) + I\left(X;Z\right)</math>|{{EquationRef|10}}}} | ||

| + | |||

| + | Thus, we can extend this for additional random variables: | ||

| + | |||

| + | {{NumBlk|::|<math>\begin{align} | ||

| + | I\left(Y;X_1,X_2,X_3\right) & = I\left(Y;X_3\mid X_2,X_1\right) + I\left(Y;X_2,X_1\right) \\ | ||

| + | & = I\left(Y;X_3\mid X_2,X_1\right) + I\left(Y;X_2\mid X_1\right) + I\left(Y;X_1\right)\\ | ||

| + | \end{align}</math>|{{EquationRef|11}}}} | ||

| + | |||

| + | In general: | ||

| + | |||

| + | {{NumBlk|::|<math>I\left(Y;X_1, X_2,\ldots ,X_n\right) = \sum_{i=1}^n I\left(Y;X_i\mid X_{i-1}, X_{i-2},\dots ,X_1\right)</math>|{{EquationRef|12}}}} | ||

| + | |||

== Markovity == | == Markovity == | ||

A [https://en.wikipedia.org/wiki/Markov_chain Markov Chain] is a random process that describes a sequence of possible events where the probability of each event depends only on the outcome of the previous event. Thus, we say that <math>X, Y, Z</math> is a Markov chain in this order, denoted as: | A [https://en.wikipedia.org/wiki/Markov_chain Markov Chain] is a random process that describes a sequence of possible events where the probability of each event depends only on the outcome of the previous event. Thus, we say that <math>X, Y, Z</math> is a Markov chain in this order, denoted as: | ||

| − | {{NumBlk|::|<math>X \rightarrow Y \rightarrow Z</math>|{{EquationRef| | + | {{NumBlk|::|<math>X \rightarrow Y \rightarrow Z</math>|{{EquationRef|13}}}} |

If we can write: | If we can write: | ||

| − | {{NumBlk|::|<math>P\left(X=x, Y=y, Z=z\right) = P\left(Z=z\mid Y=y\right)\cdot P\left(Y=y\mid X=x\right) \cdot P\left(X=x\right)</math>|{{EquationRef| | + | {{NumBlk|::|<math>P\left(X=x, Y=y, Z=z\right) = P\left(Z=z\mid Y=y\right)\cdot P\left(Y=y\mid X=x\right) \cdot P\left(X=x\right)</math>|{{EquationRef|14}}}} |

Or in a more compact form: | Or in a more compact form: | ||

| − | {{NumBlk|::|<math>P\left(x, y, z\right) = P\left(z\mid y\right)\cdot P\left(y\mid x\right) \cdot P\left(x\right)</math>|{{EquationRef| | + | {{NumBlk|::|<math>P\left(x, y, z\right) = P\left(z\mid y\right)\cdot P\left(y\mid x\right) \cdot P\left(x\right)</math>|{{EquationRef|15}}}} |

We can use Markov chains to model how a signal is corrupted when passed through noisy channels. For example, if <math>X</math> is a binary signal, it can change with a certain probability, <math>p</math>, to <math>Y</math>, and it can again be corrupted to produce <math>Z</math>. | We can use Markov chains to model how a signal is corrupted when passed through noisy channels. For example, if <math>X</math> is a binary signal, it can change with a certain probability, <math>p</math>, to <math>Y</math>, and it can again be corrupted to produce <math>Z</math>. | ||

| Line 16: | Line 93: | ||

Consider the joint probability <math>P\left(x, z\mid y\right)</math>. We can express this as: | Consider the joint probability <math>P\left(x, z\mid y\right)</math>. We can express this as: | ||

| − | {{NumBlk|::|<math>P\left(x, z\mid y\right) = \frac{P\left(x, y, z\right)}{P\left(y\right)}</math>|{{EquationRef| | + | {{NumBlk|::|<math>P\left(x, z\mid y\right) = \frac{P\left(x, y, z\right)}{P\left(y\right)}</math>|{{EquationRef|16}}}} |

And if <math>X \rightarrow Y \rightarrow Z</math>, we get: | And if <math>X \rightarrow Y \rightarrow Z</math>, we get: | ||

| − | {{NumBlk|::|<math>P\left(x, z\mid y\right) = \frac{P\left(z\mid y\right)\cdot P\left(y\mid x\right) \cdot P\left(x\right)}{P\left(y\right)}</math>|{{EquationRef| | + | {{NumBlk|::|<math>P\left(x, z\mid y\right) = \frac{P\left(z\mid y\right)\cdot P\left(y\mid x\right) \cdot P\left(x\right)}{P\left(y\right)}</math>|{{EquationRef|17}}}} |

Since <math>P\left(y,x\right)=P\left(y\mid x\right)\cdot P\left(x\right) = P\left(x\mid y\right)\cdot P\left(y\right)</math>, we can write: | Since <math>P\left(y,x\right)=P\left(y\mid x\right)\cdot P\left(x\right) = P\left(x\mid y\right)\cdot P\left(y\right)</math>, we can write: | ||

| − | {{NumBlk|::|<math>P\left(x, z\mid y\right) = \frac{P\left(z\mid y\right)\cdot P\left(y, x\right)}{P\left(y\right)}=P\left(z\mid y\right)\cdot P\left(x\mid y\right)</math>|{{EquationRef| | + | {{NumBlk|::|<math>P\left(x, z\mid y\right) = \frac{P\left(z\mid y\right)\cdot P\left(y, x\right)}{P\left(y\right)}=P\left(z\mid y\right)\cdot P\left(x\mid y\right)</math>|{{EquationRef|18}}}} |

Thus, we can say that <math>X</math> and <math>Z</math> are conditionally independent given <math>Y</math>. If we think of <math>X</math> as some past event, and <math>Z</math> as some future event, then the past and future events are independent if we know the present event <math>Y</math>. Note that this property is good definition of, as well as a useful tool for checking Markovity. | Thus, we can say that <math>X</math> and <math>Z</math> are conditionally independent given <math>Y</math>. If we think of <math>X</math> as some past event, and <math>Z</math> as some future event, then the past and future events are independent if we know the present event <math>Y</math>. Note that this property is good definition of, as well as a useful tool for checking Markovity. | ||

| Line 36: | Line 113: | ||

& = P\left(z, y\right)\cdot P\left(x\mid y\right)\\ | & = P\left(z, y\right)\cdot P\left(x\mid y\right)\\ | ||

& = P\left(x\mid y\right) \cdot P\left(y\mid z\right) \cdot P\left(z\right)\\ | & = P\left(x\mid y\right) \cdot P\left(y\mid z\right) \cdot P\left(z\right)\\ | ||

| − | \end{align}</math>|{{EquationRef| | + | \end{align}</math>|{{EquationRef|19}}}} |

Therefore, if <math>X \rightarrow Y \rightarrow Z</math>, then it follows that <math>Z \rightarrow Y \rightarrow X</math>. | Therefore, if <math>X \rightarrow Y \rightarrow Z</math>, then it follows that <math>Z \rightarrow Y \rightarrow X</math>. | ||

| − | == | + | == The Data Processing Inequality == |

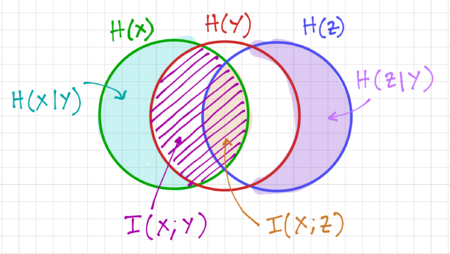

| − | + | [[File:Mutual Info Markov XYZ.png|thumb|450px|Figure 3: Venn diagram visualization of mutual information in a Markov chain.]] | |

| + | Consider three random variables, <math>X</math>, <math>Y</math>, and <math>Z</math>. The mutual information <math>I\left(X; Y,Z\right)</math> can be expressed as: | ||

{{NumBlk|::|<math>\begin{align} | {{NumBlk|::|<math>\begin{align} | ||

| − | + | I\left(X;Y,Z\right) & = I\left(X;Y\mid Z\right) + I\left(X;Z\right) \\ | |

| − | & = | + | & = I\left(X;Z\mid Y\right) + I\left(X;Y\right) \\ |

| − | + | \end{align}</math>|{{EquationRef|20}}}} | |

| − | \end{align}</math>|{{EquationRef| | ||

| − | + | If <math>X \rightarrow Y \rightarrow Z</math>, i.e. <math>X</math>, <math>Y</math>, and <math>Z</math> form a Markov chain, then <math>X</math> is conditionally independent of <math>Z</math> given <math>Y</math>, resulting in <math>I\left(X;Z\mid Y\right) = 0</math>. Thus, | |

{{NumBlk|::|<math>\begin{align} | {{NumBlk|::|<math>\begin{align} | ||

| − | + | I\left(X;Y\mid Z\right) + I\left(X;Z\right) & = I\left(X;Z\mid Y\right) + I\left(X;Y\right)\\ | |

| − | + | I\left(X;Y\mid Z\right) + I\left(X;Z\right) & = I\left(X;Y\right)\\ | |

| − | \end{ | + | \end{align}</math>|{{EquationRef|21}}}} |

| + | |||

| + | And since <math>I\left(X;Y\mid Z\right) \ge 0</math>, we get the expression known as the ''Data Processing Inequality'': | ||

| + | |||

| + | {{NumBlk|::|<math>I\left(X;Z\right) \le I\left(X;Y\right) </math>|{{EquationRef|21}}}} | ||

| + | |||

| + | And since <math>Z \rightarrow Y \rightarrow X</math> is also a Markov chain, then: | ||

| + | |||

| + | {{NumBlk|::|<math>I\left(Z;X\right) \le I\left(Z;Y\right) </math>|{{EquationRef|22}}}} | ||

| + | |||

| + | We can visualize this relation using the Venn diagrams in Fig. 3. Note that the equality occurs when <math>I\left(X;Y\mid Z\right) = 0</math>. It should also be evident that <math>X\mid Y</math> is independent of <math>Z\mid Y</math> given <math>Y</math>, and that <math>I\left(X;Z\mid Y\right) = 0</math>. | ||

| + | |||

| + | Essentially, the data processing inequality implies that no amount of processing or clever manipulation of data can improve inference. Stated in another way, no clever transformation of the received code <math>Y</math> can give more information about the sent code <math>X</math>. This implies that: | ||

| + | * We will loose information when we process information (downside). | ||

| + | * In some cases, the equality still holds even if we discard something (upside). This motivates our exploration of the concept of sufficient statistics. | ||

| + | |||

| + | === Sufficient Statistics === | ||

| + | Given a family of distributions <math>\{f_\theta\left(x\right)\}</math> indexed by a parameter <math>\theta</math>. If <math>X</math> is a sample from <math>f_\theta</math>, and <math>T\left(X\right)</math> is any statistic, then we get the Markov chain: | ||

| + | |||

| + | {{NumBlk|::|<math>\theta \rightarrow X \rightarrow T\left(X\right)</math>|{{EquationRef|23}}}} | ||

| + | |||

| + | From the data processing inequality, we get: | ||

| + | |||

| + | {{NumBlk|::|<math>I\left(\theta; T\left(X\right)\right) \leq I\left(\theta; X\right)</math>|{{EquationRef|24}}}} | ||

| + | |||

| + | We say that a statistic is sufficient for <math>\theta</math> if it has all the information contained in <math>X</math> about <math>\theta</math>. Mathematically, this means: | ||

| + | |||

| + | {{NumBlk|::|<math>I\left(\theta; T\left(X\right)\right) = I\left(\theta; X\right)</math>|{{EquationRef|25}}}} | ||

| + | |||

| + | Or equivalently: | ||

| + | |||

| + | {{NumBlk|::|<math>\theta \rightarrow T\left(X\right) \rightarrow X</math>|{{EquationRef|26}}}} | ||

| + | |||

| + | That is, once we know <math>T\left(X\right)</math>, the remaining randomness in <math>X</math> does not depend on <math>\theta</math>. | ||

| + | |||

| + | == Fano's Inequality == | ||

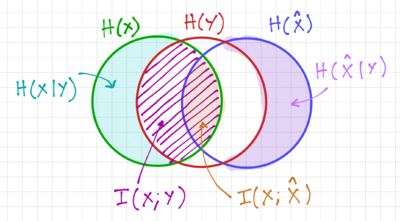

| + | Consider a communication system, where we transmit <math>X</math>, and receive the corrupted version <math>Y</math>. If we try to infer <math>X</math> from <math>Y</math>, there is always a possibility that we will make a mistake. If <math>\hat{X}</math> is our estimate of <math>X</math>, then <math>X\rightarrow Y\rightarrow \hat{X}</math> is a Markov chain. We then define the probability of error as: | ||

| + | |||

| + | {{NumBlk|::|<math>P_e = P\left(\hat{X} \neq X\right)</math>|{{EquationRef|27}}}} | ||

| + | |||

| + | Let us define the error random variable, <math>E</math>, as: | ||

| + | |||

| + | {{NumBlk|::|<math>E= | ||

| + | \begin{cases} | ||

| + | 1, & \mathrm{if}\,\hat{X} \neq X\\ | ||

| + | 0, & \mathrm{if}\,\hat{X} = X\\ | ||

| + | \end{cases}</math>|{{EquationRef|28}}}} | ||

| − | + | We then get <math>H\left(E\right)=H\left(P_e\right)</math>. Using the entropy chain rule: | |

| − | {{NumBlk|::|<math>H\left( | + | {{NumBlk|::|<math>\begin{align} |

| + | H\left(X, Y\right) | ||

| + | & = H\left(X\right) + H\left(Y \mid X\right) \\ | ||

| + | & = H\left(Y\right) + H\left(X \mid Y\right) \\ | ||

| + | \end{align}</math>|{{EquationRef|29}}}} | ||

| − | + | Then the conditional entropy <math>H\left(E, X\mid \hat{X}\right)</math> is: | |

| − | |||

{{NumBlk|::|<math>\begin{align} | {{NumBlk|::|<math>\begin{align} | ||

| − | + | H\left(E, X\mid \hat{X}\right) | |

| − | + | & = H\left(E\mid \hat{X}\right) + H\left(X \mid E, \hat{X}\right) \\ | |

| − | + | & = H\left(X\mid \hat{X}\right) + H\left(E \mid X, \hat{X}\right) \\ | |

| + | \end{align}</math>|{{EquationRef|30}}}} | ||

| + | |||

| + | Let us look at these terms one by one. If we know <math>X</math> and <math>\hat{X}</math>, then there is no uncertainty in the value of <math>E</math>, thus: | ||

| + | |||

| + | {{NumBlk|::|<math>H\left(E \mid X, \hat{X}\right) = 0</math>|{{EquationRef|31}}}} | ||

| − | + | Recall that conditioning reduces the uncertainty: | |

{{NumBlk|::|<math>\begin{align} | {{NumBlk|::|<math>\begin{align} | ||

| − | + | H\left(E \mid \hat{X}\right) & = H\left(E\right) - I\left(E;\hat{X}\right) \\ | |

| − | & | + | & \leq H\left(E\right) \\ |

| − | + | & \leq H\left(P_e\right) \\ | |

| − | + | \end{align}</math>|{{EquationRef|32}}}} | |

| − | + | ||

| − | + | We can expand <math>H\left(X\mid E,\hat{X}\right)</math> as: | |

| − | \ | + | |

| + | {{NumBlk|::|<math>H\left(X \mid E, \hat{X}\right) = P\left(E=0\right)\cdot H\left(X \mid E=0, \hat{X}\right) + P\left(E=1\right)\cdot H\left(X \mid E=1, \hat{X}\right)</math>|{{EquationRef|33}}}} | ||

| + | |||

| + | Note that given <math>E=0</math>, i.e. there is no error, then there is no uncertainty in <math>X</math> given <math>\hat{X}</math>, giving us <math>H\left(X \mid E=0, \hat{X}\right)=0</math>. And once again, we know conditioning reduces uncertainty, giving us <math>H\left(X \mid E=1, \hat{X}\right) \leq H\left(X\right)</math>. Thus, recognizing that <math>P\left(E=0\right)=1-P_e</math> and <math>P\left(E=1\right)=P_e</math>, we get: | ||

| + | |||

| + | {{NumBlk|::|<math>H\left(X \mid E, \hat{X}\right) \leq P_e\cdot H\left(X\right)</math>|{{EquationRef|34}}}} | ||

| + | |||

| + | If both <math>X</math> and <math>\hat{X}</math> have symbols in the alphabet <math>\chi</math>, then applying the Gibbs inequality, we get <math>H\left(X\right)\leq \log_2\left|\chi\right|</math>, where <math>\left|\chi\right|</math> is the number of symbols in <math>\chi</math>. This gives us: | ||

| + | |||

| + | {{NumBlk|::|<math>H\left(X \mid E, \hat{X}\right) \leq P_e\cdot \log_2\left|\chi\right|</math>|{{EquationRef|35}}}} | ||

| − | + | Combining these individual results, we can then write Eq. 30 as: | |

| − | {{NumBlk|::|<math> | + | {{NumBlk|::|<math>\begin{align} |

| + | H\left(E\mid \hat{X}\right) + H\left(X \mid E, \hat{X}\right) & = H\left(X\mid \hat{X}\right) + H\left(E \mid X, \hat{X}\right) \\ | ||

| + | H\left(P_e\right) + P_e\cdot \log_2\left|\chi\right| & \geq H\left(X\mid \hat{X}\right) | ||

| + | \end{align}</math>|{{EquationRef|36}}}} | ||

| − | + | Since <math>X\rightarrow Y \rightarrow \hat{X}</math> is a Markov chain, as seen in Figs. 3 and 4, we get ''Fano's Inequality'': | |

| − | |||

| − | {{NumBlk|::|<math> | + | {{NumBlk|::|<math>H\left(X\mid Y\right) \leq H\left(X\mid \hat{X}\right) \leq H\left(P_e\right) + P_e\cdot \log_2\left|\chi\right| </math>|{{EquationRef|37}}}} |

| − | + | We can then express the error probability in terms of <math>H\left(X\mid Y\right)</math>: | |

| − | {{NumBlk|::|<math> | + | {{NumBlk|::|<math>P_e \geq \frac{H\left(X\mid Y\right) - H\left(P_e\right)}{\log_2\left|\chi\right|}</math>|{{EquationRef|38}}}} |

| − | + | Recall that: | |

{{NumBlk|::|<math>\begin{align} | {{NumBlk|::|<math>\begin{align} | ||

| − | + | H\left(P_e\right) & = P_e\cdot \log_2\frac{1}{P_e} + \left(1-P_e\right)\cdot \log_2\frac{1}{1-P_e}\\ | |

| − | + | & \leq 1 \\ | |

| − | + | \end{align}</math>|{{EquationRef|39}}}} | |

| − | \ | + | |

| + | [[File:Fanos Inequality.png|thumb|400px|Figure 4: A Venn diagram visualizing <math>X\rightarrow Y\rightarrow \hat{X}</math>.]] | ||

| + | We can further recognize that for <math>E=1</math>, or equivalently when <math>\hat{X}\neq X</math>, we know that <math>\hat{X}</math> is not equal to the actual value of <math>X</math>, thus reducing the possible values of <math>X</math> from <math>\left|\chi\right|</math> to <math>\left|\chi\right|-1</math>, thus: | ||

| + | |||

| + | {{NumBlk|::|<math>H\left(X\mid E=1, \hat{X}\right)\leq \log_2\left(\left|\chi\right|-1\right)</math>|{{EquationRef|40}}}} | ||

| + | |||

| + | This allows us to write Eq. 38 as: | ||

| + | |||

| + | {{NumBlk|::|<math>P_e \geq \frac{H\left(X\mid Y\right) - 1}{\log_2\left(\left|\chi\right|-1\right)}</math>|{{EquationRef|41}}}} | ||

| + | |||

| + | And since <math>H\left(X\mid Y\right)=H\left(X\right)-I\left(X;Y\right)</math>, we get: | ||

| + | |||

| + | {{NumBlk|::|<math>P_e \geq \frac{H\left(X\right) - I\left(X;Y\right) - 1}{\log_2\left(\left|\chi\right|-1\right)}</math>|{{EquationRef|42}}}} | ||

| − | = | + | Thus, Fano's inequality leads to a lower bound on the probability of error, for any decoding function <math>\hat{X}=g\left(Y\right)</math>, and it depends on the mutual information <math>I\left(X;Y\right)</math>, or how much information about <math>X</math> is contained in <math>Y</math>, and the size of the alphabet <math>\left|\chi\right|</math>. Thus, to reduce the lower bound of <math>P_e</math>, we should minimize <math>H\left(X\mid Y\right)</math>, or equivalently maximize <math>I\left(X;Y\right)</math>, as expected. |

| − | + | {{Note|161-A4.1 '''Activity A4.1''' The Data Processing Inequality and Fano's Inequality -- This activity introduces the concept of information loss and the error probabilities associated with entropy and mutual information.|reminder}} | |

| − | == | + | == Sources == |

| + | * Yao Xie's [https://www2.isye.gatech.edu/~yxie77/ece587/Lecture4.pdf lecture] on Data Processing Inequality. | ||

Latest revision as of 15:49, 9 November 2020

Contents

Entropy Chain Rule

As we increase the number of random variables we are dealing with, it is important to understand how this increase affects entropy. We have previously shown that for two random variables and :

-

(1)

-

We can use Venn diagrams to visualize these relationships, as seen in Fig. 1. For three random variables , , and :

-

(2)

-

In general:

-

(3)

-

Conditional Mutual Information

Conditional mutual information is defined as the expected value of the mutual information of two random variables given the value of a third random variable, and for three random variables , , and , it is defined as:

-

(4)

-

We can rewrite the definition of conditional mutual information as:

-

(5)

-

We can visualize this relationship using the Venn diagrams in Fig. 2. Compare this to our expression for the mutual information of two random variables and :

-

(6)

-

Chain Rule for Mutual Information

For random variables and :

-

(7)

-

And for random variables , and :

-

(8)

-

We can then express the conditional mutual information as:

-

(9)

-

Rearranging, we then obtain the chain rule for mutual information:

-

(10)

-

Thus, we can extend this for additional random variables:

-

(11)

-

In general:

-

(12)

-

Markovity

A Markov Chain is a random process that describes a sequence of possible events where the probability of each event depends only on the outcome of the previous event. Thus, we say that is a Markov chain in this order, denoted as:

-

(13)

-

If we can write:

-

(14)

-

Or in a more compact form:

-

(15)

-

We can use Markov chains to model how a signal is corrupted when passed through noisy channels. For example, if is a binary signal, it can change with a certain probability, , to , and it can again be corrupted to produce .

Consider the joint probability . We can express this as:

-

(16)

-

And if , we get:

-

(17)

-

Since , we can write:

-

(18)

-

Thus, we can say that and are conditionally independent given . If we think of as some past event, and as some future event, then the past and future events are independent if we know the present event . Note that this property is good definition of, as well as a useful tool for checking Markovity.

We can rewrite the joint probability as:

-

(19)

-

Therefore, if , then it follows that .

The Data Processing Inequality

Consider three random variables, , , and . The mutual information can be expressed as:

-

(20)

-

If , i.e. , , and form a Markov chain, then is conditionally independent of given , resulting in . Thus,

-

(21)

-

And since , we get the expression known as the Data Processing Inequality:

-

(21)

-

And since is also a Markov chain, then:

-

(22)

-

We can visualize this relation using the Venn diagrams in Fig. 3. Note that the equality occurs when . It should also be evident that is independent of given , and that .

Essentially, the data processing inequality implies that no amount of processing or clever manipulation of data can improve inference. Stated in another way, no clever transformation of the received code can give more information about the sent code . This implies that:

- We will loose information when we process information (downside).

- In some cases, the equality still holds even if we discard something (upside). This motivates our exploration of the concept of sufficient statistics.

Sufficient Statistics

Given a family of distributions indexed by a parameter . If is a sample from , and is any statistic, then we get the Markov chain:

-

(23)

-

From the data processing inequality, we get:

-

(24)

-

We say that a statistic is sufficient for if it has all the information contained in about . Mathematically, this means:

-

(25)

-

Or equivalently:

-

(26)

-

That is, once we know , the remaining randomness in does not depend on .

Fano's Inequality

Consider a communication system, where we transmit , and receive the corrupted version . If we try to infer from , there is always a possibility that we will make a mistake. If is our estimate of , then is a Markov chain. We then define the probability of error as:

-

(27)

-

Let us define the error random variable, , as:

-

(28)

-

We then get . Using the entropy chain rule:

-

(29)

-

Then the conditional entropy is:

-

(30)

-

Let us look at these terms one by one. If we know and , then there is no uncertainty in the value of , thus:

-

(31)

-

Recall that conditioning reduces the uncertainty:

-

(32)

-

We can expand as:

-

(33)

-

Note that given , i.e. there is no error, then there is no uncertainty in given , giving us . And once again, we know conditioning reduces uncertainty, giving us . Thus, recognizing that and , we get:

-

(34)

-

If both and have symbols in the alphabet , then applying the Gibbs inequality, we get , where is the number of symbols in . This gives us:

-

(35)

-

Combining these individual results, we can then write Eq. 30 as:

-

(36)

-

Since is a Markov chain, as seen in Figs. 3 and 4, we get Fano's Inequality:

-

(37)

-

We can then express the error probability in terms of :

-

(38)

-

Recall that:

-

(39)

-

We can further recognize that for , or equivalently when , we know that is not equal to the actual value of , thus reducing the possible values of from to , thus:

-

(40)

-

This allows us to write Eq. 38 as:

-

(41)

-

And since , we get:

-

(42)

-

Thus, Fano's inequality leads to a lower bound on the probability of error, for any decoding function , and it depends on the mutual information , or how much information about is contained in , and the size of the alphabet . Thus, to reduce the lower bound of , we should minimize , or equivalently maximize , as expected.

Sources

- Yao Xie's lecture on Data Processing Inequality.