CoE 197U Power and Energy

In this lecture, we will cover the following topics:

- Power and Energy

- Energy Efficient Techniques

- Metrics

Use the provided slide deck to guide you through this discussion. The main references for this lecture are Chapter 5 of the Digital IC book[1] and Chapter 4 of the Enabling the IoT book[2].

Power

Power consumption can be classified into dynamic power and static power. Dynamic power comes from the switching of signals, that is, the charging and discharging of capacitive loads, and it accounts for the majority of the power consumption. Static power comes from leakage currents, the currents that flow through the devices when they are supposed to be OFF.

Dynamic power can be computed as $C_L V_{DD}^2 f$, where $C_L$ is the capacitive load being charged/discharged, $V_{DD}$ is the supply voltage and $f$ is the frequency of transition. Since the majority of the power is dynamic, and since this expression depends on the square of the supply voltage, reducing the supply voltage is the most effective way to reduce power. Other ways to reduce power are to reduce the switching activity (effectively $f$) and/or the capacitance ($C_L$).
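To make the quadratic dependence on supply voltage concrete, the short sketch below evaluates the dynamic power expression at an illustrative operating point and then scales each parameter to 70% of its value in turn. The function name `dynamic_power` and all numeric values are assumptions chosen for illustration and are not taken from the slides.

```python
def dynamic_power(c_load, vdd, freq):
    """Dynamic power C_L * VDD^2 * f, in watts."""
    return c_load * vdd ** 2 * freq


# Assumed baseline operating point (illustrative only): 1 pF, 1.0 V, 1 GHz.
baseline = dynamic_power(c_load=1e-12, vdd=1.0, freq=1e9)

# Scale one parameter at a time to 70% of its baseline value.
scenarios = {
    "baseline": baseline,
    "0.7 x VDD": dynamic_power(c_load=1e-12, vdd=0.7, freq=1e9),
    "0.7 x f": dynamic_power(c_load=1e-12, vdd=1.0, freq=0.7e9),
    "0.7 x CL": dynamic_power(c_load=0.7e-12, vdd=1.0, freq=1e9),
}

for name, power in scenarios.items():
    ratio = power / baseline
    print(f"{name:>10}: {power * 1e3:.3f} mW ({ratio:.0%} of baseline)")
```

Scaling the supply voltage to 70% roughly halves the dynamic power, while the same scaling applied to frequency or capacitance saves only 30%.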

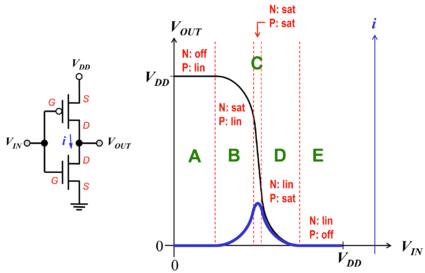

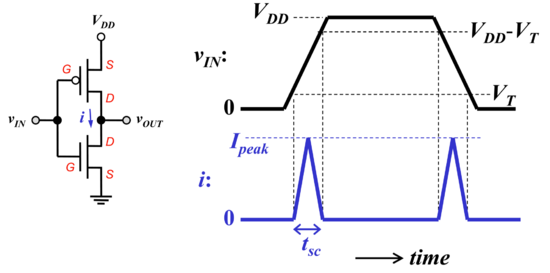

Let's take the CMOS inverter as an example. Ideally, the CMOS inverter draws no current when the output is at one of its nominal values, i.e. $V_{DD}$ or ground, so it consumes only dynamic power: power is drawn only when the output transitions from low to high or from high to low, as shown in Figs. 1 and 2. During the transition, the most current is drawn when both transistors are in the saturation region, where the NMOS and PMOS transistors simultaneously behave like closed switches. This current is called the short-circuit current or crowbar current, and it flows over a period called the short-circuit time, $t_{SC}$.

Fig. 1: The CMOS switching current[3].

Fig. 2: The CMOS transient power dissipation[3].
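As a rough estimate of the short-circuit contribution, one common simplification is to treat each short-circuit current pulse as a triangle of base $t_{SC}$ and peak $I_{peak}$. Both the triangular shape and the symbol $I_{peak}$ are assumptions introduced here, not taken from the slides. Under that assumption, the energy drawn over one full switching cycle (one rising and one falling transition) and the resulting average power, with $f$ counting full cycles per second, are approximately:

$$
E_{SC} \approx 2 \cdot \frac{1}{2} V_{DD} I_{peak} t_{SC} = V_{DD} I_{peak} t_{SC},
\qquad
P_{SC} \approx V_{DD} I_{peak} t_{SC} f
$$

This suggests that keeping the transitions fast (a small $t_{SC}$) limits the short-circuit component.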

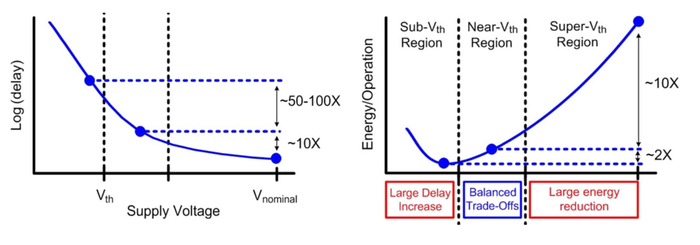

Reducing the supply voltage also degrades speed. Fig. 3 (left) illustrates the relationship between supply voltage and speed (delay). Note that the y-axis is in log scale, so a straight line on this semilog plot is what we would expect. However, notice that the rate of increase in delay (and therefore the decrease in speed) grows as we reduce the supply voltage. Looking at the energy per operation (right), we can see that it reaches a minimum at some point and starts to increase again at $V_{DD} < V_{th}$ because of the very large delay, during which leakage current continues to flow. Here we can see the advantage of lowering $V_{DD}$ to near the threshold voltage (an approach called near-threshold computing, or NTC). At this voltage, energy consumption is low (though not the minimum achievable), and a slight variation in voltage changes the energy by only a small amount.
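The shape of the energy-per-operation curve can be reproduced with a toy model. The sketch below is only an illustration under stated assumptions: the transistor current uses a smooth EKV-style interpolation between subthreshold and strong inversion, leakage is the current at zero gate voltage, and every constant (`C_LOAD`, `V_TH`, `N_VT`, `I_SCALE`, `ACTIVITY`, `LOGIC_DEPTH`) is a hypothetical value, not data from Fig. 3.

```python
import math

# All constants below are assumed, illustrative values.
C_LOAD = 1e-15       # F, switched capacitance per gate
V_TH = 0.4           # V, threshold voltage
N_VT = 1.5 * 0.026   # V, subthreshold slope factor times thermal voltage
I_SCALE = 1e-6       # A, current scale factor
ACTIVITY = 0.1       # fraction of gates switching per operation
LOGIC_DEPTH = 20     # gate delays per operation


def drain_current(v_gs):
    """Smooth subthreshold-to-strong-inversion current (EKV-style interpolation)."""
    return I_SCALE * math.log(1.0 + math.exp((v_gs - V_TH) / (2.0 * N_VT))) ** 2


def gate_delay(vdd):
    """Approximate gate delay as C * VDD / I_on."""
    return C_LOAD * vdd / drain_current(vdd)


def energy_per_op(vdd):
    """Per-gate dynamic energy plus leakage energy over one operation."""
    dynamic = ACTIVITY * C_LOAD * vdd ** 2
    leakage = drain_current(0.0) * vdd * LOGIC_DEPTH * gate_delay(vdd)
    return dynamic + leakage


# Sweep VDD from 0.15 V to 1.00 V in 10 mV steps.
voltages = [0.15 + 0.01 * i for i in range(86)]
v_min = min(voltages, key=energy_per_op)

print(f"minimum-energy VDD in this toy model: {v_min:.2f} V")
for vdd in (1.0, V_TH, v_min):
    print(f"VDD={vdd:.2f} V  delay={gate_delay(vdd):.3e} s  "
          f"energy={energy_per_op(vdd):.3e} J")
```

In this model the energy minimum falls below the assumed threshold voltage, where the delay is already orders of magnitude larger than at the nominal supply, which is the trade-off that motivates operating near threshold instead.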

In terms of node transition, aside from actual signal activity, signal statistics for different gates could also help in estimating the effect on power and energy. See slides 7-9 for this.

Metrics

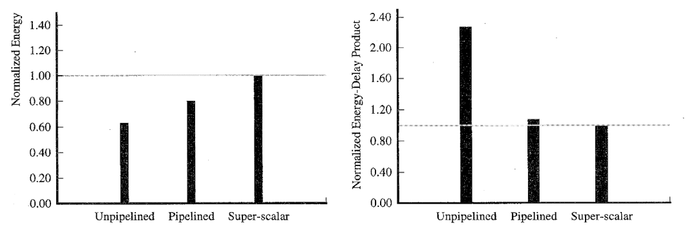

Because changing some parameters (e.g., supply voltage) affects more than one characteristic (e.g., power and speed), it is important to define the metric or metrics used to characterize or evaluate the system. The power-delay product (PDP) is one metric that has been in use for some time. The energy-delay product (EDP) is another. Fig. 4 (left) compares the energy consumption of three different microprocessors: unpipelined, pipelined and super-scalar. The differences are expected, since unpipelined processors are very simple while super-scalar processors are built for speed and performance. Using the energy-delay product as the metric instead (Fig. 4, right), we can see that the super-scalar implementation has the lowest EDP, while the unpipelined implementation has more than twice the EDP of the super-scalar one.
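The two metrics can rank the same designs differently. The sketch below computes PDP and EDP for three design points; the power and delay numbers are hypothetical values picked only to mirror the qualitative trend described above and are not the measurements plotted in Fig. 4.

```python
# Hypothetical design points: average power in W, delay per operation in ns.
designs = {
    "unpipelined": {"power_w": 1.0, "delay_ns": 10.0},
    "pipelined": {"power_w": 2.0, "delay_ns": 5.5},
    "super-scalar": {"power_w": 4.0, "delay_ns": 3.0},
}


def pdp(power_w, delay_ns):
    """Power-delay product, i.e. energy per operation, in nJ (W * ns)."""
    return power_w * delay_ns


def edp(power_w, delay_ns):
    """Energy-delay product, in nJ*ns."""
    return pdp(power_w, delay_ns) * delay_ns


for name, params in designs.items():
    print(f"{name:>12}: PDP={pdp(**params):6.1f} nJ  EDP={edp(**params):6.1f} nJ*ns")

# Lower is better for both metrics.
best_pdp = min(designs, key=lambda name: pdp(**designs[name]))
best_edp = min(designs, key=lambda name: edp(**designs[name]))
print(f"lowest PDP: {best_pdp}, lowest EDP: {best_edp}")
```

With these assumed numbers the simplest design wins on energy per operation, while the fastest design wins once delay is weighted in through the EDP.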

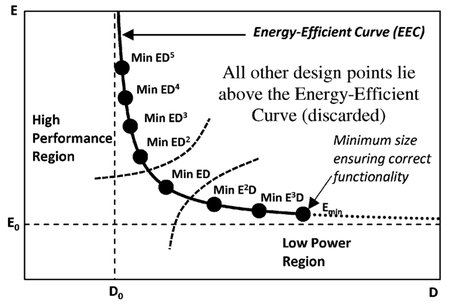

We can generalize these metrics for the energy-delay trade-off as $E^n D^m$: the higher $n$ is relative to $m$, the more we prioritize energy consumption, and vice versa (the higher $m$ is relative to $n$, the more we prioritize speed). Fig. 5 shows an energy-efficient curve (EEC), which defines the minimum $E^n D^m$ value.
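A small sketch of how the exponents shift the preferred design is given below. The three candidate design points, their names, and the helper functions `weighted_metric` and `best_design` are all hypothetical and serve only to illustrate the weighting.

```python
def weighted_metric(energy, delay, n, m):
    """Generalized energy-delay metric E^n * D^m (lower is better)."""
    return energy ** n * delay ** m


# Hypothetical (energy, delay) pairs in arbitrary units.
candidates = {
    "low-energy": (1.0, 4.0),
    "balanced": (1.5, 2.0),
    "fast": (4.0, 1.0),
}


def best_design(n, m):
    """Return the candidate that minimizes E^n * D^m."""
    return min(candidates, key=lambda name: weighted_metric(*candidates[name], n, m))


for n, m in [(2, 1), (1, 1), (1, 2)]:
    print(f"n={n}, m={m}: best design is {best_design(n, m)}")
```

Weighting energy more heavily (n > m) favors the low-energy point, weighting delay more heavily (m > n) favors the fast point, and equal weights (the EDP) pick the balanced one in this example.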

References

- [1] J. Rabaey, A. Chandrakasan, and B. Nikolic, Digital Integrated Circuits, 2nd ed., 2002

- [2] M. Alioto, ed., Enabling the Internet of Things from Integrated Circuits to Integrated Systems, Springer International Publishing, 2017

- [3] Tsu-Jae King's UCB EECS40 (Fall 2003) Lecture 27 Slides

- [4] R. G. Dreslinski, M. Wieckowski, D. Blaauw, D. Sylvester, and T. Mudge, Near-Threshold Computing: Reclaiming Moore’s Law Through Energy Efficient Integrated Circuits, Proc. IEEE, vol. 98, no. 2, pp. 253–266, Feb. 2010

- [5] R. Gonzalez and M. Horowitz, Energy Dissipation in General Purpose Microprocessors, IEEE J. Solid State Circuits, vol. 31, no. 9, pp. 1277–1284, 1996

- [6] M. Alioto, E. Consoli, and G. Palumbo, Analysis and comparison in the energy-delay-area domain of nanometer CMOS Flip-Flops: Part I-methodology and design strategies, IEEE Trans. Very Large Scale Integr. Syst., vol. 19, no. 5, pp. 725–736, 2011