Electronic Noise

In this module, we consider the noise generated by the electronic devices themselves, which arises from (1) the random motion of electrons due to thermal energy and (2) the discreteness of electric charge. We see these as thermal noise in resistances and shot noise in PN junctions, respectively. In MOS transistors, we also see flicker noise. This noise is not fundamental, but is due to the way MOS transistors are manufactured. The added uncertainty in the voltages and currents limits the smallest signal amplitude or power that our circuits can detect and/or process, which in turn constrains the transmission range and power requirements for reliable communications. Note that purely reactive elements, i.e. ideal inductors and capacitors, do not generate noise, but they can shape the frequency spectrum of the noise.

Modeling Noise

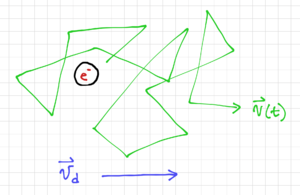

Since electronic noise is random, we cannot predict its value at any given time. However, we can describe noise in terms of its aggregate characteristics or statistics, such as its probability density function (pdf). As expected from the Central Limit Theorem, the pdf of the random movement of many electrons approaches a Gaussian distribution. Its mean is zero: each electron is constantly moving, but without any excitation it stays, on average, at the same point in space. If we add an electric field, $E$, the electron moves with an average drift velocity of magnitude $v_d = \mu_n E$, but at any point in time it is moving with an instantaneous velocity $v(t)$, as shown in Fig. 1. Note that $\mu_n$ is the electron mobility.
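The Central Limit Theorem argument above can be checked numerically. The following is a minimal sketch, not a physical model: it assumes each electron's net displacement is the sum of many independent, zero-mean steps drawn from a uniform distribution (an arbitrary choice), and the names `net_displacement` and `simulate` are hypothetical.

```python
import random
import statistics


def net_displacement(num_steps, rng):
    """Sum of many small, independent, zero-mean random steps (assumed uniform)."""
    return sum(rng.uniform(-1.0, 1.0) for _ in range(num_steps))


def simulate(num_electrons=2000, num_steps=200, seed=0):
    """Return one net displacement per simulated electron."""
    rng = random.Random(seed)
    return [net_displacement(num_steps, rng) for _ in range(num_electrons)]


samples = simulate()
mean = statistics.fmean(samples)
sigma = statistics.pstdev(samples)

# For a Gaussian, about 68% of the samples fall within one standard deviation.
within_one_sigma = sum(abs(s - mean) <= sigma for s in samples) / len(samples)

print(f"mean = {mean:.3f}")
print(f"sigma = {sigma:.3f}")
print(f"fraction within 1 sigma = {within_one_sigma:.3f}")
```

Even though each step is uniformly distributed, the sum should have a mean close to zero and roughly 68% of the samples within one standard deviation, as expected for a Gaussian.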

Noise Power

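Aside from the mean, to describe a zero-mean Gaussian random variable, $X$, we need the variance, $\sigma^2$, or the mean of $X^2$. For random voltages or currents, $x(t)$, this is equal to the average power over time, normalized to $1\,\Omega$:

$$\sigma^2 = \overline{X^2} = \lim_{T\to\infty}\frac{1}{T}\int_{-T/2}^{T/2} x^2(t)\,dt \tag{1}$$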

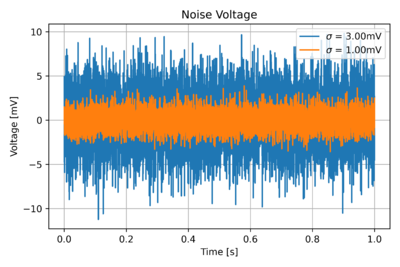

Thus, to describe noise, we can use its variance, or equivalently its average power. Fig. 2 illustrates the difference between two noise signals with different variances. Recall that $\sigma$, the square root of the variance, is the standard deviation.

Consider a noise voltage in time, $v_n(t)$, similar to the ones depicted in Fig. 2. The mean is then:

$$\overline{v_n(t)} = \lim_{T\to\infty}\frac{1}{T}\int_{-T/2}^{T/2} v_n(t)\,dt = 0 \tag{2}$$

The variance, however, is non-zero:

$$\overline{v_n^2(t)} = \lim_{T\to\infty}\frac{1}{T}\int_{-T/2}^{T/2} v_n^2(t)\,dt \neq 0 \tag{3}$$

We can also calculate the root-mean-square or RMS as:

$$v_{n,\mathrm{rms}} = \sqrt{\overline{v_n^2(t)}} \tag{4}$$
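As a rough numerical illustration of the mean, variance, and RMS of a noise voltage, the sketch below estimates all three from sampled data. The 2 mV standard deviation, the sample count, and the function name `noise_statistics` are assumptions chosen for illustration, and the time averages are approximated by averages over a finite number of samples.

```python
import math
import random


def noise_statistics(samples):
    """Return (mean, mean square, rms) of a sampled noise voltage."""
    n = len(samples)
    mean = sum(samples) / n
    # Mean square: the average power normalized to 1 ohm.
    # For zero-mean noise this is also the variance.
    mean_square = sum(v * v for v in samples) / n
    rms = math.sqrt(mean_square)
    return mean, mean_square, rms


rng = random.Random(1)
sigma = 2e-3  # assumed standard deviation of the noise voltage, in volts
v_n = [rng.gauss(0.0, sigma) for _ in range(50_000)]

mean, mean_square, rms = noise_statistics(v_n)
print(f"mean        = {mean:.3e} V")
print(f"mean square = {mean_square:.3e} V^2")
print(f"rms         = {rms:.3e} V")
```

The estimated mean should be close to zero, while the RMS value should be close to the assumed standard deviation.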

Noise Power Spectrum

We can also examine the noise in the frequency domain, specifically, how noise power is distributed over frequency. Many noise sources are white, i.e. the noise power is distributed evenly across all frequencies, as seen in Fig. 3. White noise is therefore totally unpredictable in time: there is no correlation between the noise at time $t$ and the noise at time $t + \Delta t$, no matter how small $\Delta t$ is, since a high-frequency change (small $\Delta t$) and a low-frequency change (large $\Delta t$) are equally likely.
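The lack of correlation in white noise can be illustrated with sampled data. This is a minimal sketch that assumes independent Gaussian samples are an adequate stand-in for white noise; `autocorrelation` is a hypothetical helper that estimates the average product of the signal with a delayed copy of itself.

```python
import random


def autocorrelation(samples, lag):
    """Estimate the average of x[i] * x[i + lag] over the record."""
    n = len(samples) - lag
    return sum(samples[i] * samples[i + lag] for i in range(n)) / n


rng = random.Random(2)
white = [rng.gauss(0.0, 1.0) for _ in range(20_000)]

r0 = autocorrelation(white, 0)  # lag 0 gives the noise power (variance)
for lag in (0, 1, 10, 100):
    normalized = autocorrelation(white, lag) / r0
    print(f"lag = {lag:3d}: normalized autocorrelation = {normalized:+.4f}")
```

The normalized value is exactly 1 at zero lag and should stay close to zero at every other lag, whether the lag is small or large.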

The noise power should be the same, whether we obtain it from the time or frequency domain. Thus, calculating the noise power in time and in frequency, and equating the two, we get:

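$$P_n = \overline{v_n^2(t)} = \lim_{T\to\infty}\frac{1}{T}\int_{-T/2}^{T/2} v_n^2(t)\,dt = \int_0^\infty S_n(f)\,df \tag{5}$$

where $S_n(f)$ is the noise power spectral density, in $\mathrm{V^2/Hz}$ for a noise voltage. An alternate representation of the noise spectrum is the root spectral density, $\sqrt{S_n(f)}$, with units of $\mathrm{V/\sqrt{Hz}}$.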
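To make the relation between spectral density and total noise power concrete, the sketch below integrates a one-sided power spectral density over a finite bandwidth. The 4 nV/√Hz root spectral density and the 1 MHz bandwidth are assumed values, and `noise_power_from_psd` and `white_psd` are hypothetical names.

```python
import math


def noise_power_from_psd(psd, f_low, f_high, num_points=10_000):
    """Integrate a one-sided PSD (V^2/Hz) from f_low to f_high (trapezoidal rule)."""
    df = (f_high - f_low) / num_points
    total = 0.0
    for k in range(num_points):
        f0 = f_low + k * df
        f1 = f0 + df
        total += 0.5 * (psd(f0) + psd(f1)) * df
    return total


ROOT_DENSITY = 4e-9  # assumed root spectral density, in V/sqrt(Hz)


def white_psd(f):
    """Flat (white) PSD in V^2/Hz: the same value at every frequency."""
    return ROOT_DENSITY ** 2


bandwidth = 1e6  # assumed measurement bandwidth, in Hz
power = noise_power_from_psd(white_psd, 0.0, bandwidth)  # in V^2
v_rms = math.sqrt(power)
print(f"noise power = {power:.3e} V^2")
print(f"rms noise   = {v_rms * 1e6:.3f} uV")
```

For a white spectrum the integral reduces to the density times the bandwidth, so the RMS noise is the root spectral density times the square root of the bandwidth, about 4 µV for these assumed values.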

Signal-to-Noise Ratio

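In general, we try to maximize the signal-to-noise ratio (SNR) in a communication system. For an average signal power $P_{\mathrm{signal}}$ and an average noise power $P_{\mathrm{noise}}$, the SNR in dB is defined as:

$$\mathrm{SNR} = 10\log_{10}\left(\frac{P_{\mathrm{signal}}}{P_{\mathrm{noise}}}\right)\ \mathrm{dB} \tag{6}$$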

Again, note that we are referring to random noise, and not to interference or distortion.
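The SNR definition can be sketched in a few lines of Python. The function names `snr_db` and `snr_db_from_rms` and the example power levels are assumptions for illustration. The RMS form uses a factor of 20 because power is proportional to the square of the voltage when both are measured across the same resistance.

```python
import math


def snr_db(signal_power, noise_power):
    """SNR in dB from average signal and noise powers (same units)."""
    if signal_power <= 0 or noise_power <= 0:
        raise ValueError("powers must be positive")
    return 10.0 * math.log10(signal_power / noise_power)


def snr_db_from_rms(v_signal_rms, v_noise_rms):
    """SNR in dB from RMS voltages measured across the same resistance."""
    if v_signal_rms <= 0 or v_noise_rms <= 0:
        raise ValueError("RMS voltages must be positive")
    return 20.0 * math.log10(v_signal_rms / v_noise_rms)


# Assumed example: 1 mW of signal power against 1 uW of noise power.
print(f"SNR = {snr_db(1e-3, 1e-6):.1f} dB")
```

With the assumed values, the power ratio is 1000, which corresponds to an SNR of 30 dB.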