Probability review for warm up

Ryan Antonio (Initial commit for an initial discussion on probability review)

=== Conditional Probability === | === Conditional Probability === | ||

| + | |||

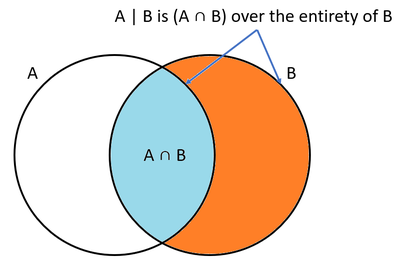

| + | [[File:A given b.PNG|thumb|right|400px| Figure 1: Simple Venn diagram showing the fractional parts for computing <math> P(A|B) </math>]] | ||

In a nutshell, ''conditional probability'' is to gain in information about an event that leads to a change in its probability. Suppose an event <math> A </math> happens with probability <math> P(A) </math>. However, when event <math> B </math> happens, this influences the probability of <math> A </math> such that we have <math> P(A|B) </math> as the conditional probability of <math> A </math> happening given <math> B </math> occurred. Mathematically we know this as: | In a nutshell, ''conditional probability'' is to gain in information about an event that leads to a change in its probability. Suppose an event <math> A </math> happens with probability <math> P(A) </math>. However, when event <math> B </math> happens, this influences the probability of <math> A </math> such that we have <math> P(A|B) </math> as the conditional probability of <math> A </math> happening given <math> B </math> occurred. Mathematically we know this as: | ||

| Line 40: | Line 42: | ||

<math> P(A|B) = \frac{P(A \cap B)}{P(B)} </math> | <math> P(A|B) = \frac{P(A \cap B)}{P(B)} </math> | ||

| − | + | Figure 1 shows a Venn diagram that can visualize this. The <math> P(A|B) </math> is the relative probability of event <math> A </math> happening with respect to event <math> B </math> happening. Therefore, it justifies that we first need to get <math> P(A \cap B) </math> then dividing this by <math> P(B) </math>. It's just a matter of shifting the scope of event <math> A </math> happening with respect to the entire space <math> S </math> to the scope of event <math> A </math> happening with respect to the space of event <math> B </math>. We can rearrange the equation to get: | |

| + | |||

| + | <math> P(A \cap B) = P(A|B)P(B) </math> | ||

| + | |||

| + | We can extend this concept to three or more sets. For example, if <math> A </math>, <math> B </math>, and <math> C </math> are some events in a sample space, then we can compute the intersection of all events as: | ||

<math> P(A \cap B \cap C) = P(C| A \cap B)P(B | A) P(A) </math> | <math> P(A \cap B \cap C) = P(C| A \cap B)P(B | A) P(A) </math> | ||

| − | [[File: | + | [[File:Simple partition.PNG|400px|thumb|right|Figure 2: Simple partition example. The entire space <math> S </math> is cut into different <math> E_i </math> parts. The event <math> A </math> is a subset of the space and it constitutes different fractions of <math> E_i </math> components.]] |

| − | |||

| − | The | ||

| − | <math> | + | Here's another interesting formula: Suppose we have a partition set <math> Y = \{E_1, E_2, E_3, ... E_n \} </math> of some sample space <math> S </math> with each <math> P(E_i) \neq 0 </math>. In other words, we just cut the sample space <math> S </math> into several partitions. Let event <math> A \ \epsilon \ Y </math>. In other words, event <math> A </math> is just a part of the partition <math> Y </math>. We have: |

| − | + | <math> P(A) = \sum_{i=1}^n P(A|E_i)P(E_i) </math> | |

| + | Figure 2 visualizes this formula. The entire space is <math> S </math> and we cut it into several <math> E_i </math> components. Suppose event <math> A </math> is also part of the entire space <math> S </math>, then it's simply the sum of all <math> P(E_i \cap A) = P(A|E_i)P(E_i) </math> components. You might wonder why the summation is from <math> i = 1 </math> up to <math> i=n </math> when we could just get the probabilities where it only matters? You are correct to think that we only need to get those that have a contribution to <math> P(A) </math> but the equation is generalized to include all partitions. For example, <math> P(E_6 \cap A) = 0 </math> because event <math> E_6 </math> and event <math> A </math> can never happen. So it's okay to generalize the formula to include all events even when their intersections don't happen at all. | ||

== Random Variables == | == Random Variables == | ||

== Exercises == | == Exercises == | ||

Revision as of 17:36, 4 February 2022


Probability Review

In this section, let's go through a quick review of probability theory. The best way to refresh ourselves is to read a few notes and go straight to problem exercises. From EEE 137, we can summarize probability as the mathematical framework we use to investigate the properties of mathematical models of chance phenomena. It is also a generalized notion of weights: we weigh events to see how likely they are to occur. In most cases probability is based on the relative frequency of events, while some approaches look at fractions based on sets. Let's review some important properties and definitions, then proceed immediately to practice exercises.

Basic Properties of Probability

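Suppose we have events <math> A </math> and <math> B </math> which are subsets of a sample space <math> S </math> (i.e., <math> A \subseteq S </math> and <math> B \subseteq S </math>). Let <math> P(A) </math> be the probability that event <math> A </math> happens, and <math> P(B) </math> be the probability that event <math> B </math> happens. Then some of the basic properties follow: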

- <math> 0 \leq P(A) \leq 1 </math> and <math> 0 \leq P(B) \leq 1 </math>

- <math> P(S) = 1 </math> and <math> P(\emptyset) = 0 </math>. Here <math> \emptyset </math> is the null or empty set.

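- <math> P(A \cup B) = P(A) + P(B) </math> if <math> A </math> and <math> B </math> are disjoint (i.e., <math> A \cap B = \emptyset </math>).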

- <math> P(A) \leq P(B) </math> if <math> A \subseteq B </math>, and vice versa.

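- <math> P(A^c) = 1 - P(A) </math> and <math> P(B^c) = 1 - P(B) </math>, where <math> A^c </math> and <math> B^c </math> are the complements of <math> A </math> and <math> B </math>.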

- <math> P(A \cap B) = P(A) </math> whenever <math> A \subseteq B </math>, and vice versa.

- <math> P(A \cup B) = P(A) + P(B) - P(A \cap B) </math>. This can be extended to three or more sets or variables. This one is left as an exercise for you.

- Let's say <math> \{E_1, E_2, \ldots, E_n\} </math> is a partition of <math> S </math>, then <math> \sum_{i=1}^n P(E_i) = 1 </math>.
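To see these properties in action, here is a small Python sketch that checks several of them on one roll of a fair die. The die, the events, and the helper `P` are assumptions chosen for illustration. Events are Python sets, so `|` and `&` in the code mean union and intersection (not the conditional bar in <math> P(A|B) </math>).

```python
from fractions import Fraction

# Assumed example: sample space S for one roll of a fair six-sided die.
S = frozenset(range(1, 7))

def P(event):
    """Probability of an event (a subset of S) when all outcomes are equally likely."""
    return Fraction(len(event & S), len(S))

A = frozenset({2, 4, 6})   # "roll is even"
B = frozenset({4, 5, 6})   # "roll is at least 4"

# 0 <= P(A) <= 1, P(S) = 1, P(empty set) = 0
assert 0 <= P(A) <= 1 and P(S) == 1 and P(frozenset()) == 0
# Complement rule: P(A^c) = 1 - P(A)
assert P(S - A) == 1 - P(A)
# Monotonicity: C is a subset of A, so P(C) <= P(A) and P(C & A) = P(C)
C = frozenset({2, 4})
assert C <= A and P(C) <= P(A) and P(C & A) == P(C)
# Additivity for disjoint events
D = frozenset({1, 3})
assert not (A & D) and P(A | D) == P(A) + P(D)
# Inclusion-exclusion for two events
assert P(A | B) == P(A) + P(B) - P(A & B)
# A partition of S: the probabilities of the parts sum to 1
partition = [frozenset({1, 2}), frozenset({3, 4}), frozenset({5, 6})]
assert sum(P(E) for E in partition) == 1

print(P(A | B))  # 2/3
```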

Principle of Symmetry

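Let <math> S </math> be a finite sample space with <math> n </math> outcomes <math> s_1, s_2, \ldots, s_n </math> that are all physically identical, i.e., objects having the same properties and characteristics. In this case we have:

<math> P(s_i) = \frac{1}{n}, \quad i = 1, 2, \ldots, n </math>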
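A minimal Python sketch of the principle of symmetry, assuming every outcome in the list is equally likely: each of the <math> n </math> outcomes gets probability <math> 1/n </math>, and by additivity an event gets (number of its outcomes)/<math> n </math>. The function name `symmetric_probability` and the coin and die examples are hypothetical.

```python
from fractions import Fraction

def symmetric_probability(event, sample_space):
    """Principle of symmetry: each of the n identical outcomes gets 1/n,
    so an event's probability is (number of its outcomes) / n."""
    n = len(sample_space)
    return Fraction(len(set(event) & set(sample_space)), n)

# Hypothetical examples: a fair coin and a fair die.
coin = ["H", "T"]
die = [1, 2, 3, 4, 5, 6]
print(symmetric_probability(["H"], coin))     # 1/2
print(symmetric_probability([3], die))        # 1/6
print(symmetric_probability([1, 2, 3], die))  # 1/2
```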

Subjective Probabilities

These are often expressed in terms of odds. For example, suppose a betting site is offering odds of <math> r </math> to <math> s </math> on Team Secret beating Team TSM. This means that out of a total of <math> r + s </math> equally valued coins, the bettor is willing to bet <math> r </math> of them that Team Secret beats Team TSM. So if the outcome <math> E </math> is the event that Team Secret beats Team TSM, then we have:

<math> P(E) = \frac{r}{r+s} </math>
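Converting between odds and probability is simple arithmetic. The sketch below assumes that odds of <math> r </math> to <math> s </math> in favor of <math> E </math> mean <math> P(E) = r/(r+s) </math>, as above. The function names and the 3-to-1 example are hypothetical.

```python
from fractions import Fraction

def odds_to_probability(r, s):
    """Odds of r to s in favor of an event E give P(E) = r / (r + s)."""
    if r < 0 or s < 0 or r + s == 0:
        raise ValueError("odds must be non-negative and not both zero")
    return Fraction(r, r + s)

def probability_to_odds(p):
    """Inverse direction: P(E) = p gives odds p : (1 - p), reduced to integers.
    Requires 0 <= p < 1."""
    ratio = Fraction(p) / (1 - Fraction(p))  # r / s as a reduced fraction
    return ratio.numerator, ratio.denominator

print(odds_to_probability(3, 1))            # 3/4
print(probability_to_odds(Fraction(3, 4)))  # (3, 1)
```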

Relative Frequency

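Suppose we are monitoring a particular outcome <math> E </math> and we observe that <math> E </math> occurs in <math> m </math> out of <math> n </math> experiments (or repetitions). We define the relative frequency of <math> E </math> based on <math> n </math> experiments as:

<math> f_n(E) = \frac{m}{n} </math>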
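The relative frequency is easy to simulate. This Python sketch assumes a fair six-sided die as the experiment and a hypothetical helper `relative_frequency`. With a fixed seed it counts how often a 6 shows up; for large <math> n </math> the ratio <math> m/n </math> should land close to <math> 1/6 </math>.

```python
import random

def relative_frequency(outcome, experiment, n, seed=0):
    """Estimate f_n(E) = m / n: run `experiment` n times and count
    how many times (m) it produces `outcome`."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    m = sum(1 for _ in range(n) if experiment(rng) == outcome)
    return m / n

def roll_die(rng):
    # Assumed experiment: one roll of a fair six-sided die.
    return rng.randint(1, 6)

for n in (10, 1_000, 100_000):
    print(n, relative_frequency(6, roll_die, n))
```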

Conditional Probability

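Figure 1: Simple Venn diagram showing the fractional parts for computing <math> P(A|B) </math>

In a nutshell, conditional probability describes how gaining information about one event changes the probability of another. Suppose an event <math> A </math> happens with probability <math> P(A) </math>. If we learn that event <math> B </math> has happened, this can change the probability of <math> A </math>, and we write <math> P(A|B) </math> for the conditional probability of <math> A </math> happening given that <math> B </math> occurred. Mathematically, provided <math> P(B) > 0 </math>, we define this as:

<math> P(A|B) = \frac{P(A \cap B)}{P(B)} </math>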

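Figure 1 shows a Venn diagram that visualizes this. <math> P(A|B) </math> is the probability of event <math> A </math> relative to event <math> B </math>: once we know that <math> B </math> happened, only the part of <math> A </math> that lies inside <math> B </math> can still occur. This is why we first compute <math> P(A \cap B) </math> and then divide it by <math> P(B) </math>. It's just a matter of shifting the scope from event <math> A </math> within the entire space <math> S </math> to event <math> A </math> within the space of event <math> B </math>. We can rearrange the equation to get:

<math> P(A \cap B) = P(A|B)P(B) </math>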
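A small counting sketch of the definition and the rearranged product rule, assuming two fair dice so that all 36 ordered outcomes are equally likely. The events and helper names (`P`, `P_given`) are hypothetical; `&` is Python's set intersection.

```python
from fractions import Fraction
from itertools import product

# Assumed sample space S: ordered outcomes of rolling two fair dice (36 equally likely pairs).
S = set(product(range(1, 7), repeat=2))

def P(event):
    return Fraction(len(event), len(S))

def P_given(a, b):
    """Conditional probability P(A|B) = P(A ∩ B) / P(B), defined only when P(B) > 0."""
    if P(b) == 0:
        raise ValueError("P(B) must be positive")
    return P(a & b) / P(b)

A = {w for w in S if sum(w) == 8}   # the two dice sum to 8
B = {w for w in S if w[0] == 3}     # the first die shows 3

print(P(A))           # 5/36
print(P_given(A, B))  # 1/6
# Product rule: P(A ∩ B) = P(A|B) P(B)
assert P(A & B) == P_given(A, B) * P(B)
```

Knowing the first die shows 3 changes the chance of a sum of 8 from <math> 5/36 </math> to <math> 1/6 </math>, because only the outcome (3, 5) remains inside <math> B </math>.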

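We can extend this concept to three or more sets. For example, if <math> A </math>, <math> B </math>, and <math> C </math> are events in a sample space, then we can compute the probability of the intersection of all three events as:

<math> P(A \cap B \cap C) = P(C| A \cap B)P(B | A) P(A) </math>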
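The three-event chain rule can be checked the same way. This sketch assumes a hypothetical experiment of drawing three cards in order, without replacement, from a 52-card deck, with <math> A </math>, <math> B </math>, <math> C </math> meaning that the first, second, and third cards are aces. It counts each conditional factor, compares it with the value read off the deck, and checks the product against a brute-force count.

```python
from fractions import Fraction
from itertools import permutations

# Hypothetical experiment: draw 3 cards in order, without replacement, from a 52-card deck.
# Cards are numbered 0-51 and cards 0-3 are taken to be the four aces.
ACES = set(range(4))
S = set(permutations(range(52), 3))   # 52 * 51 * 50 = 132,600 equally likely ordered draws

A = {w for w in S if w[0] in ACES}    # first card is an ace
B = {w for w in S if w[1] in ACES}    # second card is an ace
C = {w for w in S if w[2] in ACES}    # third card is an ace

# Each factor of the chain rule, computed by counting inside the conditioning event
p_A = Fraction(len(A), len(S))                       # P(A)
p_B_given_A = Fraction(len(A & B), len(A))           # P(B|A)
p_C_given_AB = Fraction(len(A & B & C), len(A & B))  # P(C|A ∩ B)

# The same factors read directly off the deck: 4/52, 3/51, 2/50
assert (p_A, p_B_given_A, p_C_given_AB) == (Fraction(4, 52), Fraction(3, 51), Fraction(2, 50))

chain = p_C_given_AB * p_B_given_A * p_A
direct = Fraction(len(A & B & C), len(S))            # P(A ∩ B ∩ C) by brute force
print(chain, direct)  # 1/5525 1/5525
```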

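Figure 2: Simple partition example. The entire space <math> S </math> is cut into different <math> E_i </math> parts. The event <math> A </math> is a subset of the space and it covers different fractions of the <math> E_i </math> components.

Here's another interesting formula, the law of total probability. Suppose we have a partition <math> Y = \{E_1, E_2, E_3, \ldots, E_n\} </math> of some sample space <math> S </math> with each <math> P(E_i) \neq 0 </math>. In other words, we cut the sample space <math> S </math> into several pieces that do not overlap (<math> E_i \cap E_j = \emptyset </math> for <math> i \neq j </math>) and that together cover all of <math> S </math>. Let <math> A </math> be any event in <math> S </math> (i.e., <math> A \subseteq S </math>); it does not need to be one of the pieces of <math> Y </math>. We have:

<math> P(A) = \sum_{i=1}^n P(A|E_i)P(E_i) </math>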

Figure 2 visualizes this formula. The entire space is <math> S </math> and we cut it into several <math> E_i </math> components. Since the pieces <math> A \cap E_i </math> do not overlap and together make up <math> A </math>, <math> P(A) </math> is simply the sum of all the <math> P(A \cap E_i) = P(A|E_i)P(E_i) </math> components. You might wonder why the summation runs from <math> i = 1 </math> up to <math> i = n </math> when only some of the terms actually contribute to <math> P(A) </math>. You are right that only those terms matter, but the formula is written generally to include all partitions. For example, <math> P(E_6 \cap A) = 0 </math> because events <math> E_6 </math> and <math> A </math> can never happen together. A term like this simply contributes zero, so it is fine to include every <math> E_i </math> in the sum even when its intersection with <math> A </math> is empty.
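A short sketch of the partition formula with made-up numbers: four machines <math> E_1, \ldots, E_4 </math> are assumed to produce all the parts in a factory, and <math> A </math> is the event that a randomly chosen part is defective. The machine shares, defect rates, and the function name `total_probability` are hypothetical. The fourth machine never produces defects, so its term is zero, just like the <math> E_6 </math> term above.

```python
from fractions import Fraction

def total_probability(p_parts, p_a_given_parts):
    """Law of total probability: P(A) = sum_i P(A|E_i) P(E_i).
    p_parts[i] = P(E_i) for a partition of S; p_a_given_parts[i] = P(A|E_i)."""
    if sum(p_parts) != 1:
        raise ValueError("the P(E_i) of a partition must sum to 1")
    return sum(pa * pe for pa, pe in zip(p_a_given_parts, p_parts))

# Hypothetical numbers: share of all parts made by each machine, P(E_i) ...
shares = [Fraction(2, 5), Fraction(3, 10), Fraction(1, 5), Fraction(1, 10)]
# ... and each machine's defect rate, P(A|E_i). Machine 4 contributes a zero term.
defect_rates = [Fraction(1, 100), Fraction(2, 100), Fraction(5, 100), Fraction(0)]

print(total_probability(shares, defect_rates))  # 1/50
```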