Difference between revisions of "161-A2.1"

| Line 115: | Line 115: | ||

Given a source with alphabet <math>A=\{a_1, a_2, a_3, a_4, a_5\}</math>, with probabilities <math>P = \{0.15, 0.2, 0.25, 0.25, 0.15\}</math>, generate a Huffman code for this source. | Given a source with alphabet <math>A=\{a_1, a_2, a_3, a_4, a_5\}</math>, with probabilities <math>P = \{0.15, 0.2, 0.25, 0.25, 0.15\}</math>, generate a Huffman code for this source. | ||

| − | The first step is to sort the symbols according to their probabilities. | + | The first step is to sort the symbols according to their probabilities, as shown in Fig. 5. We then choose the two symbols with the lowest probabilities, and combine them into a new symbol, whose probability is the sum of the two combined symbols. |

== Activity: Compression-Decompression == | == Activity: Compression-Decompression == | ||

Revision as of 09:14, 19 September 2020

- Activity: Source Coding

- Instructions: In this activity, you are tasked to

- Walk through the examples.

- Write a short program to compress and decompress a redundant file.

- Should you have any questions, clarifications, or issues, please contact your instructor as soon as possible.

Contents

Example 1: Uniquely Decodable Codes

Let us try to encode a source with just four symbols in its alphabet, i.e. , with probability distribution . We can calculate the entropy of this source as:

-

(1)

-

Let us look at a few bit sequence assignments and see if they are uniquely decodable or not.

| Symbol | Probability | Code 1 | Code 2 | Code 3 | Code 4 |

|---|---|---|---|---|---|

| 0.5 | 0 | 0 | 0 | 0 | |

| 0.25 | 0 | 1 | 10 | 01 | |

| 0.125 | 1 | 00 | 110 | 011 | |

| 0.125 | 10 | 11 | 111 | 0111 | |

| Average Code Length: | 1.125 | 1.25 | 1.75 | 1.875 | |

Recall that Shannon's Noiseless Coding Theorem states that . Thus, codes 1 and 2 are not uniquely decodable since they have average code lengths less than .

For code 1, since there are two symbols sharing an encoded symbol, we can say that it is not a distinct code, and therefore not uniquely decodable. We can check code 2 by taking a sequence of symbols encoded using this code: . We can easily see that this message can be decoded in different ways, either as , or .

Code 3 has one of the most desirable properties of a code: having its average length equal to the entropy of the source, and according to Shannon, you cannot have a uniquely decodable code with an average length shorter than this. We can also see that for a sequence of symbols encoded using code 3, there is only one way you can decode the message, thus it is uniquely decodable.

Code 4 is also uniquely decodable, however, it is not instantaneous, since the decoder needs to see the start of the next symbol to determine the end of the current symbol. Also note that the average length of code 4 is longer than the .

We can use the Kraft-McMillan Inequality, , to test if a code is uniquely decodable. Tabulating the values, we get:

| Code | |

|---|---|

| 1 | 1.75 |

| 2 | 1.5 |

| 3 | 1.0 |

| 4 | 0.9375 |

As expected, codes 1 and 2 have , and thus, are not uniquely decodable, while codes 3 and 4 have , and hence, they are uniquely decodable.

Example 2: Uniformly Distributed Symbols

Let us consider the case when the symbols are uniformly distributed, i.e. . We can then calculate the entropy of the source as:

-

(2)

-

In this case, the source is incompressible. Thus, we can just use a fixed length code .

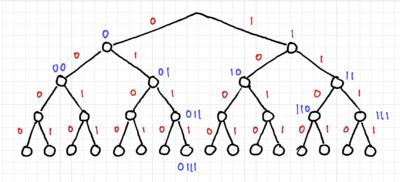

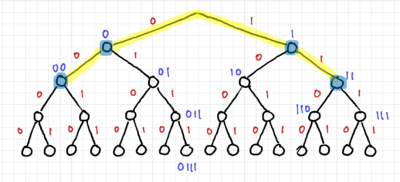

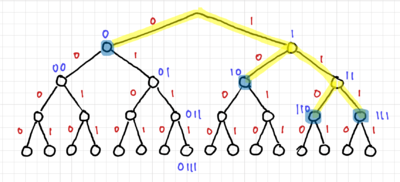

Example 3: Prefix Codes

In prefix codes or prefix-free codes, no codeword is a proper prefix of another. One way to test this is to use trees. Fig. 1 shows a binary tree with 4 levels. This three can represent codewords with lengths of 1 to 4 bits. Thus, for a prefix code, all the codewords should only be at the leaves of the tree, or equivalently, at nodes without any codewords further down the tree.

The tree for code 2 is shown in Fig. 2, and we can easily see that code 2 is not a prefix code since there are codewords within the tree. Fig. 3 shows the tree for code 3, and since all the codewords are at the edges of the tree, it is a prefix code, and thus uniquely decodable and instantaneous. Lastly, we have the tree for code 4 in Fig. 4, where only one of the codewords is at the edge of the tree. Thus, code 4 is not a prefix code.

Example 4: Huffman Coding

Huffman codes are an optimal variable-length prefix code, commonly used for lossless data compression. The algorithm for generating these codes was developed by David Huffman, and was published in his 1952 paper[1] when he was a graduate student at MIT. Similar to all our entropy-based codes, shorter codes are assigned to symbols with higher probabilities.

Coding the Source

Given a source with alphabet , with probabilities , generate a Huffman code for this source.

The first step is to sort the symbols according to their probabilities, as shown in Fig. 5. We then choose the two symbols with the lowest probabilities, and combine them into a new symbol, whose probability is the sum of the two combined symbols.