Difference between revisions of "Shannon's Communication Theory"

| Line 11: | Line 11: | ||

One very important question to ask is: "How efficiently can we encode the information that we want to send through the channel?" To answer this, let us assume: (1) the channnel is noiseless, and (2) the receiver can accurately decode the symbols transmitted through the channel. First, let us define a few things. | One very important question to ask is: "How efficiently can we encode the information that we want to send through the channel?" To answer this, let us assume: (1) the channnel is noiseless, and (2) the receiver can accurately decode the symbols transmitted through the channel. First, let us define a few things. | ||

| − | A code is ''distinct'' if each codeword is distinguishable from every other, i.e. the mapping from source messages to codewords is one-to-one. A distinct code is ''uniquely decodable'' if every codeword is identifiable when immersed in a sequence of codewords. | + | A code is '''distinct''' if each codeword is distinguishable from every other, i.e. the mapping from source messages to codewords is one-to-one. A distinct code is '''uniquely decodable''' (UD) if every codeword is identifiable when immersed in a sequence of codewords. |

Let <math>C=\{c_1, c_2, \ldots, c_r\}</math> be the alphabet of the channel, or in other words, the set of symbols that can be sent through the channel. Thus, encoding the source alphabet <math>A</math> can be expressed as a function, <math>f:A\rightarrow C^*</math>, where <math>C^*</math> is the set of all possible finite strings of symbols (or codewords) from <math>C</math>. | Let <math>C=\{c_1, c_2, \ldots, c_r\}</math> be the alphabet of the channel, or in other words, the set of symbols that can be sent through the channel. Thus, encoding the source alphabet <math>A</math> can be expressed as a function, <math>f:A\rightarrow C^*</math>, where <math>C^*</math> is the set of all possible finite strings of symbols (or codewords) from <math>C</math>. | ||

| Line 18: | Line 18: | ||

==== The Kraft-McMillan Inequality ==== | ==== The Kraft-McMillan Inequality ==== | ||

| − | A code with lengths <math>\ell_1, \ell_2, \ldots, \ell_n</math> is uniquely decodable | + | A code with lengths <math>\ell_1, \ell_2, \ldots, \ell_n</math> is uniquely decodable if and only if: |

{{NumBlk|::|<math>K=\sum_{i=1}^n \frac{1}{r^{\ell_i}}\leq 1</math>|{{EquationRef|1}}}} | {{NumBlk|::|<math>K=\sum_{i=1}^n \frac{1}{r^{\ell_i}}\leq 1</math>|{{EquationRef|1}}}} | ||

Revision as of 19:21, 14 September 2020

Contents

A First Look at Shannon's Communication Theory

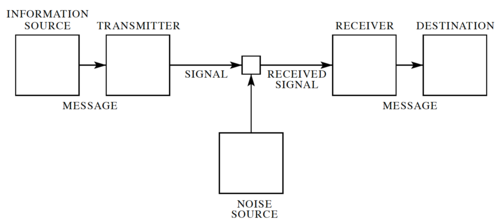

In his landmark 1948 paper[1], Claude Shannon developed a general model for communication systems, as well as a framework for analyzing these systems. The model has three components: (1) the sender or source, (2) the channel, and (3) the receiver or sink. The model also includes the transmitter that encodes the message into a signal, the receiver, for decoding the signal back into a message, as well the noise of the channel, as shown in Fig. 1.

In Shannon's discrete model, the source provides a stream of symbols from a finite alphabet, , which are then encoded. The code is sent through the channel, which could be corrupted by noise, and when the code reaches the other end, it is decoded by the receiver, and then the sink extracts information from the steam of symbols.

Note that sending information in space, e.g. from here to there is equivalent sending information in time, e.g. from now to then. Thus, Shannon's theory applies to both information transmission and information storage.

Shannon's Noiseless Coding Theorem

One very important question to ask is: "How efficiently can we encode the information that we want to send through the channel?" To answer this, let us assume: (1) the channnel is noiseless, and (2) the receiver can accurately decode the symbols transmitted through the channel. First, let us define a few things.

A code is distinct if each codeword is distinguishable from every other, i.e. the mapping from source messages to codewords is one-to-one. A distinct code is uniquely decodable (UD) if every codeword is identifiable when immersed in a sequence of codewords.

Let be the alphabet of the channel, or in other words, the set of symbols that can be sent through the channel. Thus, encoding the source alphabet can be expressed as a function, , where is the set of all possible finite strings of symbols (or codewords) from .

Let where , i.e. the length of the string of channel symbols encoding the symbol .

The Kraft-McMillan Inequality

A code with lengths is uniquely decodable if and only if:

-

(1)

-

To outline the proof, let's first evaluate :

-

(2)

-

Let . Thus, the minimum value of is , when all the codewords are 1 bit long, and the maximum is , when all the codewords have the maximum length. We can then write:

-

(3)

-

Where is the number of combinations of codewords that have a combined length of . Note that the number of distinct codewords of length is . If this code is uniquely decodable, then each sequence can represent one and only one sequence of codewords.