Latest revision as of 17:33, 17 February 2022

Probability Review

In this section, let's go through a quick review of probability theory. The best way to refresh ourselves is to read a few notes and go straight to the exercises. From your EEE 137, we can summarize probability as a mathematical tool that we use to investigate the properties of mathematical models of chance phenomena. It is also a generalized notion of weight, whereby we weigh events to see how likely they are to occur. In most cases probability is based on the relative frequency of events, while some approaches look at fractions based on sets. Let's review some important properties and definitions, then proceed immediately to the practice exercises.

Basic Properties of Probability

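Suppose we have events $A$ and $B$ which are subsets of a sample space $S$ (i.e., $A, B \subseteq S$). Let $P(A)$ be the probability that event $A$ happens, and $P(B)$ be the probability that event $B$ happens. Then some of the basic properties follow:

- $0 \leq P(A) \leq 1$ and $0 \leq P(B) \leq 1$

- $P(S) = 1$ and $P(\varnothing) = 0$. The $\varnothing$ is the null or empty set.

- $P(A \cup B) = P(A) + P(B)$ if $A$ and $B$ are disjoint.

- $P(A - B) = P(A) - P(B)$ if $B \subseteq A$ and vice versa.

- $P(\bar{A}) = 1 - P(A)$ and $P(\bar{B}) = 1 - P(B)$

- $P(A) \leq P(B)$ whenever $A \subseteq B$ and vice versa.

- $P(A \cup B) = P(A) + P(B) - P(A \cap B)$. This can be extended to $n$ sets or variables. This one is left as an exercise for you.

- Let's say $\{E_1, E_2, \ldots, E_n\}$ is a partition of $S$ then $\sum_{i=1}^{n} P(E_i) = 1$.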
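The properties above are easy to sanity-check numerically. Here is a minimal sketch, assuming a single fair die as the sample space; the events `A`, `B`, and `C` and the helper `prob` are made up for illustration only.

```python
from fractions import Fraction

# Sample space of one fair die; every outcome is assumed equally likely.
S = frozenset(range(1, 7))

def prob(event):
    """Probability of an event (a subset of S) under equally likely outcomes."""
    return Fraction(len(event & S), len(S))

A = frozenset({2, 4, 6})  # hypothetical event: an even number
B = frozenset({1, 2})     # hypothetical event: a number less than 3
C = frozenset({2})        # hypothetical event with C a subset of A

# Complement rule: P(not A) = 1 - P(A)
assert prob(S - A) == 1 - prob(A)
# General union rule: P(A u B) = P(A) + P(B) - P(A n B)
assert prob(A | B) == prob(A) + prob(B) - prob(A & B)
# Difference rule, valid here because C is a subset of A
assert prob(A - C) == prob(A) - prob(C)
# Monotonicity: C is a subset of A, so P(C) <= P(A)
assert prob(C) <= prob(A)

print(prob(A | B))  # 2/3
```

Using `Fraction` keeps every probability exact, so the equalities can be compared directly without floating-point tolerance.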

Principle of Symmetry

Let $S$ be a finite sample space with outcomes or events $\{A_1, A_2, \ldots, A_n\}$ which are all physically identical, or objects having the same properties and characteristics. In this case we have:

$$P(A_i) = \frac{1}{n} \quad \text{for all } 1 \leq i \leq n.$$

Subjective Probabilities

These are often expressed in terms of odds. For example, suppose a betting site is offering odds of $x$ to $y$ on Team Secret beating Team TSM. This means that out of the total $(x + y)$ equally valued coins, the bettor is willing to bet $x$ of them that Team Secret beats Team TSM. So if the outcome $A$ is the event that Team Secret beats Team TSM then we have:

$$P(A) = \frac{x}{x + y}$$

Relative Frequency

Suppose we are monitoring a particular outcome $A$ and we observe that $A$ occurs in $m$ out of $n$ experiments (or repetitions). We define the relative frequency of $A$ based on $n$ experiments as:

$$f_n(A) = \frac{m}{n}$$

Conditional Probability

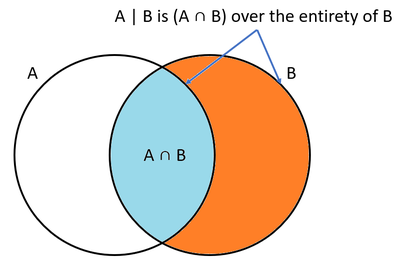

In a nutshell, conditional probability captures how a gain in information about an event leads to a change in its probability. Suppose an event $A$ happens with probability $P(A)$. However, when event $B$ happens, this influences the probability of $A$ such that we have $P(A|B)$ as the conditional probability of $A$ happening given that $B$ occurred. Mathematically we know this as:

$$P(A|B) = \frac{P(A \cap B)}{P(B)}$$

Figure 1 shows a Venn diagram that can visualize this. The $P(A|B)$ is the relative probability of event $A$ happening with respect to event $B$ happening. This is why we first need to get $P(A \cap B)$ and then divide it by $P(B)$. It's just a matter of shifting the scope of event $A$ happening with respect to the entire space $S$ to the scope of event $A$ happening with respect to the space of event $B$. We can rearrange the equation to get:

$$P(A \cap B) = P(A|B) P(B)$$

We can extend this concept to three or more sets. For example, if $A$, $B$, and $C$ are some events in a sample space, then we can compute the intersection of all events as:

$$P(A \cap B \cap C) = P(A) P(B|A) P(C|A \cap B)$$

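Here's another interesting formula: Suppose we have a partition set $\{E_1, E_2, \ldots, E_n\}$ of some sample space $S$ with each $P(E_i) > 0$. In other words, we just cut the sample space into several partitions. Let event $A \subseteq S$. In other words, event $A$ is just a part of the partitioned space $S$. We have:

$$P(A) = \sum_{i=1}^{n} P(A \cap E_i) = \sum_{i=1}^{n} P(A|E_i) P(E_i)$$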

Figure 2 visualizes this formula. The entire space is $S$ and we cut it into several components. Suppose event $A$ is also part of the entire space $S$, then it's simply the sum of all components. You might wonder why the summation is from $i = 1$ up to $n$ when we could just get the probabilities where it only matters? You are correct to think that we only need to get those that have a contribution to $A$ but the equation is generalized to include all partitions. For example, $P(A \cap E_j) = 0$ for any component $E_j$ where event $A$ and event $E_j$ can never happen together. So it's okay to generalize the formula to include all events even when their intersections don't happen at all.
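To see the partition formula at work, here is a small sketch. The fair-die sample space, the three-part partition, and the event `A` are assumptions chosen for illustration; they do not correspond to Figure 2.

```python
from fractions import Fraction

# Assumed sample space: one fair die, cut into three partitions.
S = frozenset(range(1, 7))
partition = [frozenset({1, 2}), frozenset({3, 4}), frozenset({5, 6})]
A = frozenset({1, 2, 3})  # hypothetical event; it never overlaps {5, 6}

def prob(event):
    """Probability of an event under equally likely outcomes."""
    return Fraction(len(event), len(S))

def cond_prob(a, b):
    """Conditional probability P(a | b) = P(a n b) / P(b)."""
    return prob(a & b) / prob(b)

# Sum P(A | E_i) P(E_i) over every partition, including the one
# that contributes nothing.
total = sum(cond_prob(A, E) * prob(E) for E in partition)

print(total, prob(A))  # 1/2 1/2
```

The third partition contributes exactly zero, which is why it is harmless to keep it in the summation.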

Bayes' Theorem

Bayes' theorem is an extension of conditional probability. Consider again that we have events $A$ and $B$ of some sample space $S$. Bayes' theorem states that:

$$P(A|B) = \frac{P(B|A) P(A)}{P(B)}$$

We can rearrange it to be aesthetically symmetric:

$$P(A|B) P(B) = P(B|A) P(A)$$

Bayes' theorem can be very useful to describe the probability of an event that is based on prior knowledge of conditions that may be related to the event.
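As a quick numerical illustration, the sketch below applies Bayes' theorem to the hospital counts from the table in Exercise 4 (34, 49, 41, 44). The function name `bayes` and the variable names are our own choices, not part of the original notes.

```python
from fractions import Fraction

def bayes(p_b_given_a, p_a, p_b):
    """Return P(A|B) computed from P(B|A), P(A), and P(B)."""
    return p_b_given_a * p_a / p_b

# Counts taken from the Exercise 4 table.
total = 34 + 49 + 41 + 44                     # all babies
p_over30 = Fraction(34 + 41, total)           # P(A): mother over 30
p_boy = Fraction(34 + 49, total)              # P(B): baby is a boy
p_boy_given_over30 = Fraction(34, 34 + 41)    # P(B|A)

p_over30_given_boy = bayes(p_boy_given_over30, p_over30, p_boy)
print(p_over30_given_boy)  # 34/83
```

The result agrees with counting the boys directly, which is a useful cross-check that the prior $P(A)$ and the evidence $P(B)$ were plugged in the right way round.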

Independence

In ordinary language, independence usually means that two experiences are completely separate. If one event happens, it has no effect on the other. Given that events $A$ and $B$ are independent, the following should hold true:

$$P(A \cap B) = P(A) P(B)$$

Equivalently, $P(A|B) = P(A)$ whenever $P(B) > 0$.
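Independence can be checked by brute force on a small sample space. This is only a sketch, assuming two fair dice; the three events are invented for the example.

```python
from fractions import Fraction
from itertools import product

# Assumed sample space: all 36 ordered outcomes of two fair dice.
S = list(product(range(1, 7), repeat=2))

def prob(event):
    """Probability that the predicate `event` holds for a random outcome."""
    return Fraction(sum(1 for outcome in S if event(outcome)), len(S))

def first_even(o):
    return o[0] % 2 == 0

def second_high(o):
    return o[1] > 4

def sum_is_8(o):
    return o[0] + o[1] == 8

# Events about different dice: the product rule holds.
p_joint = prob(lambda o: first_even(o) and second_high(o))
print(p_joint == prob(first_even) * prob(second_high))  # True

# Events sharing the first die: the product rule fails.
p_joint_dep = prob(lambda o: first_even(o) and sum_is_8(o))
print(p_joint_dep == prob(first_even) * prob(sum_is_8))  # False
```

The second pair shows that events defined on the same experiment are not automatically independent; the product rule has to be verified.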

Random Variables

A discrete random variable is a variable whose value is uncertain. In practice this random variable is just a mapping of different outcomes, and their respective probabilities of occurring, to a single variable. We usually denote random variables with capital letters, just like $X$. For example, let a discrete random variable $X$ represent the sum of two independent die rolls. The set of possible outcomes would be:

$$X \in \{2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12\}$$

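Now, each outcome would have some probability associated with it. The list below shows the pair-wise mapping. We'll leave it as an exercise for you to determine how to get these probabilities.

- $P(X = 2) = P(X = 12) = \frac{1}{36}$

- $P(X = 3) = P(X = 11) = \frac{2}{36}$

- $P(X = 4) = P(X = 10) = \frac{3}{36}$

- $P(X = 5) = P(X = 9) = \frac{4}{36}$

- $P(X = 6) = P(X = 8) = \frac{5}{36}$

- $P(X = 7) = \frac{6}{36}$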

The mapping between the outcomes and their corresponding probabilities is called a probability distribution. It describes the chances of the random variable being one of the outcomes. It is common to visualize discrete random variables with bar graphs. Figure 3 shows the probability distribution of our two die rolls example. It is more convenient to write $p_i = P(X = x_i)$.
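If you want to check your answers for the two die rolls example, the distribution can be built by enumerating all 36 equally likely rolls. This is a minimal sketch; the variable names are our own.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Count how many of the 36 ordered rolls give each possible sum.
counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))

# Probability distribution of X = sum of two fair dice.
dist = {total: Fraction(n, 36) for total, n in sorted(counts.items())}

for total, p in dist.items():
    print(total, p)
```

Note that `Fraction` reduces automatically, so for example the sum of 7 prints as `1/6` rather than `6/36`.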

Expectations and Variances

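Whenever we come across a random variable, we are always interested in the characteristics of its distribution, particularly the expectation and variance. The expectation of a random variable $X$ with outcomes $x_1, \ldots, x_n$ and probabilities $p_i = P(X = x_i)$ is:

$$E(X) = \sum_{i=1}^{n} x_i p_i$$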

The expectation is simply a linear combination of each outcome value and the probability of that value occurring. If you think about it carefully, we are trying to find the "expected" tendency of the value of the random variable. We also call the expectation the mean of the random variable, and we denote the mean using the $\mu$ symbol. If the outcomes are equiprobable, then we arrive at the well known "average" equation. For example, if the random variable $X$ has $n$ outcomes and they are uniformly distributed (i.e., $p_i = \frac{1}{n}$) then the expectation equation translates to:

$$E(X) = \frac{1}{n} \sum_{i=1}^{n} x_i$$

Variance is a measure of how much the random variable can deviate from the mean $\mu$. We write the variance as:

$$\textrm{Var}(X) = E\left((X - \mu)^2\right) = \sum_{i=1}^{n} (x_i - \mu)^2 p_i$$

We usually write variance with $\sigma^2$. Take note that the units of variance are the square of the units of $X$. We sometimes use the term standard deviation instead so that we get the original units. This is:

$$\sigma = \sqrt{\textrm{Var}(X)}$$
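The mean, variance, and standard deviation translate almost line by line into code. Here is a sketch, assuming a distribution stored as a dictionary from outcome to probability; the fair die is just an example input.

```python
import math
from fractions import Fraction

def mean(dist):
    """Expectation: sum of outcome times probability."""
    return sum(x * p for x, p in dist.items())

def variance(dist):
    """Variance: expected squared deviation from the mean."""
    mu = mean(dist)
    return sum((x - mu) ** 2 * p for x, p in dist.items())

# Example input: one fair die, all six outcomes equiprobable.
die = {x: Fraction(1, 6) for x in range(1, 7)}

mu = mean(die)          # 7/2
var = variance(die)     # 35/12
sigma = math.sqrt(var)  # standard deviation as a float

print(mu, var, sigma)
```

Because the die is uniform, `mu` is also just the plain average of the outcomes, which matches the "average" equation.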

Different Distributions of Discrete Random Variables

Sometimes it's useful to recognize some well-established distributions for random variables. Here are a few examples.

Uniform Distribution

Given some random variable $X$ with $n$ unique outcomes, the probability distribution is:

$$P(X = x_i) = \frac{1}{n} \quad \text{for } 1 \leq i \leq n$$

We can compute the mean and variance using the appropriate equations mentioned earlier.

Binomial Distribution

A binomial random variable counts the successes in a sequence of independent trials, where each trial is an experiment with exactly two possible outcomes. For example, if we flip a fair coin ($p = 0.5$), what is the probability of getting a head? The binomial distribution describes the problem: What is the probability of exactly $k$ heads in a sequence of $n$ independent trials? In equation form we write this as:

$$P(X = k) = {n \choose k} p^k (1 - p)^{n-k}$$

Take note that the function is dependent on the number of trials $n$ and the probability $p$ that we get a success. The individual trials of a binomial distribution are also known as Bernoulli trials. Conveniently, the mean is $np$ and the variance is $np(1-p)$.
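The binomial formula is short enough to code directly. The sketch below assumes Python 3.8 or later for `math.comb`; the choice of 10 fair coin flips is only an example.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Example: 10 flips of a fair coin.
n, p = 10, 0.5
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]

# Mean and variance computed from the distribution itself.
mean = sum(k * q for k, q in enumerate(pmf))
var = sum((k - mean) ** 2 * q for k, q in enumerate(pmf))

print(mean, n * p)            # both should be 5.0
print(var, n * p * (1 - p))   # both should be 2.5
```

Computing the mean and variance from the distribution and comparing them with $np$ and $np(1-p)$ is a handy way to catch mistakes in the exponent $n-k$.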

Poisson Distribution

The Poisson distribution is an extension of the binomial distribution but for rare events. The assumptions for the Poisson distribution include $n \to \infty$ and $p \to 0$, with the product $\lambda = np$ held fixed. The number of trials needs to be large and the event that we are looking into needs to be rare. Without going through the nitty gritty derivation, the Poisson distribution is given by:

$$P(X = k) = \frac{\lambda^k e^{-\lambda}}{k!}$$

The mean and variance are $E(X) = \textrm{Var}(X) = \lambda$. In problems that need to use the Poisson distribution, the mean should be given.
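To get a feel for the "large number of trials, rare event" assumption, we can compare the two distributions numerically. This is a sketch with assumed values (1000 trials, success probability 0.002); any similar pair would do.

```python
from math import comb, exp, factorial

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """Poisson probability of exactly k events with mean lam."""
    return lam**k * exp(-lam) / factorial(k)

# Assumed example: many trials, rare event, so lambda = n * p = 2.
n, p = 1000, 0.002
lam = n * p

for k in range(6):
    print(k, round(binomial_pmf(k, n, p), 5), round(poisson_pmf(k, lam), 5))

# Largest pointwise gap between the two distributions for k = 0..5.
max_gap = max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam)) for k in range(6))
```

The two columns agree to about three decimal places, and the gap shrinks further as the number of trials grows with the mean held fixed.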

Joint Distribution

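Suppose we have a random variable $X$ with range $R_X = \{x_1, x_2, \ldots, x_n\}$ and with some probability mapping $\{p_1, p_2, \ldots, p_n\}$. Say we also have another random variable $Y$ with range $R_Y = \{y_1, y_2, \ldots, y_m\}$ and with some probability mapping $\{q_1, q_2, \ldots, q_m\}$. We define a joint probability distribution of $X$ and $Y$ by:

$$p_{ij} = P\left((X = x_i) \cap (Y = y_j)\right)$$

For $1 \leq i \leq n$ and $1 \leq j \leq m$.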

Algebra of Random Variables

There are some useful algebra operations for random variables. Below is a short list and should be self explanatory:

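- $X + Y$ - Adding two random variables.

- $X + \alpha$ - Adding a constant to a random variable.

- $\alpha X$ - Multiplying some scalar to a random variable.

- $XY$ - Multiplying two random variables together.

Some interesting properties for mean and variance include:

- $E(X+Y) = E(X) + E(Y)$

- $E(\alpha X) = \alpha E(X)$

- $\textrm{Var}(\alpha X) = \alpha^2 \textrm{Var}(X)$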

Exercises

Exercise 1

A fair die is thrown. Find the probability of (a) throwing a 6, (b) throwing an even number, (c) not throwing a 5, and (d) throwing a number less than 3 or more than 5.

Solution

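Simply list all the possible outcomes of the values that a fair die can throw: $S = \{1, 2, 3, 4, 5, 6\}$.

(a) Suppose the event $A = \{6\}$. There is only 1 case out of the 6. Therefore the answer is $P(A) = \frac{1}{6}$.

(b) Suppose event $B = \{2, 4, 6\}$. Therefore the answer is $P(B) = \frac{3}{6} = \frac{1}{2}$.

(c) Suppose event $C = \{1, 2, 3, 4, 6\}$. Therefore the answer is $P(C) = \frac{5}{6}$.

(d) Suppose event $D = \{1, 2, 6\}$. Therefore the answer is $P(D) = \frac{3}{6} = \frac{1}{2}$.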

Exercise 2

Suppose that, in a certain population, 40% of the people have red hair, 25% have tuberculosis, and 15% have both. What percentage has neither?

Solution

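Let $P(R) = 0.4$, $P(T) = 0.25$, and $P(R \cap T) = 0.15$. We are looking for $P(\overline{R \cup T})$.

Since we know that $P(R \cup T) = P(R) + P(T) - P(R \cap T)$ then we can easily get $P(R \cup T) = 0.5$. We also know that $P(\overline{R \cup T}) = 1 - P(R \cup T)$. Therefore the answer is $P(\overline{R \cup T}) = 0.5$. Elementary right?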

Exercise 3

An urn contains 5 red, 12 green, and 8 yellow balls. Three balls are drawn without replacement. (a) What is the probability that a red, a green, and a yellow ball will be drawn (in any order)? (b) What is the probability that the last ball to be drawn will be green?

Solution

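(a) This problem is worthy of an extensive discussion. First, let $A$ be the event that a red, a green, and a yellow ball are drawn in any order. We would have $A = \{RGY, RYG, GRY, GYR, YRG, YGR\}$, where $R$ is for red, $G$ is for green, and $Y$ is for yellow. Since we don't really care about the order, $RGY$, $RYG$, and all the rest are equivalent. Observe that:

$$P(RGY) = \frac{5}{25} \cdot \frac{12}{24} \cdot \frac{8}{23} = \frac{4}{115}$$

$$P(RYG) = \frac{5}{25} \cdot \frac{8}{24} \cdot \frac{12}{23} = \frac{4}{115}$$

All other combinations will still have the same probability. Therefore we only need to get one probability, say $P(RGY)$, and multiply it by the number of different orderings, which is $3! = 6$.

Hence the final answer would be $P(A) = 6 \cdot \frac{4}{115} = \frac{24}{115}$.

Sounds simple right? There's more to this. The $P(RGY)$ and all other combinations are actually the translation of $P(R \cap G \cap Y)$. Now let's dissect the equation carefully. The first draw is $P(R) = \frac{5}{25}$. The second draw is $P(G|R) = \frac{12}{24}$. The third draw is $P(Y|R \cap G) = \frac{8}{23}$. Explicitly we have $P(R \cap G \cap Y) = P(R) P(G|R) P(Y|R \cap G)$.

Doesn't this sound familiar? You're right! It follows the formula of $P(A \cap B \cap C) = P(A) P(B|A) P(C|A \cap B)$. Take note that .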

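(b) This time we have a different set of outcomes. Let's set this as event $F$, while keeping the notion of conditional probability in mind. The final answer would be:

$$P(F) = \frac{13}{25} \cdot \frac{12}{24} \cdot \frac{12}{23} + \frac{13}{25} \cdot \frac{12}{24} \cdot \frac{11}{23} + \frac{12}{25} \cdot \frac{13}{24} \cdot \frac{11}{23} + \frac{12}{25} \cdot \frac{11}{24} \cdot \frac{10}{23} = \frac{12}{25}$$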
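Both parts of this exercise can be double-checked by brute force, since there are only $25 \cdot 24 \cdot 23 = 13800$ ordered draws. Here is a sketch; the helper `prob` and the way the urn is labeled are our own choices.

```python
from fractions import Fraction
from itertools import permutations

# The urn: 5 red, 12 green, and 8 yellow balls.
urn = ["R"] * 5 + ["G"] * 12 + ["Y"] * 8

# Every ordered draw of three distinct balls (drawing without replacement).
draws = list(permutations(range(len(urn)), 3))

def prob(predicate):
    """Fraction of ordered draws whose color sequence satisfies predicate."""
    hits = sum(1 for d in draws if predicate([urn[i] for i in d]))
    return Fraction(hits, len(draws))

# (a) one red, one green, and one yellow ball in any order
p_a = prob(lambda colors: sorted(colors) == ["G", "R", "Y"])

# (b) the last ball drawn is green
p_b = prob(lambda colors: colors[-1] == "G")

print(p_a, p_b)  # 24/115 12/25
```

Notice that the answer to (b) equals $\frac{12}{25}$, the same as the probability that the first ball is green. By symmetry, every position in the draw is equally likely to hold any particular ball.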

Exercise 4

Consider the table below which describes the babies born in one week at a certain hospital. If a baby is chosen at random and turns out to be a boy, find the probability that it was born to a mother over the age of 30 years.

| Number of babies born which are: | Born to mothers over 30 years old | Born to mothers less than 30 years old |
|---|---|---|
| Boys | 34 | 49 |
| Girls | 41 | 44 |

Solution

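This is a simple counting exercise. Let $A$ be the event that a baby is born to a mother who is more than 30 years old. Let $B$ be the event that a baby is a boy. We have $|A \cap B| = 34$ and $|B| = 34 + 49 = 83$. Note that the operation $|\cdot|$ is the cardinality or magnitude, which counts the number of items or elements. Therefore the final answer is $P(A|B) = \frac{|A \cap B|}{|B|} = \frac{34}{83}$.

Another interpretation would be to get the probabilities $P(A \cap B)$ and $P(B)$ with respect to the entire space. Adding all numbers gives us $|S| = 168$. Therefore $P(A \cap B) = \frac{34}{168}$ and $P(B) = \frac{83}{168}$. Trivially, $P(A|B) = \frac{P(A \cap B)}{P(B)} = \frac{34}{83}$.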

Exercise 5

Three fair coins are tossed in succession. Find the probability distribution of the random variable indicating the total number of heads. Show a bar graph of this distribution. Find the mean, variance, and standard deviation.

Solution

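Easy! The list of possible outcomes would be $X \in \{0, 1, 2, 3\}$ and their probability mapping would be:

- $P(X = 0) = \frac{1}{8}$

- $P(X = 1) = \frac{3}{8}$

- $P(X = 2) = \frac{3}{8}$

- $P(X = 3) = \frac{1}{8}$

Figure 4 shows the bar graph for this problem. The mean, variance, and standard deviation are:

$$E(X) = 0 \cdot \frac{1}{8} + 1 \cdot \frac{3}{8} + 2 \cdot \frac{3}{8} + 3 \cdot \frac{1}{8} = \frac{3}{2}$$

$$\textrm{Var}(X) = \sum_{k=0}^{3} \left(k - \frac{3}{2}\right)^2 P(X = k) = \frac{3}{4}$$

$$\sigma = \sqrt{\frac{3}{4}} \approx 0.866$$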
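The whole exercise can be reproduced by listing the 8 equally likely toss sequences. This is a sketch with our own variable names; it does not draw the bar graph.

```python
import math
from collections import Counter
from fractions import Fraction
from itertools import product

# All 2^3 equally likely sequences of three fair coin tosses.
tosses = list(product("HT", repeat=3))

# X = number of heads in each sequence.
counts = Counter(t.count("H") for t in tosses)
dist = {k: Fraction(c, len(tosses)) for k, c in sorted(counts.items())}

mean = sum(k * p for k, p in dist.items())
var = sum((k - mean) ** 2 * p for k, p in dist.items())
sigma = math.sqrt(var)

print(dist)
print(mean, var, round(sigma, 3))  # 3/2 3/4 0.866
```

Since the number of heads is binomial with 3 trials and success probability one half, the results also match the binomial shortcuts for the mean and variance.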

Exercise 6

Suppose an urn contains 7 red and 10 green balls, and 20 balls are drawn with replacement and mixing after each draw. What is the probability that (a) exactly 4, or (b) at least 4, of the balls are red?

Solution

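First, the probability of successfully drawing a red is $p = \frac{7}{17}$. The probability of drawing NOT a red is $1 - p = \frac{10}{17}$. We can use the binomial probability distribution equation here. Let $n = 20$. Recall that:

$$P(X = k) = {n \choose k} p^k (1 - p)^{n-k}$$

(a) Here, $k = 4$ and therefore the answer would be:

$$P(X = 4) = {20 \choose 4} \left( \frac{7}{17} \right)^4 \left( \frac{10}{17} \right)^{16} \approx 0.0286$$

(b) Here, we need to add all probabilities from 4 to 20. Mathematically that would be:

$$P(X \geq 4) = \sum_{k=4}^{20} {20 \choose k} \left( \frac{7}{17} \right)^k \left( \frac{10}{17} \right)^{20-k}$$

However this is very tedious to do. Let's instead get $P(X \leq 3)$ (or $P(X < 4)$) and compute $P(X \geq 4) = 1 - P(X \leq 3)$. We would have:

$$P(X \geq 4) = 1 - P(X \leq 3) = 1 - \sum_{k=0}^{3} {20 \choose k} \left( \frac{7}{17} \right)^k \left( \frac{10}{17} \right)^{20-k}$$

Expanding and plugging in values gives us:

$$P(X \geq 4) = 1 - \left[ {20 \choose 0} \left( \frac{7}{17} \right)^0 \left( \frac{10}{17} \right)^{20} + {20 \choose 1} \left( \frac{7}{17} \right)^1 \left( \frac{10}{17} \right)^{19} + {20 \choose 2} \left( \frac{7}{17} \right)^2 \left( \frac{10}{17} \right)^{18} + {20 \choose 3} \left( \frac{7}{17} \right)^3 \left( \frac{10}{17} \right)^{17} \right] \approx 0.9877$$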
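Plugging in the values by hand is error-prone, so here is a short sketch that evaluates both parts. It assumes Python 3.8 or later for `math.comb`; the function name `binomial_pmf` is our own.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n = 20        # number of draws with replacement
p = 7 / 17    # probability of drawing a red ball

# (a) exactly 4 red balls
p_exactly_4 = binomial_pmf(4, n, p)

# (b) at least 4 red balls, using the complement of "at most 3"
p_at_least_4 = 1 - sum(binomial_pmf(k, n, p) for k in range(4))

# Cross-check (b) with the tedious direct sum from 4 to 20.
p_direct = sum(binomial_pmf(k, n, p) for k in range(4, n + 1))

print(round(p_exactly_4, 4), round(p_at_least_4, 4))  # 0.0286 0.9877
```

The direct sum and the complement agree up to floating-point rounding, which confirms that taking the complement is only a shortcut and not an approximation.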

References

- Applebaum, D., Probability and Information: An Integrated Approach, Cambridge University Press, 2008.

- Hankerson, D.R., Harris, G.A., Johnson, P.D., Introduction to Information Theory and Data Compression, CRC Press, 2003.
