Difference between revisions of "Shannon's Communication Theory"

| (118 intermediate revisions by the same user not shown) | |||

| Line 2: | Line 2: | ||

[[File:Shannon comm system.png|thumb|500px|Figure 1: A general communication system<ref name="shannon1948"/>.]] | [[File:Shannon comm system.png|thumb|500px|Figure 1: A general communication system<ref name="shannon1948"/>.]] | ||

| − | In his landmark 1948 paper<ref name="shannon1948">C. E. Shannon, A Mathematical Theory of Communication, The Bell System Technical Journal, Vol. 27, pp. 379–423, 623–656, July, October, 1948. ([http://people.math.harvard.edu/~ctm/home/text/others/shannon/entropy/entropy.pdf pdf])</ref>, Claude Shannon developed a general model for communication systems, as well as a framework for analyzing these systems. The model has three components: (1) the sender or source, (2) the channel, and (3) the receiver or sink. The model also includes | + | In his landmark 1948 paper<ref name="shannon1948">C. E. Shannon, A Mathematical Theory of Communication, The Bell System Technical Journal, Vol. 27, pp. 379–423, 623–656, July, October, 1948. ([http://people.math.harvard.edu/~ctm/home/text/others/shannon/entropy/entropy.pdf pdf])</ref>, Claude Shannon developed a general model for communication systems, as well as a framework for analyzing these systems. The model has three components: (1) the sender or source, (2) the channel, and (3) the receiver or sink. The model also includes the transmitter that encodes the message into a signal, the receiver, for decoding the signal back into a message, as well the noise of the channel, as shown in Fig. 1. |

| + | In Shannon's discrete model, the source provides a stream of symbols from a finite alphabet, <math>A=\{a_1, a_2, \ldots, a_n\}</math>, which are then encoded. The code is sent through the channel, which could be corrupted by noise, and when the code reaches the other end, it is decoded by the receiver, and then the sink extracts information from the steam of symbols. | ||

| + | Note that sending information in space, e.g. from ''here'' to ''there'' is equivalent sending information in time, e.g. from ''now'' to ''then''. Thus, Shannon's theory applies to both information transmission and information storage. | ||

| + | === Shannon's Noiseless Coding Theorem === | ||

| + | One very important question to ask is: "How efficiently can we encode the information that we want to send through the channel?" To answer this, let us assume: (1) the channel is noiseless, and (2) the receiver can accurately decode the symbols transmitted through the channel. First, let us define a few things. | ||

| − | + | A '''code''' is defined as a mapping from a source alphabet to a code alphabet. The process of transforming a source message into a coded message is '''coding''' or '''encoding'''. The encoded message may be referred to as an encoding of the source message. The algorithm which constructs the mapping and uses it to transform the source message is called the '''encoder'''. The '''decoder''' performs the inverse operation, restoring the coded message to its original form. | |

| − | == | + | A code is '''distinct''' if each codeword is distinguishable from every other, i.e. the mapping from source messages to codewords is one-to-one. A distinct code is '''uniquely decodable''' (UD) if every codeword is identifiable when immersed in a sequence of codewords. A uniquely decodable code is a '''prefix code''' (or ''prefix-free code'') if it has the prefix property, which requires that no codeword is a proper prefix of any other codeword. Prefix codes are '''instantaneously decodable''', i.e. they have the desirable property that the coded message can be parsed into codewords without waiting for the end of the message. |

| + | |||

| + | ==== The Kraft-McMillan Inequality ==== | ||

| + | [[File:Encoder.png|thumb|500px|Figure 1: An encoder.]] | ||

| + | Let <math>C=\{c_1, c_2, \ldots, c_r\}</math> be the alphabet of the channel, or in other words, the set of symbols that can be sent through the channel. Thus, encoding the source alphabet <math>A</math> can be expressed as a function, <math>f:A\rightarrow C^*</math>, where <math>C^*</math> is the set of all possible finite strings of symbols (or codewords) from <math>C</math>, as seen in Fig. 1. | ||

| + | |||

| + | Let <math>\ell_i=\left|f\left(a_i\right)\right|</math> where <math>i=1,2,\ldots,n</math>, i.e. the length of the string of channel symbols encoding the symbol <math>a_i\in A</math>. | ||

| + | |||

| + | A code with lengths <math>\ell_1, \ell_2, \ldots, \ell_n</math> is uniquely decodable if and only if: | ||

| + | |||

| + | {{NumBlk|::|<math>K=\sum_{i=1}^n \frac{1}{r^{\ell_i}}\leq 1</math>|{{EquationRef|1}}}} | ||

| + | |||

| + | The proof of the Kraft-McMillan Inequality is interesting since it starts with evaluating <math>K^m</math>: | ||

| + | |||

| + | {{NumBlk|::|<math>K^m=\left(\sum_{i=1}^n \frac{1}{r^{\ell_i}}\right)^m = \sum_{i_1=1}^n \sum_{i_1=1}^n \cdots \sum_{i_m=1}^n \frac{1}{r^{\ell_{i_1} + \ell_{i_2} + \ldots + \ell_{i_m}}}</math>|{{EquationRef|2}}}} | ||

| + | |||

| + | Let <math>\ell = \max\left(\ell_1, \ell_2, \ldots, \ell_n\right)</math>. Thus, the minimum value of <math>\ell_{i_1} + \ell_{i_2} + \ldots + \ell_{i_m}</math> is <math>m</math>, when all the codewords are 1 bit long, and the maximum is <math>m\ell</math>, when all the codewords have the maximum length. We can then write: | ||

| + | |||

| + | {{NumBlk|::|<math>K^m=\sum_{k=m}^{m\ell} \frac{N_k}{r^k}</math>|{{EquationRef|3}}}} | ||

| + | |||

| + | Where <math>N_k</math> is the number of combinations of <math>m</math> codewords that have a combined length of <math>k</math>. Note that the number of distinct codewords of length <math>k</math> is <math>r^k</math>. If this code is uniquely decodable, then each sequence can represent one and only one sequence of codewords. Therefore, the number of possible combinations of codewords whose combined length is <math>k</math> cannot be greater than <math>r^k</math>, or: | ||

| + | |||

| + | {{NumBlk|::|<math>N_k \leq r^k</math>|{{EquationRef|4}}}} | ||

| + | |||

| + | We can then write: | ||

| + | |||

| + | {{NumBlk|::|<math>K^m\leq\sum_{k=m}^{m\ell} \frac{r^k}{r^k} = m\ell - m + 1</math>|{{EquationRef|5}}}} | ||

| + | |||

| + | Thus, we can conclude that <math>K\leq 1</math> since if this were not true, <math>K^m</math> would exceed <math>m\ell-m + 1</math> for large <math>m</math>. | ||

| + | |||

| + | ==== Shannon's Noiseless Coding Theorem ==== | ||

| + | Let <math>Q_i</math> be equal to: | ||

| + | |||

| + | {{NumBlk|::|<math>Q_i=\frac{r^{-\ell_i}}{K}</math>|{{EquationRef|6}}}} | ||

| + | |||

| + | We call the set of numbers <math>Q_i</math> ''pseudo-probabilities'' since <math>0<Q_i\leq1</math> for all <math>i</math>, and | ||

| + | |||

| + | {{NumBlk|::|<math>\sum_{i=1}^n Q_i=1</math>|{{EquationRef|7}}}} | ||

| + | |||

| + | If <math>p_i</math> is the probability of observing <math>a_i</math> in the data stream, then we can apply the Gibbs Inequality to get: | ||

| + | |||

| + | {{NumBlk|::|<math>\sum_{i=1}^n p_i\log_2\left(\frac{Q_i}{p_i}\right)\leq 0</math>|{{EquationRef|8}}}} | ||

| + | |||

| + | Rewriting, we get: | ||

| + | |||

| + | {{NumBlk|::|<math>\sum_{i=1}^n p_i\log_2\left(\frac{1}{p_i}\right)\leq \sum_{i=1}^n p_i\log_2\left(\frac{1}{Q_i}\right)=\sum_{i=1}^n p_i\log_2\left(\frac{K}{r^{-\ell_i}}\right)</math>|{{EquationRef|9}}}} | ||

| + | |||

| + | Note that the left hand term is the entropy of the source, <math>H\left(S\right)</math> and for <math>K\leq 1</math>, we get: | ||

| + | |||

| + | {{NumBlk|::|<math>H\left(S\right) \leq \sum_{i=1}^n p_i\left(\log_2\left(K\right)-\log_2\left(r^{-\ell_i}\right)\right)=\log_2\left(K\right)+ \sum_{i=1}^n p_i\ell_i\log_2\left(r\right) \leq \log_2\left(r\right) \sum_{i=1}^n p_i\ell_i</math>|{{EquationRef|10}}}} | ||

| + | |||

| + | If we define the average length of codewords, <math>L = \sum_{i=1}^n p_i\ell_i</math>, and rewriting: | ||

| + | |||

| + | {{NumBlk|::|<math>H\left(S\right) \leq L\log_2\left(r\right)</math>|{{EquationRef|11}}}} | ||

| + | |||

| + | In other words, the entropy of the source gives us a lower bound on the average code length for any uniquely decodable symbol-by-symbol encoding of the source message. For binary encoding, where <math>r=2</math>, we arrive at: | ||

| + | |||

| + | {{NumBlk|::|<math>H\left(S\right) \leq L</math>|{{EquationRef|12}}}} | ||

| + | |||

| + | Shannon went beyond this and showed that this bound holds even if we group symbols together into "words" before doing our encoding. The generalized form of this inequality is called ''Shannon's Noiseless Coding Theorem''. | ||

| + | |||

| + | {{Note|[[161-A2.1 | '''Activity A2.1''' Source Coding]] -- This activity introduces the concept of source coding in general, and Huffman codes in particular.|reminder}} | ||

== Sources == | == Sources == | ||

| − | * [http://astarte.csustan.edu/~tom/SFI-CSSS/info-theory/info-lec.pdf | + | * Tom Carter's [http://astarte.csustan.edu/~tom/SFI-CSSS/info-theory/info-lec.pdf notes] on Information Theory |

| + | * Dan Hirschberg's [https://www.ics.uci.edu/~dan/pubs/DC-Sec1.html notes] on Data Compression | ||

== References == | == References == | ||

<references /> | <references /> | ||

Latest revision as of 16:52, 19 September 2020

Contents

A First Look at Shannon's Communication Theory

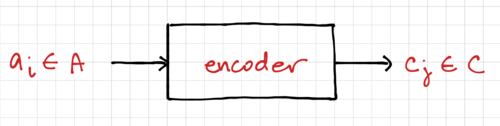

In his landmark 1948 paper[1], Claude Shannon developed a general model for communication systems, as well as a framework for analyzing these systems. The model has three components: (1) the sender or source, (2) the channel, and (3) the receiver or sink. The model also includes the transmitter that encodes the message into a signal, the receiver, for decoding the signal back into a message, as well the noise of the channel, as shown in Fig. 1.

In Shannon's discrete model, the source provides a stream of symbols from a finite alphabet, , which are then encoded. The code is sent through the channel, which could be corrupted by noise, and when the code reaches the other end, it is decoded by the receiver, and then the sink extracts information from the steam of symbols.

Note that sending information in space, e.g. from here to there is equivalent sending information in time, e.g. from now to then. Thus, Shannon's theory applies to both information transmission and information storage.

Shannon's Noiseless Coding Theorem

One very important question to ask is: "How efficiently can we encode the information that we want to send through the channel?" To answer this, let us assume: (1) the channel is noiseless, and (2) the receiver can accurately decode the symbols transmitted through the channel. First, let us define a few things.

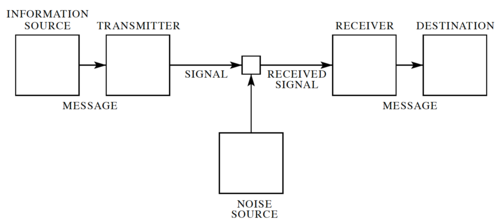

A code is defined as a mapping from a source alphabet to a code alphabet. The process of transforming a source message into a coded message is coding or encoding. The encoded message may be referred to as an encoding of the source message. The algorithm which constructs the mapping and uses it to transform the source message is called the encoder. The decoder performs the inverse operation, restoring the coded message to its original form.

A code is distinct if each codeword is distinguishable from every other, i.e. the mapping from source messages to codewords is one-to-one. A distinct code is uniquely decodable (UD) if every codeword is identifiable when immersed in a sequence of codewords. A uniquely decodable code is a prefix code (or prefix-free code) if it has the prefix property, which requires that no codeword is a proper prefix of any other codeword. Prefix codes are instantaneously decodable, i.e. they have the desirable property that the coded message can be parsed into codewords without waiting for the end of the message.

The Kraft-McMillan Inequality

Let be the alphabet of the channel, or in other words, the set of symbols that can be sent through the channel. Thus, encoding the source alphabet can be expressed as a function, , where is the set of all possible finite strings of symbols (or codewords) from , as seen in Fig. 1.

Let where , i.e. the length of the string of channel symbols encoding the symbol .

A code with lengths is uniquely decodable if and only if:

-

(1)

-

The proof of the Kraft-McMillan Inequality is interesting since it starts with evaluating :

-

(2)

-

Let . Thus, the minimum value of is , when all the codewords are 1 bit long, and the maximum is , when all the codewords have the maximum length. We can then write:

-

(3)

-

Where is the number of combinations of codewords that have a combined length of . Note that the number of distinct codewords of length is . If this code is uniquely decodable, then each sequence can represent one and only one sequence of codewords. Therefore, the number of possible combinations of codewords whose combined length is cannot be greater than , or:

-

(4)

-

We can then write:

-

(5)

-

Thus, we can conclude that since if this were not true, would exceed for large .

Shannon's Noiseless Coding Theorem

Let be equal to:

-

(6)

-

We call the set of numbers pseudo-probabilities since for all , and

-

(7)

-

If is the probability of observing in the data stream, then we can apply the Gibbs Inequality to get:

-

(8)

-

Rewriting, we get:

-

(9)

-

Note that the left hand term is the entropy of the source, and for , we get:

-

(10)

-

If we define the average length of codewords, , and rewriting:

-

(11)

-

In other words, the entropy of the source gives us a lower bound on the average code length for any uniquely decodable symbol-by-symbol encoding of the source message. For binary encoding, where , we arrive at:

-

(12)

-

Shannon went beyond this and showed that this bound holds even if we group symbols together into "words" before doing our encoding. The generalized form of this inequality is called Shannon's Noiseless Coding Theorem.