Joint Entropies

In this module, we'll discuss several extensions of entropy. Let's begin with joint entropy. Suppose we have a random variable $X$ with elements $\{x_1, x_2, \ldots, x_n\}$ and a random variable $Y$ with elements $\{y_1, y_2, \ldots, y_m\}$. We define the joint entropy of $X$ and $Y$ as:

$$H(X,Y) = -\sum_{i=1}^{n}\sum_{j=1}^{m} P(x_i, y_j)\log_2 P(x_i, y_j) \tag{1}$$

Take note that $H(X,Y) = H(Y,X)$. This is trivial, since swapping $X$ and $Y$ only reorders the terms of the double sum. From our previous discussion about the decision trees of a game, we used joint entropies to calculate the overall entropy.
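A minimal Python sketch of equation 1 follows. It assumes the joint distribution is stored as a nested list with `joint[i][j]` $= P(x_i, y_j)$; the function name `joint_entropy` and the 2-by-2 table are hypothetical, chosen only for illustration. Zero-probability terms are skipped, following the usual convention that $0 \log_2 0 = 0$.

```python
import math

def joint_entropy(joint):
    """H(X,Y) in bits (equation 1), where joint[i][j] = P(x_i, y_j)."""
    total = 0.0
    for row in joint:
        for p in row:
            if p > 0:  # convention: 0 * log2(0) contributes nothing
                total -= p * math.log2(p)
    return total

# Hypothetical joint distribution: rows are x_1, x_2; columns are y_1, y_2
joint = [[0.5, 0.25],
         [0.125, 0.125]]
print(joint_entropy(joint))  # 1.75

# Transposing the table swaps the roles of X and Y; the entropy is unchanged
transposed = [list(col) for col in zip(*joint)]
print(joint_entropy(transposed) == joint_entropy(joint))  # True
```

The transposition check is a numerical version of $H(X,Y) = H(Y,X)$: the double sum visits the same terms in a different order.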

Conditional Entropies

There are two steps to understanding conditional entropies. The first is the uncertainty of a random variable caused by a single outcome only. Suppose we have the same random variables $X$ and $Y$ defined earlier in joint entropies. Let's denote $P(x_i|y_j)$ as the conditional probability of $x_i$ when event $y_j$ happened. We define the entropy $H(X|y_j)$ as the entropy of the random variable $X$ given that $y_j$ happened. In other words, this is the average information of all the outcomes of $X$ when event $y_j$ happens. Take note that we're only interested in the entropy of $X$ when only the outcome $y_j$ occurred. Mathematically, this is:

$$H(X|y_j) = -\sum_{i=1}^{n} P(x_i|y_j)\log_2 P(x_i|y_j) \tag{2}$$
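Equation 2 can be sketched in Python as well, assuming (as a hypothetical layout) that only the joint table is available; the conditional probabilities are then recovered as $P(x_i|y_j) = P(x_i, y_j)/P(y_j)$. The helpers `entropy` and `entropy_given_outcome` are illustrative names, not part of any library.

```python
import math

def entropy(probs):
    """Entropy in bits of a list of probabilities; zero entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def entropy_given_outcome(joint, j):
    """H(X|y_j) from equation 2, where joint[i][j] = P(x_i, y_j)."""
    p_yj = sum(row[j] for row in joint)        # P(y_j), summing out X
    cond = [row[j] / p_yj for row in joint]    # P(x_i|y_j) = P(x_i, y_j) / P(y_j)
    return entropy(cond)

# Hypothetical joint distribution: rows are x_i, columns are y_j
joint = [[0.5, 0.25],
         [0.125, 0.125]]
print(round(entropy_given_outcome(joint, 0), 4))  # 0.7219
print(round(entropy_given_outcome(joint, 1), 4))  # 0.9183
```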

Just to repeat because it may be confusing: equation 2 pertains only to the uncertainty when a single event has happened. We can extend this to the total entropy $H(X|Y)$ when any of the outcomes in $Y$ happens. If we treat $H(X|y_j)$ like a random variable that takes values in the range $\{H(X|y_1), \ldots, H(X|y_m)\}$, and the probability associated with each $y_j$ is $P(y_j)$, then $H(X|y_j)$ is a function of $Y$. We then define $H(X|Y)$, the conditional entropy of $X$ given $Y$, as the average of $H(X|y_j)$ over $Y$:

$$H(X|Y) = \sum_{j=1}^{m} P(y_j)\, H(X|y_j) \tag{3}$$
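To illustrate equation 3, the sketch below averages the single-outcome entropies of equation 2, weighting each by $P(y_j)$. The table and function names are hypothetical; an outcome with $P(y_j) = 0$ is skipped since it carries no weight.

```python
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def conditional_entropy(joint):
    """H(X|Y) via equation 3: the P(y_j)-weighted average of H(X|y_j)."""
    total = 0.0
    for j in range(len(joint[0])):
        p_yj = sum(row[j] for row in joint)
        if p_yj == 0:
            continue  # y_j never happens, so it carries zero weight
        h_given_yj = entropy([row[j] / p_yj for row in joint])  # equation 2
        total += p_yj * h_given_yj
    return total

joint = [[0.5, 0.25],
         [0.125, 0.125]]   # hypothetical P(x_i, y_j)
print(round(conditional_entropy(joint), 4))  # 0.7956
```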

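Substituting equation 2 into equation 3 and using $P(x_i, y_j) = P(x_i|y_j)\,P(y_j)$, we can rewrite this as:

$$H(X|Y) = -\sum_{j=1}^{m}\sum_{i=1}^{n} P(y_j)\,P(x_i|y_j)\log_2 P(x_i|y_j) = -\sum_{i=1}^{n}\sum_{j=1}^{m} P(x_i, y_j)\log_2 P(x_i|y_j) \tag{3'}$$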
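The alternative form can be checked on a small hypothetical table. This sketch (the name `conditional_entropy_alt` is illustrative) never computes $H(X|y_j)$ explicitly, yet it lands on the same value as the weighted average in equation 3, as the substitution above predicts.

```python
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def conditional_entropy_alt(joint):
    """H(X|Y) = -sum_i sum_j P(x_i, y_j) log2 P(x_i|y_j), the alternative form of equation 3."""
    p_y = [sum(col) for col in zip(*joint)]    # marginal P(y_j)
    total = 0.0
    for row in joint:
        for j, p_xy in enumerate(row):
            if p_xy > 0:
                total -= p_xy * math.log2(p_xy / p_y[j])
    return total

joint = [[0.5, 0.25],
         [0.125, 0.125]]   # hypothetical P(x_i, y_j)
print(round(conditional_entropy_alt(joint), 4))  # 0.7956, matching equation 3
```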

Note that it is trivial to prove that $H(X|Y) = H(X)$ if $X$ and $Y$ are independent. We'll leave it up to you to prove this. A few hints: $P(x_i, y_j) = P(x_i)\,P(y_j)$ and $P(x_i|y_j) = P(x_i)$ if $X$ and $Y$ are independent.
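The independence result can be spot-checked numerically. The sketch below builds a hypothetical independent joint table as the product $P(x_i)P(y_j)$ and compares $H(X|Y)$ with $H(X)$; it is an illustration under those assumed numbers, not a proof.

```python
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def conditional_entropy(joint):
    """H(X|Y) using the alternative form of equation 3; joint[i][j] = P(x_i, y_j)."""
    marg_y = [sum(col) for col in zip(*joint)]
    return -sum(p * math.log2(p / marg_y[j])
                for row in joint for j, p in enumerate(row) if p > 0)

# Hypothetical independent pair: build the joint table as P(x_i) * P(y_j)
p_x = [0.5, 0.25, 0.25]
p_y = [0.75, 0.25]
joint = [[px * py for py in p_y] for px in p_x]

print(entropy(p_x))                # 1.5
print(conditional_entropy(joint))  # 1.5: learning Y tells us nothing about X
```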

Binary Tree Example

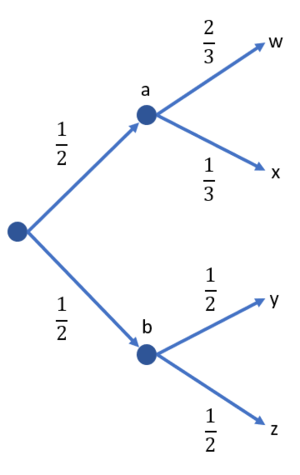

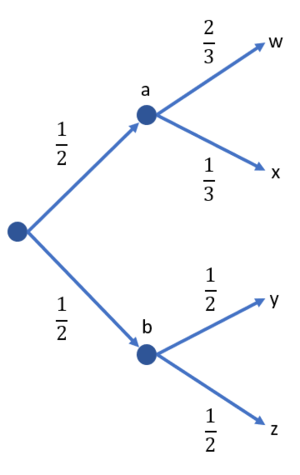

Figure 1: Binary tree example.

Let's apply the first two concepts in a simple binary tree example. Suppose we let $X$ be a random variable with outcomes $\{x_1, x_2\}$ and probabilities $\{P(x_1), P(x_2)\}$. Let $Y$ be the random variable with outcomes $\{y_1, y_2, y_3, y_4\}$, where $y_1$ and $y_2$ can only follow $x_1$, and $y_3$ and $y_4$ can only follow $x_2$. We are also given the probabilities $P(y_1|x_1)$, $P(y_2|x_1)$, $P(y_3|x_2)$, and $P(y_4|x_2)$. Figure 1 shows the binary tree. Calculate:

(a) $H(Y|x_1)$

(b) $H(Y|x_2)$

(c) $H(Y|X)$

(d) $H(X,Y)$

Solution

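(a) Use equation 2 (with the roles of $X$ and $Y$ swapped) to solve: $H(Y|x_1) = -P(y_1|x_1)\log_2 P(y_1|x_1) - P(y_2|x_1)\log_2 P(y_2|x_1)$.

(b) Same as in (a), use equation 2 to solve: $H(Y|x_2) = -P(y_3|x_2)\log_2 P(y_3|x_2) - P(y_4|x_2)\log_2 P(y_4|x_2)$.

(c) This can be solved in two ways. The first is to use equation 3: $H(Y|X) = P(x_1)\,H(Y|x_1) + P(x_2)\,H(Y|x_2)$.

Or, we can solve it using the alternative version of equation 3, $H(Y|X) = -\sum_{i}\sum_{j} P(x_i, y_j)\log_2 P(y_j|x_i)$. But we also need to know the joint probabilities: $P(x_1, y_1) = P(x_1)P(y_1|x_1)$, $P(x_1, y_2) = P(x_1)P(y_2|x_1)$, $P(x_2, y_3) = P(x_2)P(y_3|x_2)$, and $P(x_2, y_4) = P(x_2)P(y_4|x_2)$. Every other pair $(x_i, y_j)$ has probability zero and contributes nothing to the sum.

(d) We already listed the joint probabilities in (c). We simply use equation 1 for this: $H(X,Y) = -\sum_{i}\sum_{j} P(x_i, y_j)\log_2 P(x_i, y_j)$, summed over the four nonzero joint probabilities.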
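Steps (a) to (d) can be run end to end in Python. The sketch below uses hypothetical probabilities ($P(x_1) = P(x_2) = 0.5$, $P(y_1|x_1) = P(y_2|x_1) = 0.5$, $P(y_3|x_2) = 0.25$, $P(y_4|x_2) = 0.75$), not the values of Figure 1, and assumes the tree shape in which $x_1$ branches into $y_1, y_2$ and $x_2$ into $y_3, y_4$.

```python
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# HYPOTHETICAL probabilities chosen for illustration only
p_x = {"x1": 0.5, "x2": 0.5}
p_y_given_x = {
    "x1": {"y1": 0.5, "y2": 0.5},    # branch under x1
    "x2": {"y3": 0.25, "y4": 0.75},  # branch under x2
}

# (a) and (b): equation 2 on each branch
h_y_given = {x: entropy(ch.values()) for x, ch in p_y_given_x.items()}

# (c), first way: equation 3 weights each branch entropy by P(x)
h_y_given_x = sum(p_x[x] * h_y_given[x] for x in p_x)

# Joint probabilities P(x, y) = P(x) P(y|x); pairs not in the tree have probability 0
joint = {(x, y): p_x[x] * p for x, ch in p_y_given_x.items() for y, p in ch.items()}

# (c), second way: alternative form of equation 3, with P(y|x) = P(x, y) / P(x)
h_y_given_x_alt = -sum(p * math.log2(p / p_x[x]) for (x, _), p in joint.items() if p > 0)

# (d): equation 1 over the nonzero joint probabilities
h_xy = entropy(joint.values())

print(h_y_given["x1"], round(h_y_given["x2"], 4))                   # 1.0 0.8113
print(round(h_y_given_x, 4), round(h_y_given_x_alt, 4), round(h_xy, 4))  # 0.9056 0.9056 1.9056
```

Both routes in (c) agree, which is a handy check when working the example by hand.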

Important Note!

This example actually shows us an important observation:

$$H(X,Y) = H(X) + H(Y|X) \tag{4}$$

We can easily verify that this holds for the binary tree example. This has an important interpretation: the combined uncertainty in $X$ and $Y$ (i.e., $H(X,Y)$) is the sum of the uncertainty that is totally due to $X$ (i.e., $H(X)$) and the uncertainty that is still due to $Y$ once $X$ has been accounted for (i.e., $H(Y|X)$).

Since $H(X,Y) = H(Y,X)$, it also follows that:

$$H(X,Y) = H(Y) + H(X|Y) \tag{5}$$
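Equations 4 and 5 can be verified numerically on any joint table. In the sketch below the 2-by-3 table is hypothetical, and `cond_entropy` is an illustrative helper that computes the conditional entropy of the column variable given the row variable using the alternative form of equation 3.

```python
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def cond_entropy(table):
    """H(B|A) for table[a][b] = P(a, b), via the alternative form of equation 3.
    Each row sum is the marginal P(a), so P(b|a) = P(a, b) / sum(row)."""
    return -sum(p * math.log2(p / sum(row)) for row in table for p in row if p > 0)

# Hypothetical joint table: rows are outcomes of X, columns are outcomes of Y
joint_xy = [[0.10, 0.20, 0.10],
            [0.30, 0.05, 0.25]]
joint_yx = [list(col) for col in zip(*joint_xy)]  # same table, rows now indexed by Y

h_xy = entropy(p for row in joint_xy for p in row)
h_x = entropy(sum(row) for row in joint_xy)
h_y = entropy(sum(row) for row in joint_yx)

print(abs(h_xy - (h_x + cond_entropy(joint_xy))) < 1e-12)  # equation 4: True
print(abs(h_xy - (h_y + cond_entropy(joint_yx))) < 1e-12)  # equation 5: True
```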

If $X$ and $Y$ are independent, then:

$$H(X,Y) = H(X) + H(Y) \tag{6}$$
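A small numerical illustration of equation 6, with hypothetical marginals: for an independent pair the joint entropy equals the sum of the individual entropies, while in this example a dependent table with the same marginals falls short of that sum.

```python
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical marginals; an independent pair has P(x_i, y_j) = P(x_i) P(y_j)
p_x = [0.2, 0.8]
p_y = [0.5, 0.3, 0.2]
independent = [px * py for px in p_x for py in p_y]   # flattened joint table
print(abs(entropy(independent) - (entropy(p_x) + entropy(p_y))) < 1e-12)  # True

# A dependent table with the same marginals (rows x_1, x_2; columns y_1, y_2, y_3)
dependent = [0.2, 0.0, 0.0,
             0.3, 0.3, 0.2]
print(entropy(dependent) < entropy(p_x) + entropy(p_y))  # True
```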

An alternative interpretation of $H(X|Y)$ is the information content of $X$ that is NOT contained in $Y$.

Mutual Information

With the final discussion on the properties of joint and conditional entropies, we also define mutual information as the information content of $X$ contained within $Y$, written as:

$$I(X;Y) = H(X) - H(X|Y) \tag{7}$$

Or:

$$I(X;Y) = H(Y) - H(Y|X) \tag{7}$$

If we plug in the definitions of entropy and conditional entropy, mutual information in expanded form is:

$$I(X;Y) = \sum_{i=1}^{n}\sum_{j=1}^{m} P(x_i, y_j)\log_2 \frac{P(x_i, y_j)}{P(x_i)\,P(y_j)} \tag{8}$$

It also follows that:

- $I(X;Y) = I(Y;X)$. This is trivial from equation 7.
- If $X$ and $Y$ are independent, then $I(X;Y) = 0$. This is also trivial from equation 8, since every logarithm becomes $\log_2 1 = 0$.
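The expanded form (equation 8) and both properties listed above can be checked in a few lines. The sketch uses a hypothetical table and an illustrative `mutual_information` helper; the comparison with equation 7 obtains $H(X|Y)$ from equation 5.

```python
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(joint):
    """I(X;Y) from the expanded form, equation 8; joint[i][j] = P(x_i, y_j)."""
    p_x = [sum(row) for row in joint]
    p_y = [sum(col) for col in zip(*joint)]
    return sum(p * math.log2(p / (p_x[i] * p_y[j]))
               for i, row in enumerate(joint)
               for j, p in enumerate(row) if p > 0)

joint = [[0.10, 0.20, 0.10],
         [0.30, 0.05, 0.25]]   # hypothetical P(x_i, y_j)
h_x = entropy(sum(row) for row in joint)
h_y = entropy(sum(col) for col in zip(*joint))
h_xy = entropy(p for row in joint for p in row)

# Equation 7, with H(X|Y) = H(X,Y) - H(Y) from equation 5
print(abs(mutual_information(joint) - (h_x - (h_xy - h_y))) < 1e-12)  # True

# Symmetry: transposing the table swaps X and Y
transposed = [list(col) for col in zip(*joint)]
print(abs(mutual_information(transposed) - mutual_information(joint)) < 1e-12)  # True

# Independence: P(x_i, y_j) = P(x_i) P(y_j) gives zero mutual information
independent = [[0.2 * 0.5, 0.2 * 0.5],
               [0.8 * 0.5, 0.8 * 0.5]]
print(abs(mutual_information(independent)) < 1e-12)  # True
```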

As a short example, we can use the binary tree problem again. Let's compute $I(X;Y)$. We simply need to use equation 7: $I(X;Y) = H(Y) - H(Y|X)$. We already know $H(Y|X)$ from part (c). $H(Y)$ is computed by first knowing each $P(y_j)$. Recall that:

$$P(y_j) = \sum_{i} P(x_i, y_j)$$

But in this tree, each outcome in $Y$ has the same probability as its joint probability, because each $y_j$ has exactly one parent. For example, event $y_1$ only occurs if event $x_1$ occurred first, and event $y_3$ only occurs if event $x_2$ occurred first. So it means that:

$$P(y_1) = P(x_1, y_1),\quad P(y_2) = P(x_1, y_2),\quad P(y_3) = P(x_2, y_3),\quad P(y_4) = P(x_2, y_4)$$

Therefore, $H(Y) = H(X,Y)$, the value found in part (d). Therefore, by equation 4, the information is $I(X;Y) = H(X,Y) - H(Y|X) = H(X)$ bits.
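The binary tree result can be reproduced with hypothetical numbers ($P(x_1) = P(x_2) = 0.5$, $P(y_1|x_1) = P(y_2|x_1) = 0.5$, $P(y_3|x_2) = 0.25$, $P(y_4|x_2) = 0.75$), assuming $x_1$ branches into $y_1, y_2$ and $x_2$ into $y_3, y_4$. Because each $y_j$ has a single parent, the mutual information comes out equal to $H(X)$: once $Y$ is known, $X$ is fully determined.

```python
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# HYPOTHETICAL tree: x1 -> {y1, y2}, x2 -> {y3, y4}; every y has exactly one parent
p_x = {"x1": 0.5, "x2": 0.5}
p_y_given_x = {"x1": {"y1": 0.5, "y2": 0.5},
               "x2": {"y3": 0.25, "y4": 0.75}}

# With a single parent, P(y) = sum over x of P(x, y) collapses to one term P(parent, y)
p_y = {y: p_x[x] * p for x, ch in p_y_given_x.items() for y, p in ch.items()}

h_y = entropy(p_y.values())   # equals H(X,Y) for this tree
h_y_given_x = sum(p_x[x] * entropy(ch.values()) for x, ch in p_y_given_x.items())
mi = h_y - h_y_given_x        # equation 7

print(round(mi, 10), entropy(p_x.values()))  # 1.0 1.0
```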

Chain Rule for Conditional Entropy

What happens when we deal with more than two random variables? To facilitate the discussion, let us recall the chain rule for joint distributions.

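Let $X_1, X_2, \ldots, X_n$ be a sequence of discrete random variables. Then their joint distribution can be factored as follows:

$$P(X_1, X_2, \ldots, X_n) = P(X_1)\,P(X_2|X_1)\,P(X_3|X_1,X_2)\cdots P(X_n|X_1,\ldots,X_{n-1}) = \prod_{i=1}^{n} P(X_i|X_1,\ldots,X_{i-1}),$$

with the convention that the $i = 1$ factor is simply $P(X_1)$.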
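The factorization can be checked numerically. The sketch below assumes a hypothetical pmf over three binary variables and writes each conditional as a ratio of prefix marginals, $P(x_k|x_1,\ldots,x_{k-1}) = P(x_1,\ldots,x_k)/P(x_1,\ldots,x_{k-1})$, so the product telescopes back to the joint probability. The helper names are illustrative.

```python
import itertools

# Hypothetical joint pmf over three binary variables, keyed by (x1, x2, x3)
outcomes = list(itertools.product([0, 1], repeat=3))
weights = [1, 2, 3, 4, 5, 6, 7, 12]          # arbitrary positive weights
pmf = {o: w / sum(weights) for o, w in zip(outcomes, weights)}

def marginal(prefix):
    """P(X_1 = prefix[0], ..., X_k = prefix[k-1]), summing out the remaining variables."""
    k = len(prefix)
    return sum(p for o, p in pmf.items() if o[:k] == prefix)

def chain_product(o):
    """P(x1) * P(x2|x1) * P(x3|x1,x2), each conditional written as a ratio of marginals."""
    result = 1.0
    for k in range(1, len(o) + 1):
        result *= marginal(o[:k]) / marginal(o[:k - 1])
    return result

print(all(abs(chain_product(o) - pmf[o]) < 1e-12 for o in outcomes))  # True
```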

Chain rule for entropy

The chain rule for (joint) entropy is very similar to the above expansion, but we use additions instead of multiplications.

$$H(X_1, X_2, \ldots, X_n) = \sum_{i=1}^{n} H(X_i|X_1,\ldots,X_{i-1}) \tag{9}$$
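Equation 9 can be spot-checked on a hypothetical pmf over four binary variables. Each term $H(X_i|X_1,\ldots,X_{i-1})$ is computed directly from the alternative form of equation 3 (not as a difference of joint entropies, which would assume the result); the helper names are illustrative.

```python
import itertools
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def prefix_pmf(pmf, k):
    """Joint pmf of the first k variables (X_1, ..., X_k)."""
    out = {}
    for o, p in pmf.items():
        out[o[:k]] = out.get(o[:k], 0.0) + p
    return out

def conditional_term(pmf, i):
    """H(X_i | X_1, ..., X_{i-1}) from the alternative form of equation 3."""
    cur, prev = prefix_pmf(pmf, i), prefix_pmf(pmf, i - 1)
    return -sum(p * math.log2(p / prev[o[:-1]]) for o, p in cur.items() if p > 0)

# Hypothetical pmf over n = 4 binary variables with arbitrary positive weights
n = 4
outcomes = list(itertools.product([0, 1], repeat=n))
weights = range(1, len(outcomes) + 1)
pmf = {o: w / sum(weights) for o, w in zip(outcomes, weights)}

lhs = entropy(pmf.values())                                   # H(X_1, ..., X_n)
rhs = sum(conditional_term(pmf, i) for i in range(1, n + 1))  # right side of equation 9
print(abs(lhs - rhs) < 1e-9)  # True
```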

Although we do not supply a complete proof here, this fact should not be too surprising since entropy operates on logarithms of probabilities and logarithms of product terms expand to sums of logarithms.

Proof of chain rule for $n = 3$

Let us see that the statement is true for $n = 3$. Let $X_1, X_2, X_3$ be three discrete random variables. The idea with the proof is that we operate on two random variables at a time, since prior to the chain rule we only know that $H(X,Y) = H(X) + H(Y|X)$. We can write $H(X_1, X_2, X_3) = H((X_1, X_2), X_3)$, where we bundle $X_1$ and $X_2$ into one random variable $(X_1, X_2)$.

The proof now proceeds as follows:

$$H(X_1,X_2,X_3) = H((X_1,X_2),X_3) = H(X_1,X_2) + H(X_3|X_1,X_2) = [H(X_1) + H(X_2|X_1)] + H(X_3|X_1,X_2)$$

One interpretation of the chain rule shown is that to obtain the total information (joint entropy) of $(X_1, X_2, X_3)$ as a whole, we can

- obtain information about $X_1$ first without any prior knowledge: $H(X_1)$, then
- obtain information about $X_2$ with knowledge of $X_1$: $H(X_2|X_1)$, then
- obtain information about $X_3$ with knowledge of $X_1$ and $X_2$: $H(X_3|X_1,X_2)$.

For $n > 3$, we can proceed by induction. Below is a sketch of the proof:

- (Induction hypothesis) For a fixed $n \ge 3$, assume that any collection of $n$ random variables satisfies the chain rule.
- (Induction step) For $n + 1$ random variables, write the joint entropy as $H(X_1, \ldots, X_{n+1}) = H((X_1, \ldots, X_n), X_{n+1})$, where the first $n$ random variables are bundled together into one random variable.
- Use the two-variable chain rule $H(X,Y) = H(X) + H(Y|X)$ and the induction hypothesis to show that $H(X_1, \ldots, X_{n+1}) = \sum_{i=1}^{n+1} H(X_i|X_1, \ldots, X_{i-1})$.

A few things to note:

- The order of "obtaining information" is irrelevant in calculating the joint entropy of multiple random variables. As an exercise, write the chain rule if we proceed by obtaining information in an order other than $X_1, X_2, \ldots, X_n$.
- The chain rule we have now works for any collection of random variables. Can you figure out how to simplify the chain rule for Markov chains? (The answer will be discussed in Module 3.)
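For the first note, the sketch below sums the chain-rule terms for every one of the $3! = 6$ orderings of three hypothetical binary variables; every ordering should return the same joint entropy. The pmf weights and helper names are assumptions made for illustration.

```python
import itertools
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def marginal(pmf, idx):
    """Joint pmf of the variables whose positions are listed in idx (in that order)."""
    out = {}
    for o, p in pmf.items():
        key = tuple(o[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out

def chain_rule_sum(pmf, order):
    """Sum of H(X_order[k] | variables earlier in order), each term via eq. 3 (alt form)."""
    total = 0.0
    for k in range(1, len(order) + 1):
        cur, prev = marginal(pmf, order[:k]), marginal(pmf, order[:k - 1])
        total -= sum(p * math.log2(p / prev[key[:-1]]) for key, p in cur.items() if p > 0)
    return total

# Hypothetical pmf over three binary variables X_1, X_2, X_3 (positions 0, 1, 2)
outcomes = list(itertools.product([0, 1], repeat=3))
weights = [3, 1, 4, 1, 5, 9, 2, 6]
pmf = {o: w / sum(weights) for o, w in zip(outcomes, weights)}

for order in itertools.permutations(range(3)):
    print(order, round(chain_rule_sum(pmf, order), 6))  # same value for every order
```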

Graphical Summary

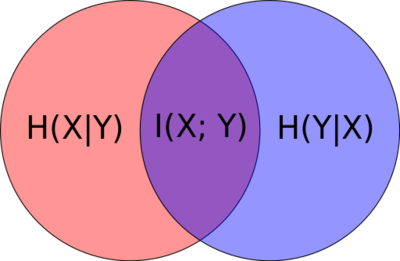

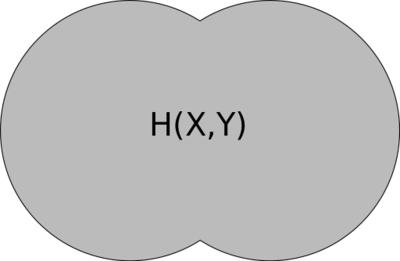

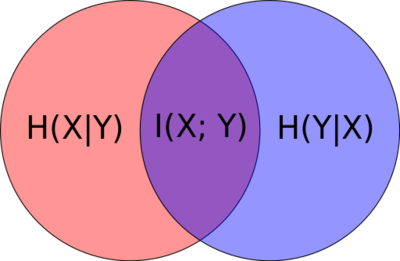

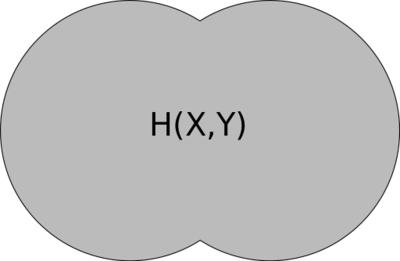

Figure 2 shows how we can visualize conditional entropy and mutual information. The red and blue circles pertain to the individual entropies of the two random variables. Figure 3 shows what joint entropy looks like.

Figure 2: Venn diagram visualizing conditional entropy and mutual information.

Figure 3: Venn diagram visualizing joint entropy.

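From the diagrams, you should be able to recall the entropy relationships on the fly. We can summarize (and derive new relationships) as:

- $H(X,Y) = H(X) + H(Y|X) = H(Y) + H(X|Y)$
- $I(X;Y) = H(X) - H(X|Y) = H(Y) - H(Y|X) = H(X) + H(Y) - H(X,Y)$
- $H(X,Y) = H(X|Y) + I(X;Y) + H(Y|X)$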

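From the diagrams, it's easy to re-translate $H(X|Y)$ (or $H(Y|X)$) as "the uncertainty of $X$ without the $Y$ part" (or "the uncertainty of $Y$ without the $X$ part"), that is, the region of one circle that lies outside the other.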
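The region reading of the two-circle diagram can be checked numerically. The sketch below (hypothetical table, illustrative helper names) computes $H(X|Y)$ and $H(Y|X)$ directly from the alternative form of equation 3, takes the overlap as $I(X;Y) = H(X) + H(Y) - H(X,Y)$, and confirms that the three disjoint regions add up to $H(X,Y)$.

```python
import math

def entropy(probs):
    """Entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def cond_entropy(table):
    """H(B|A) for table[a][b] = P(a, b), via the alternative form of equation 3."""
    return -sum(p * math.log2(p / sum(row)) for row in table for p in row if p > 0)

joint = [[0.10, 0.20, 0.10],
         [0.30, 0.05, 0.25]]                  # hypothetical P(x_i, y_j)
transposed = [list(col) for col in zip(*joint)]

h_x = entropy(sum(row) for row in joint)
h_y = entropy(sum(row) for row in transposed)
h_xy = entropy(p for row in joint for p in row)
h_y_given_x = cond_entropy(joint)             # part of the Y circle outside X
h_x_given_y = cond_entropy(transposed)        # part of the X circle outside Y
mi = h_x + h_y - h_xy                         # overlap of the two circles

# The three disjoint regions add up to the whole diagram, H(X,Y)
print(abs(h_x_given_y + mi + h_y_given_x - h_xy) < 1e-12)  # True
# The overlap is also what remains of H(X) after removing H(X|Y) (equation 7)
print(abs(mi - (h_x - h_x_given_y)) < 1e-12)               # True
```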

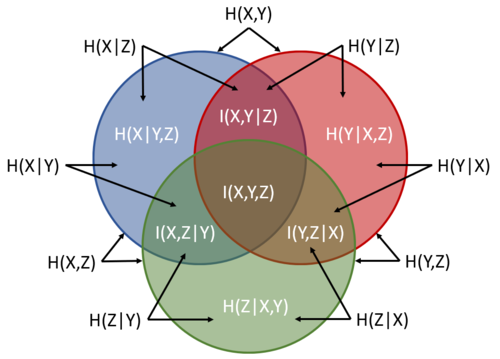

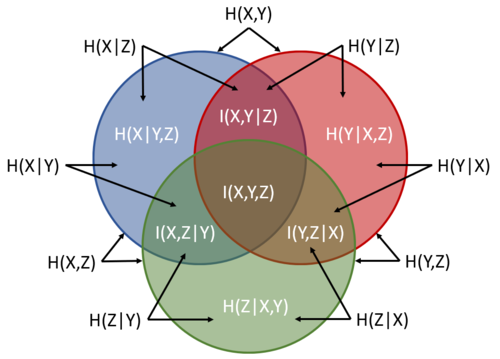

Of course, we can extend this concept to three variables. For example, Figure 4 shows a Venn diagram for three sets, where the entire blue, red, and green circles are the individual entropies of three random variables. The labels are there to guide you.

Figure 4: Entropy Venn diagram from three sets.

We can also derive useful relationships based on Figure 4. Writing the three random variables as $X$, $Y$, and $Z$, some of these include:

- $H(X,Y,Z) = H(X) + H(Y|X) + H(Z|X,Y)$, which is consistent with equation 9. Look at the diagram carefully.
- Conditioning on $Z$ is essentially like taking away all of the $Z$ components from the diagram. For example, $H(X,Y|Z) = H(X|Z) + H(Y|X,Z)$, which is equation 4 with every term conditioned on $Z$. All similar combinations (e.g., conditioning on $X$ or on $Y$ instead) should work the same way.
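For three variables, the sketch below uses a hypothetical pmf over binary $X$, $Y$, $Z$ and a general helper `cond_entropy(pmf, targets, given)` (an illustrative name, not a library function) to check equation 9 for $n = 3$, plus equation 4 with every term conditioned on $Z$.

```python
import itertools
import math

def cond_entropy(pmf, targets, given=()):
    """H(targets | given) in bits for a pmf over outcome tuples, using
    -sum P(targets, given) * log2 P(targets | given)  (alternative form of equation 3)."""
    def marginal(positions):
        out = {}
        for o, p in pmf.items():
            key = tuple(o[i] for i in positions)
            out[key] = out.get(key, 0.0) + p
        return out
    both = marginal(tuple(given) + tuple(targets))   # keys start with the given values
    cond = marginal(tuple(given))
    k = len(given)
    return -sum(p * math.log2(p / cond[key[:k]]) for key, p in both.items() if p > 0)

X, Y, Z = 0, 1, 2   # positions of the three variables inside each outcome tuple
outcomes = list(itertools.product([0, 1], repeat=3))
weights = [2, 7, 1, 8, 2, 8, 1, 8]   # hypothetical, arbitrary positive weights
pmf = {o: w / sum(weights) for o, w in zip(outcomes, weights)}

# Chain rule (equation 9) for three variables
h_xyz = cond_entropy(pmf, (X, Y, Z))
chain = (cond_entropy(pmf, (X,)) + cond_entropy(pmf, (Y,), (X,))
         + cond_entropy(pmf, (Z,), (X, Y)))
print(abs(h_xyz - chain) < 1e-9)   # True

# Equation 4 with every term conditioned on Z: H(X,Y|Z) = H(X|Z) + H(Y|X,Z)
lhs = cond_entropy(pmf, (X, Y), (Z,))
rhs = cond_entropy(pmf, (X,), (Z,)) + cond_entropy(pmf, (Y,), (X, Z))
print(abs(lhs - rhs) < 1e-9)       # True
```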
