Joint Entropies

In this module, we'll discuss several extensions of entropy. Let's begin with joint entropy. Suppose we have a random variable $X$ with elements $\{x_1, x_2, \ldots, x_n\}$ and a random variable $Y$ with elements $\{y_1, y_2, \ldots, y_m\}$. We define the joint entropy of $X$ and $Y$ as:

$$H(X,Y) = -\sum_{i=1}^{n}\sum_{j=1}^{m} p(x_i, y_j)\log_2 p(x_i, y_j) \qquad (1)$$

Take note that $H(X,Y) = H(Y,X)$. This is trivial, since $p(x_i, y_j) = p(y_j, x_i)$ and the order of the two sums in equation 1 does not matter. From our previous discussion about the decision trees of a game, we used joint entropies to calculate the overall entropy.
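As a quick numerical check of equation 1, here is a minimal Python sketch. It assumes the joint distribution is stored as a nested list `p_xy[i][j]` $= p(x_i, y_j)$ (a representation chosen for illustration only), uses base-2 logarithms so the result is in bits, and treats $0 \log_2 0$ as $0$. The table values are hypothetical.

```python
import math


def joint_entropy(p_xy):
    """Joint entropy H(X,Y) in bits, following equation (1).

    p_xy[i][j] holds p(x_i, y_j); zero entries are skipped (0 * log2 0 = 0).
    """
    total = 0.0
    for row in p_xy:
        for p in row:
            if p > 0:
                total -= p * math.log2(p)
    return total


# Hypothetical joint distribution: rows are outcomes of X, columns outcomes of Y.
p_xy = [[0.25, 0.25],
        [0.50, 0.00]]

# Transposing gives the same distribution indexed as p(y_j, x_i).
p_yx = [list(col) for col in zip(*p_xy)]

print(joint_entropy(p_xy))  # 1.5
print(joint_entropy(p_yx))  # 1.5, illustrating H(X,Y) = H(Y,X)
```

Skipping zero entries matches the usual convention that impossible outcomes contribute no uncertainty.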

Conditional Entropies

There are two steps to understanding conditional entropies. The first is the uncertainty of a random variable given a single outcome of another. Suppose we have the same random variables $X$ and $Y$ defined earlier in joint entropies. Let's denote by $p(x_i \mid y_j)$ the conditional probability of $x_i$ when event $y_j$ happened. We define $H(X \mid Y = y_j)$ as the entropy of the random variable $X$ given that $y_j$ happened. Take note that we're only interested in the entropy of $X$ when only the outcome $y_j$ occurred. Mathematically this is:

$$H(X \mid Y = y_j) = -\sum_{i=1}^{n} p(x_i \mid y_j)\log_2 p(x_i \mid y_j) \qquad (2)$$

Just to repeat because it may be confusing: equation 2 only pertains to the uncertainty when a single event happened. We can extend this to the total conditional entropy $H(X \mid Y)$ when any of the outcomes in $Y$ can happen. Since $Y$ ranges over $y_1, \ldots, y_m$ and each $y_j$ occurs with probability $p(y_j)$, we can treat $H(X \mid Y = y_j)$ as a function of $Y$ and average it over the distribution of $Y$. We then define $H(X \mid Y)$, the conditional entropy of $X$ given $Y$, as:

$$H(X \mid Y) = \sum_{j=1}^{m} p(y_j)\, H(X \mid Y = y_j) \qquad (3)$$

Substituting equation 2 into equation 3 and using $p(x_i, y_j) = p(y_j)\,p(x_i \mid y_j)$, we can rewrite this as:

$$H(X \mid Y) = -\sum_{i=1}^{n}\sum_{j=1}^{m} p(x_i, y_j)\log_2 p(x_i \mid y_j) \qquad (3)$$
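To see that both versions of equation 3 agree, here is a small Python sketch. It assumes a hypothetical joint table `p_xy[i][j]` $= p(x_i, y_j)$; the helper names (`cond_entropy_given_outcome`, `cond_entropy_averaged`, `cond_entropy_joint_form`) are illustrative, not from any library.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero probabilities contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


def marginal_y(p_xy):
    """p(y_j) = sum_i p(x_i, y_j) for every column j."""
    return [sum(col) for col in zip(*p_xy)]


def cond_entropy_given_outcome(p_xy, j):
    """H(X | Y = y_j), equation (2): column j divided by p(y_j). Assumes p(y_j) > 0."""
    p_yj = marginal_y(p_xy)[j]
    return entropy(row[j] / p_yj for row in p_xy)


def cond_entropy_averaged(p_xy):
    """H(X|Y) as the p(y_j)-weighted average of H(X | Y = y_j), equation (3)."""
    return sum(p_yj * cond_entropy_given_outcome(p_xy, j)
               for j, p_yj in enumerate(marginal_y(p_xy)) if p_yj > 0)


def cond_entropy_joint_form(p_xy):
    """H(X|Y) = -sum_i sum_j p(x_i, y_j) log2 p(x_i | y_j), the rewritten equation (3)."""
    p_y = marginal_y(p_xy)
    return sum(-p * math.log2(p / p_y[j])
               for row in p_xy
               for j, p in enumerate(row) if p > 0)


# Hypothetical joint distribution: rows are outcomes of X, columns outcomes of Y.
p_xy = [[0.25, 0.25],
        [0.50, 0.00]]

print(cond_entropy_given_outcome(p_xy, 0))  # H(X | Y = y_1)
print(cond_entropy_given_outcome(p_xy, 1))  # 0.0: X is certain once y_2 occurs
print(cond_entropy_averaged(p_xy))
print(cond_entropy_joint_form(p_xy))        # agrees with the line above up to rounding
```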

Note that it is trivial to prove that $H(X \mid Y) = H(X)$ if $X$ and $Y$ are independent. We'll leave it up to you to prove this. A few hints: $p(x_i, y_j) = p(x_i)\,p(y_j)$ and $p(x_i \mid y_j) = p(x_i)$ if $X$ and $Y$ are independent.
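This is not a proof, but a quick numerical sanity check of the claim can help. The sketch below assumes hypothetical marginals and builds the joint table as $p(x_i)\,p(y_j)$, which makes $X$ and $Y$ independent by construction.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero probabilities contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


def cond_entropy(p_xy):
    """H(X|Y) = -sum_i sum_j p(x_i, y_j) log2 p(x_i | y_j)."""
    p_y = [sum(col) for col in zip(*p_xy)]
    return sum(-p * math.log2(p / p_y[j])
               for row in p_xy
               for j, p in enumerate(row) if p > 0)


# Hypothetical marginals; the product table makes X and Y independent.
p_x = [0.5, 0.25, 0.25]
p_y = [0.1, 0.9]
p_xy = [[px * py for py in p_y] for px in p_x]

print(entropy(p_x))        # 1.5
print(cond_entropy(p_xy))  # also 1.5, up to floating-point rounding
```

For contrast, a fully dependent table such as `[[0.5, 0.0], [0.0, 0.5]]` gives $H(X \mid Y) = 0$ while $H(X) = 1$ bit.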

Binary Tree Example

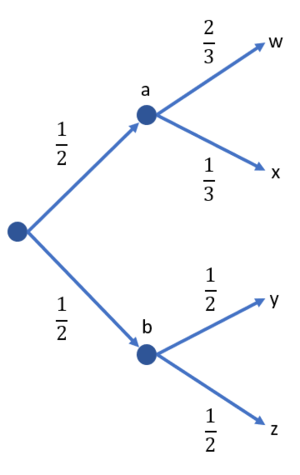

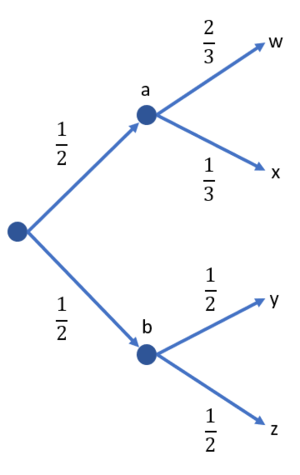

Figure 1: Binary tree example.

Let's apply the first two concepts in a simple binary tree example. Suppose we let $X$ be a random variable with outcomes $x_1$ and $x_2$ and probabilities $p(x_1)$ and $p(x_2)$. Let $Y$ be the random variable whose outcomes are the leaves of the tree, so that each outcome of $Y$ follows one branch of $X$. We are also given the conditional probabilities $p(y_j \mid x_i)$ of each leaf on its branch. Figure 1 shows the binary tree. Calculate:

(a) $H(Y \mid X = x_1)$

(b) $H(Y \mid X = x_2)$

(c) $H(Y \mid X)$

(d) $H(X,Y)$

Solution

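(a) Use equation 2, with the roles of $X$ and $Y$ swapped, on the $x_1$ branch:

$$H(Y \mid X = x_1) = -\sum_{j} p(y_j \mid x_1)\log_2 p(y_j \mid x_1)$$

(b) Same as in (a), use equation 2 on the $x_2$ branch:

$$H(Y \mid X = x_2) = -\sum_{j} p(y_j \mid x_2)\log_2 p(y_j \mid x_2)$$

(c) This can be solved in two ways. The first is to use equation 3:

$$H(Y \mid X) = p(x_1)\,H(Y \mid X = x_1) + p(x_2)\,H(Y \mid X = x_2)$$

Or, we can solve it using the alternative version of equation 3. But we also need to know the joint probabilities, which follow from $p(x_i, y_j) = p(x_i)\,p(y_j \mid x_i)$:

$$H(Y \mid X) = -\sum_{i}\sum_{j} p(x_i, y_j)\log_2 p(y_j \mid x_i)$$

(d) We already listed the joint probabilities in (c). We simply use equation 1 for this:

$$H(X,Y) = -\sum_{i}\sum_{j} p(x_i, y_j)\log_2 p(x_i, y_j)$$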
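Steps (a) through (d) can be sketched in Python as follows. The probabilities below are hypothetical placeholders, not the values in Figure 1; substitute the figure's numbers to reproduce the worked answers. The tree is stored as a dict mapping each outcome of $X$ to its probability and to the conditional probabilities $p(y_j \mid x_i)$ of the leaves on that branch, a layout chosen only for this sketch.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero probabilities contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


# Hypothetical two-level tree (placeholder values, NOT those of Figure 1):
# outcome of X -> (p(x_i), {outcome of Y: p(y_j | x_i)})
tree = {
    "x1": (0.5, {"y1": 0.5, "y2": 0.5}),
    "x2": (0.5, {"y3": 0.25, "y4": 0.75}),
}

# (a) and (b): H(Y | X = x_i) via equation (2), one branch at a time.
h_branch = {x: entropy(cond.values()) for x, (_, cond) in tree.items()}

# (c), first way: equation (3) weights each branch entropy by p(x_i).
h_y_given_x = sum(p_x * h_branch[x] for x, (p_x, _) in tree.items())

# Joint probabilities p(x_i, y_j) = p(x_i) p(y_j | x_i), one per leaf.
joint = {(x, y): p_x * p_cond
         for x, (p_x, cond) in tree.items()
         for y, p_cond in cond.items()}

# (c), second way: -sum p(x_i, y_j) log2 p(y_j | x_i).
h_y_given_x_alt = sum(-p * math.log2(tree[x][1][y])
                      for (x, y), p in joint.items() if p > 0)

# (d): equation (1) applied to the leaf (joint) probabilities.
h_xy = entropy(joint.values())

print(h_branch["x1"], h_branch["x2"])
print(h_y_given_x, h_y_given_x_alt)  # the two ways agree up to rounding
print(h_xy)
```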

Important Note!

This example actually shows us an important observation:

$$H(X,Y) = H(X) + H(Y \mid X) \qquad (4)$$

We can easily observe that this holds true for the binary tree example. This has an important interpretation: the combined uncertainty in $X$ and $Y$ (i.e., $H(X,Y)$) is the sum of the uncertainty that is due to $X$ alone (i.e., $H(X)$) and the uncertainty that is still due to $Y$ once $X$ has been accounted for (i.e., $H(Y \mid X)$).

Since $H(X,Y) = H(Y,X)$, it also follows that:

$$H(X,Y) = H(Y) + H(X \mid Y) \qquad (5)$$

If $X$ and $Y$ are independent, then:

$$H(X,Y) = H(X) + H(Y) \qquad (6)$$

An alternative interpretation of $H(X \mid Y)$ is the information content of $X$ that is NOT contained in $Y$.
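Equations 4, 5, and 6 can be checked numerically on any joint table. The sketch below assumes a hypothetical dependent distribution `p_xy[i][j]` $= p(x_i, y_j)$ and then rebuilds it as a product of its marginals to test the independent case; the helper names are illustrative.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero probabilities contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


def marginals(p_xy):
    """Return (p(x_i), p(y_j)) from a joint table p_xy[i][j] = p(x_i, y_j)."""
    return [sum(row) for row in p_xy], [sum(col) for col in zip(*p_xy)]


def cond_y_given_x(p_xy):
    """H(Y|X) = -sum p(x_i, y_j) log2 p(y_j | x_i)."""
    p_x, _ = marginals(p_xy)
    return sum(-p * math.log2(p / p_x[i])
               for i, row in enumerate(p_xy) for p in row if p > 0)


def cond_x_given_y(p_xy):
    """H(X|Y) = -sum p(x_i, y_j) log2 p(x_i | y_j)."""
    _, p_y = marginals(p_xy)
    return sum(-p * math.log2(p / p_y[j])
               for row in p_xy for j, p in enumerate(row) if p > 0)


def flat(p_xy):
    """All joint probabilities as one flat list."""
    return [p for row in p_xy for p in row]


# Hypothetical dependent joint distribution (entries sum to 1).
p_xy = [[0.30, 0.10, 0.10],
        [0.05, 0.25, 0.20]]
p_x, p_y = marginals(p_xy)
h_xy = entropy(flat(p_xy))

print(h_xy, entropy(p_x) + cond_y_given_x(p_xy))  # equation (4): equal up to rounding
print(h_xy, entropy(p_y) + cond_x_given_y(p_xy))  # equation (5): equal up to rounding

# Equation (6): rebuild the table as p(x_i) p(y_j), so X and Y are independent.
indep = [[a * b for b in p_y] for a in p_x]
print(entropy(flat(indep)), entropy(p_x) + entropy(p_y))  # equal up to rounding
```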

Mutual Information

With the final discussion on the properties of joint and conditional entropies, we also define mutual information as the information content of $X$ contained within $Y$, written as:

$$I(X;Y) = H(X) - H(X \mid Y) \qquad (6)$$

Or:

$$I(X;Y) = H(Y) - H(Y \mid X) \qquad (6)$$

If we plug in the definitions of entropy and conditional entropy, mutual information in expanded form is:

$$I(X;Y) = \sum_{i=1}^{n}\sum_{j=1}^{m} p(x_i, y_j)\log_2 \frac{p(x_i, y_j)}{p(x_i)\,p(y_j)} \qquad (7)$$

It also follows that:

- $I(X;Y) = I(Y;X)$. This is trivial from equation 7, which is symmetric in $X$ and $Y$.
- If $X$ and $Y$ are independent, then $I(X;Y) = 0$. This is also trivial from equation 7, since every ratio inside the logarithm equals 1.
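Here is a minimal Python sketch of equation 7, cross-checked against equation 6 and the two properties above. The joint tables are hypothetical, and `mi_from_entropies` obtains $H(X \mid Y)$ through equation 5 rather than computing it directly.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero probabilities contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


def marginals(p_xy):
    """Return (p(x_i), p(y_j)) from a joint table p_xy[i][j] = p(x_i, y_j)."""
    return [sum(row) for row in p_xy], [sum(col) for col in zip(*p_xy)]


def mutual_information(p_xy):
    """I(X;Y) in bits via the expanded form, equation (7)."""
    p_x, p_y = marginals(p_xy)
    return sum(p * math.log2(p / (p_x[i] * p_y[j]))
               for i, row in enumerate(p_xy)
               for j, p in enumerate(row) if p > 0)


def mi_from_entropies(p_xy):
    """I(X;Y) = H(X) - H(X|Y), equation (6), with H(X|Y) = H(X,Y) - H(Y) from (5)."""
    p_x, p_y = marginals(p_xy)
    h_xy = entropy(p for row in p_xy for p in row)
    return entropy(p_x) - (h_xy - entropy(p_y))


p_xy = [[0.30, 0.10, 0.10],
        [0.05, 0.25, 0.20]]                # hypothetical dependent table
p_yx = [list(col) for col in zip(*p_xy)]   # same distribution indexed as p(y_j, x_i)

print(mutual_information(p_xy), mi_from_entropies(p_xy))  # agree up to rounding
print(mutual_information(p_yx))  # same value: I(X;Y) = I(Y;X)

indep = [[0.5 * 0.2, 0.5 * 0.8],
         [0.5 * 0.2, 0.5 * 0.8]]           # p(x_i) p(y_j): independent by construction
print(mutual_information(indep))  # 0 up to rounding
print(mutual_information([[0.5, 0.0], [0.0, 0.5]]))  # 1.0: Y fully determines X
```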

As a short example, we can use the binary tree problem again. Let's compute $I(X;Y)$. We simply need to use equation 6: $I(X;Y) = H(Y) - H(Y \mid X)$. We already know $H(Y \mid X)$ in bits from part (c). $H(Y)$ is computed by first finding each $p(y_j)$. Recall that:

$$p(y_j) = \sum_{i} p(x_i, y_j)$$

But in this tree, each outcome of $Y$ has the same probability as its joint probability, because each outcome of $Y$ can only occur after one particular outcome of $X$. For example, a leaf on the $x_1$ branch only occurs if $x_1$ occurred first, and a leaf on the $x_2$ branch only occurs if $x_2$ occurred first. So it means that:

$$p(y_j) = p(x_i, y_j) \quad \text{where } x_i \text{ is the parent of } y_j$$

Therefore, $H(Y) = H(X,Y)$, the value computed in (d), and the information is $I(X;Y) = H(X,Y) - H(Y \mid X)$ bits. By equation 4, this is exactly $H(X)$: once $Y$ is known, no uncertainty about $X$ remains.
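The argument above can be sketched in Python for a tree of the same shape. The probabilities are hypothetical placeholders, not the values in Figure 1; what matters is the assumption that every outcome of $Y$ sits on exactly one branch of $X$.

```python
import math
from collections import defaultdict


def entropy(probs):
    """Shannon entropy in bits; zero probabilities contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


# Hypothetical tree (placeholder values, NOT those of Figure 1) in which every
# outcome of Y sits on exactly one branch of X.
tree = {
    "x1": (0.5, {"y1": 0.5, "y2": 0.5}),
    "x2": (0.5, {"y3": 0.25, "y4": 0.75}),
}

p_x = {x: p for x, (p, _) in tree.items()}
joint = {(x, y): p_x[x] * p_cond
         for x, (_, cond) in tree.items()
         for y, p_cond in cond.items()}

# p(y_j) = sum_i p(x_i, y_j); here each sum has exactly one term.
p_y = defaultdict(float)
for (_, y), p in joint.items():
    p_y[y] += p

h_y = entropy(p_y.values())
h_xy = entropy(joint.values())
h_y_given_x = sum(p_x[x] * entropy(cond.values()) for x, (_, cond) in tree.items())
mi = h_y - h_y_given_x  # equation (6)

print(h_y, h_xy)                  # equal, because p(y_j) = p(x_i, y_j) for every leaf
print(mi, entropy(p_x.values()))  # equal up to rounding: I(X;Y) = H(X) for this tree
```

If some outcome of $Y$ could be reached from both branches, $H(Y)$ would be smaller than $H(X,Y)$ and $I(X;Y)$ would drop below $H(X)$.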

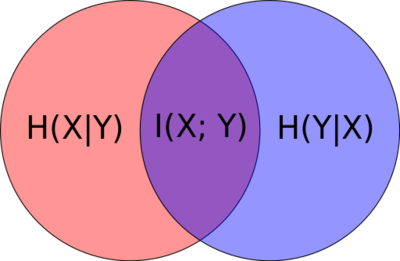

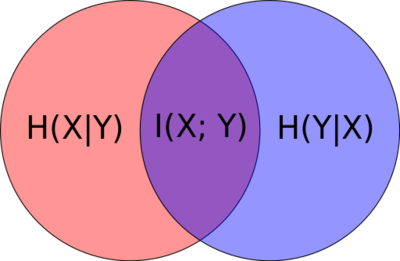

Graphical Interpretation

Venn diagram visualizing joint entropy, conditional entropy, and entropy.