Difference between revisions of "Huffman codes"

Adrian Vidal (talk | contribs) |

Adrian Vidal (talk | contribs) |

||

| Line 14: | Line 14: | ||

<math>H_D(X^n) \le L_n < H_D(X^n) + 1</math>. | <math>H_D(X^n) \le L_n < H_D(X^n) + 1</math>. | ||

| − | Here, <math>L_n</math> accounts for the encoding of <math>n</math> symbols. We can normalize the inequality and obtain a tighter expression: | + | Here, <math>L_n</math> accounts for the encoding of a block of <math>n</math> symbols. We can normalize the inequality and obtain a tighter expression: |

<math>\frac{1}{n}H_D(X^n) \le L_n < \frac{1}{n}[H_D(X^n) + 1]</math>. | <math>\frac{1}{n}H_D(X^n) \le L_n < \frac{1}{n}[H_D(X^n) + 1]</math>. | ||

Revision as of 03:10, 15 March 2021

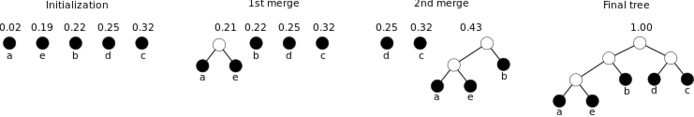

Construction

Optimality

The optimality of the Huffman code hinges on the following two properties of optimal prefix-free codes:

Achieving Shannon's limit

Using Huffman codes to encoder every single symbol from can potentially incur a large penalty since the expected length can be at most 1 D-ary digit away from the entropy bound (using base- logarithm). If we group symbols together at a time, we can achieve the Shannon limit to arbitrary precision.

The idea behind achieving Shannon's compression limit is to use a larger source alphabet. If take symbols at a time and create a Huffman code, we are essentially using the larger input alphabet . Using the bounds for Huffman code, the expected length is given by

.

Here, accounts for the encoding of a block of symbols. We can normalize the inequality and obtain a tighter expression:

.

Since we are encoding independent and identically distributed symbols at a time, the joint entropy . Hence,

,

where the upper bound can be made to approach by letting be sufficiently large.

The proof above is representative of many arguments in information theory that take the blocklength to arbitrarily large values. In that respect, we can look at as an asymptotic compression limit. However, it is also important to keep in mind that directly applying the above construction is very impractical. If we take the English alphabet, with 26 letters, and group them five-at-a-time, then the effective alphabet blows up quickly to above 10 million! Fortunately, more modern coding schemes exist that approach the entropy bound without the heavy exponential overhead. The interested student is encouraged to look up "arithmetic coding."

![{\displaystyle {\frac {1}{n}}H_{D}(X^{n})\leq L_{n}<{\frac {1}{n}}[H_{D}(X^{n})+1]}](https://en.wikipedia.org/api/rest_v1/media/math/render/svg/42d7aae5cf999f3754970145d4f7a02d4809127f)