Difference between revisions of "Uniquely Decodable Codes"

Adrian Vidal (talk | contribs) |

Adrian Vidal (talk | contribs) |

||

| (8 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | + | In this article, we prove an important inequality which characterizes uniquely decodable codes and derive the entropy bound which places a lower bound on the achievable compression with such codes. | |

== The Kraft-McMillan Inequality == | == The Kraft-McMillan Inequality == | ||

| Line 6: | Line 6: | ||

* ''(Necessity.)'' Let <math>\mathcal{C}</math> be a <math>D</math>-ary uniquely decodable code whose codewords have lengths <math>l_1, l_2, ... l_m</math>. Then, <math>\sum_{i}D^{-l_i} \le 1.</math> | * ''(Necessity.)'' Let <math>\mathcal{C}</math> be a <math>D</math>-ary uniquely decodable code whose codewords have lengths <math>l_1, l_2, ... l_m</math>. Then, <math>\sum_{i}D^{-l_i} \le 1.</math> | ||

| − | * ''(Sufficiency.)'' Let <math>l_1, l_2, ..., l_m </math> be a sequence of positive integers such that <math>\sum_{i}D^{-l_i} \le 1.</math>. Then, there exists a uniquely decodable code <math>C</math> whose codewords have lengths <math>l_1, l_2, ..., l_m</math>. | + | * ''(Sufficiency.)'' Let <math>l_1, l_2, ..., l_m </math> be a sequence of positive integers such that <math>\sum_{i}D^{-l_i} \le 1.</math>. Then, there exists a uniquely decodable <math>D</math>-ary code <math>C</math> whose codewords have lengths <math>l_1, l_2, ..., l_m</math>. |

Since all instantaneous/prefix-free codes are uniquely decodable, then the above statements are also true if we replace "uniquely decodable" by "instantaneous." Let us first prove the statements for prefix-free codes, and then we discuss the more general case of uniquely decodable codes. | Since all instantaneous/prefix-free codes are uniquely decodable, then the above statements are also true if we replace "uniquely decodable" by "instantaneous." Let us first prove the statements for prefix-free codes, and then we discuss the more general case of uniquely decodable codes. | ||

| Line 28: | Line 28: | ||

<math>\sum_{i}D^{l_\text{max} - l_i} \le D^{l_\text{max}}</math>. | <math>\sum_{i}D^{l_\text{max} - l_i} \le D^{l_\text{max}}</math>. | ||

| − | Dividing both sides by <math>D^{l_\text{max}}</math> gives us Kraft's inequality. | + | Dividing both sides by <math>D^{l_\text{max}}</math> gives us Kraft's inequality. We have just proved the necessity condition. |

| + | |||

| + | To prove sufficiency, we will construct a prefix-free code using a full <math>D</math>-ary as a visual aid. Let <math>l_1, ..., l_m</math> be a sequence of positive integers which satisfy Kraft's inequality for some <math>D</math>. Without loss of generality, assume that <math>l_1 \le l_2 \le ... \le l_m</math>. | ||

| + | |||

| + | We start with a full D-ary tree with depth <math>l_\text{max} = l_m</math>. At this point, we mark all tree nodes as "available". We select <math>m</math> codewords by proceeding as follows: | ||

| + | * Assign the <math>i</math>th codeword to an available node at level <math>l_i</math>. | ||

| + | * Eliminate all of the descendants of the recently selected codeword, and mark them as "not available." | ||

| + | |||

| + | Since the codeword lengths satisfy Kraft's inequality, we should never run into a case where we "run out" of available nodes to select. | ||

| + | |||

| + | {{Note|Checkpoint: Construct a ternary (<math>D=3</math>) prefix-free code with the following codeword lengths: (1, 2, 2, 2, 2). |reminder}} | ||

=== Proof for Uniquely Decodable Codes === | === Proof for Uniquely Decodable Codes === | ||

| Line 49: | Line 59: | ||

Thus, we can conclude that <math>K\leq 1</math> since if this were not true, <math>K^m</math> would exceed <math>m\ell-m + 1</math> for large <math>m</math>. | Thus, we can conclude that <math>K\leq 1</math> since if this were not true, <math>K^m</math> would exceed <math>m\ell-m + 1</math> for large <math>m</math>. | ||

| + | |||

| + | == The Entropy Bound == | ||

| + | |||

| + | Let <math>\mathcal{C}</math> be a <math>D</math>-ary uniquely decodable code for a random variable <math>X</math>, with source entropy <math>H_D(X)</math>. Then, the expected length of <math>\mathcal{C}</math> is bounded below by <math>H_D(X)</math>, | ||

| + | |||

| + | <math>L = \sum_{i}{p_i l_i} \ge H_D(X),</math> | ||

| + | |||

| + | where <math>H_D(X)</math> is the entropy of <math>X</math> using base-<math>D</math> logarithms. | ||

| + | |||

| + | We can write | ||

| + | |||

| + | <math> L = \sum_{i} {p_i l_i} = \sum_{i} p_i \log_D D^{l_i},</math> | ||

| + | |||

| + | and aim to produce an expression with <math>D^{-l_i}</math> so that we can use the Kraft-McMillan inequality, which is satisfied by any uniquely decodable code. | ||

| + | |||

| + | To show that <math>L \ge H_D(X)</math>, we will show that <math>L - H_D(X) \ge 0 </math>. | ||

| + | |||

| + | <math> L - H_D(X) = \sum_{i} p_i \log_D D^{l_i} + \sum_{i} p_i \log_D p_i = \sum_{i} p_i \log_D (D^{l_i}p_i)</math> | ||

| + | |||

| + | Next, we perform a change of base by observing that <math>log_D t = \frac{\ln t}{\ln D}</math>. | ||

| + | |||

| + | <math> L - H_D(X) = \frac{1}{\ln D} \sum_{i} p_i \ln (D^{l_i}p_i) </math>. | ||

| + | |||

| + | For all positive real numbers <math>x</math>, <math>\ln x \ge 1 - 1/x</math>. This step will enable us to produce the necessary <math>D^{-l_i}</math> terms. | ||

| + | |||

| + | <math> L - H_D(X) \ge \frac{1}{\ln D} \sum_{i} p_i \left(1 - \frac{1}{D^{l_i}p_i}\right) = \frac{1}{\ln D} \left(\sum_{i}p_i - \sum{i}D^{-l_i}\right) \ge \frac{1}{\ln D}(1 - 1) = 0,</math> | ||

| + | |||

| + | where we used both the Kraft inequality and <math>\sum_{i} p_i = 1</math>. | ||

Latest revision as of 05:46, 15 March 2021

In this article, we prove an important inequality which characterizes uniquely decodable codes and derive the entropy bound which places a lower bound on the achievable compression with such codes.

Contents

The Kraft-McMillan Inequality

The Kraft-McMillan inequality provides (1) a necessary condition for unique decodability, and (2) a sufficient condition for the existence of uniquely decodable codes.

- (Necessity.) Let be a -ary uniquely decodable code whose codewords have lengths . Then,

- (Sufficiency.) Let be a sequence of positive integers such that . Then, there exists a uniquely decodable -ary code whose codewords have lengths .

Since all instantaneous/prefix-free codes are uniquely decodable, then the above statements are also true if we replace "uniquely decodable" by "instantaneous." Let us first prove the statements for prefix-free codes, and then we discuss the more general case of uniquely decodable codes.

Proof for Prefix-Free Codes

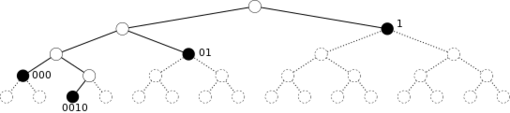

Prefix-free codes can be visualized as a -ary tree, in which all non-leaf nodes have at most children. In the figure below, we have a binary (2-ary) prefix-free code where each "left turn" corresponds to 0 and each "right turn" corresponds to 1.

Since no codeword can be a prefix of another codeword, the following (equivalent) properties must be satisfied:

- no codeword can be a descendant of another codeword, and

- no two codewords can share a descendant in the full binary tree.

The first property is a direct consequence of being prefix-free. Essentially, it tells us that once we have assigned a node in the tree as a codeword, all of its descendants in the full binary tree are denied the possibility of being a codeword. The figure illustrates this property using the dashed circles below solid black nodes. The second property requires a bit more thought: if a tree node has codewords A and B are ancestors, then either A is a descendant of B or B is a descendant of A. The proof of this fact is left as an exercise.

We are now ready to prove the necessity condition for prefix-free codes. Let be the maximum codeword length in . Construct a full -ary tree with depth . At the lowest level, there are leaf nodes. For example, in the figure we have and , which corresponds to leaf nodes.

Each codeword will have some descendants at the lowest level. In the figure, the codeword 01 is at level 2 and it has 4 descendants at the lowest level. In general, a D-ary codeword at level will have descendants at the lowest level, . (If the codeword is itself at the lowest level, then we count that codeword as its own descendant.) Now, since no two codewords can share a descendant, we can obtain the total number of distinct descendants by simply adding them up. The resulting total must be at most equal to number of leaf nodes, which is equal to .

.

Dividing both sides by gives us Kraft's inequality. We have just proved the necessity condition.

To prove sufficiency, we will construct a prefix-free code using a full -ary as a visual aid. Let be a sequence of positive integers which satisfy Kraft's inequality for some . Without loss of generality, assume that .

We start with a full D-ary tree with depth . At this point, we mark all tree nodes as "available". We select codewords by proceeding as follows:

- Assign the th codeword to an available node at level .

- Eliminate all of the descendants of the recently selected codeword, and mark them as "not available."

Since the codeword lengths satisfy Kraft's inequality, we should never run into a case where we "run out" of available nodes to select.

Proof for Uniquely Decodable Codes

The proof of the Kraft-McMillan Inequality for uniquely decodable codes is interesting since it starts with evaluating :

-

(2)

-

Let . Thus, the minimum value of is , when all the codewords are 1 bit long, and the maximum is , when all the codewords have the maximum length. We can then write:

-

(3)

-

Where is the number of combinations of codewords that have a combined length of . Note that the number of distinct codewords of length is . If this code is uniquely decodable, then each sequence can represent one and only one sequence of codewords. Therefore, the number of possible combinations of codewords whose combined length is cannot be greater than , or:

-

(4)

-

We can then write:

-

(5)

-

Thus, we can conclude that since if this were not true, would exceed for large .

The Entropy Bound

Let be a -ary uniquely decodable code for a random variable , with source entropy . Then, the expected length of is bounded below by ,

where is the entropy of using base- logarithms.

We can write

and aim to produce an expression with so that we can use the Kraft-McMillan inequality, which is satisfied by any uniquely decodable code.

To show that , we will show that .

Next, we perform a change of base by observing that .

.

For all positive real numbers , . This step will enable us to produce the necessary terms.

where we used both the Kraft inequality and .