Difference between revisions of "Huffman codes"

Adrian Vidal (talk | contribs) |

Adrian Vidal (talk | contribs) |

||

| (5 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | |||

| − | |||

== Optimality == | == Optimality == | ||

| Line 14: | Line 12: | ||

We only outline the proof for the second statement. First, we must note that there must be at least two codewords which are siblings of each other. Otherwise, we can "trim" the tree by moving up a codeword by one level. Next, we can use the first statement to perform an exchange argument that will give the desired property. | We only outline the proof for the second statement. First, we must note that there must be at least two codewords which are siblings of each other. Otherwise, we can "trim" the tree by moving up a codeword by one level. Next, we can use the first statement to perform an exchange argument that will give the desired property. | ||

| + | |||

| + | == Construction == | ||

| + | The construction of a Huffman code is very much aligned with the two properties discussed in the previous section. We initialize the algorithm by forming one node for each symbol with an associated probability. As an example, consider the following distribution over the alphabet <math>\{a, b, c, d, e\}</math>: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | | <math>x</math> | ||

| + | | <math>a</math> | ||

| + | | <math>b</math> | ||

| + | | <math>c</math> | ||

| + | | <math>d</math> | ||

| + | | <math>e</math> | ||

| + | |- | ||

| + | | <math>p(x)</math> | ||

| + | | 0.02 | ||

| + | | 0.22 | ||

| + | | 0.32 | ||

| + | | 0.25 | ||

| + | | 0.19 | ||

| + | |- | ||

| + | |} | ||

| + | |||

| + | In the previous section, we learned that there exists an optimal prefix-free code where in the two least probable codewords are siblings. Using our example, there is an optimal prefix-free code where <math>a</math> and <math>e</math> are siblings. As we construct our Huffman code, we merge nodes into "supernodes" with the following properties: | ||

| + | * the supernode will represent the union of symbols of its constituent nodes, | ||

| + | * the supernode will have an associated probability equal to the sum of the probabilities of its constituent nodes. | ||

| + | |||

| + | The general construction of a <math>D</math>-ary Huffman code proceeds as follows: | ||

| + | * If there is only one node left, stop. | ||

| + | * Otherwise, merge the <math>D</math> least probable nodes. If there are less than <math>D</math> nodes left, merge all of them. | ||

| + | |||

| + | The figure below summarizes how a Huffman tree is constructed from the source symbols. | ||

| + | |||

| + | [[File:Huffman-example.svg]] | ||

| + | |||

| + | == Upper bound on Expected Length == | ||

| + | We previously saw that the expected length of uniquely decodable codes is bounded below by <math>H_D(X)</math>, which is the entropy of <math>X</math> using base-<math>D</math> logarithms. In this section, we will show that Huffman codes are bounded above by <math>H_D(X) + 1</math>. The proof will proceed as follows: | ||

| + | * We start with a set of codeword lengths and appeal to Kraft's inequality to argue that a prefix-free code exists. | ||

| + | * Then, we show that the expected length of this (possibly suboptimal) code is less than <math>H_D(X) + 1</math>. | ||

| + | |||

| + | Consider a prefix-free code with lengths <math>\{l_i\}</math>, where <math>l_i = \lceil -\log_D p_i\rceil</math>, where <math>\lceil\cdot\rceil</math> is the ceiling function. Then, | ||

| + | |||

| + | <math>-\log_D p_i\le l_i < -\log_D p_i + 1</math> | ||

| + | |||

| + | Exponentiating all sides, we get <math>p_i\ge D^{-l_i} > D^{-1}p_i</math>, which nicely sets us up for Kraft's inequality: | ||

| + | |||

| + | <math>\sum_{i}D^{-l_i} \le \sum_{i}p_i = 1</math>. | ||

| + | |||

| + | Since the codeword lengths satisfy Kraft's inequality, the existence of the prefix-code is guaranteed. Let us now calculate the expected length of this code. | ||

| + | |||

| + | <math>L = \sum_{i} p_i l_i < \sum_{i} p_i (-\log_D p_i + 1) = \sum_{i} p_i - \sum_{i} p_i \log_D p_i = 1 + H_D(X).</math> | ||

| + | |||

| + | The reader is now enjoined to complete the proof by answering the questions below. | ||

| + | |||

| + | {{Note|Checkpoint: How does the conclusion follow from the above statement? What is the relation between a Huffman encoder and the prefix-free code constructed above?|reminder}} | ||

| + | |||

| + | It turns out that a Huffman code will only attain the entropy bound if and only if the random variable <math>X</math> follows a <math>D</math>-adic distribution, i.e., every probability <math>p_i</math> is a perfect power of <math>D</math>. Otherwise, the Huffman code will fail to "fully" compress information without redundancy. | ||

== Achieving Shannon's limit == | == Achieving Shannon's limit == | ||

Latest revision as of 06:13, 15 March 2021

Optimality

The optimality of the Huffman code hinges upon the following two properties of optimal prefix-free codes:

- Shorter codewords are assigned to more probable symbols.

- There exists an optimal code in which the codewords assigned to the two smallest probabilities are siblings.

Let us prove the first statement by contradiction. Let us suppose that instead for some symbols such that . If we calculate the expected length due to all symbols, and will contribute the term . We proceed by showing the term obtained by swapping the assignment, , is strictly lower.

since and . This contradicts the supposed optimality of the code, and so it must be the case that whenever .

We only outline the proof for the second statement. First, we must note that there must be at least two codewords which are siblings of each other. Otherwise, we can "trim" the tree by moving up a codeword by one level. Next, we can use the first statement to perform an exchange argument that will give the desired property.

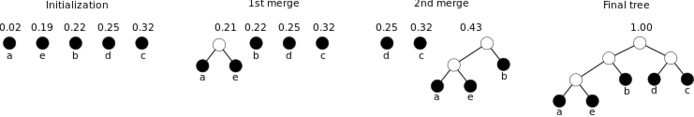

Construction

The construction of a Huffman code is very much aligned with the two properties discussed in the previous section. We initialize the algorithm by forming one node for each symbol with an associated probability. As an example, consider the following distribution over the alphabet :

| 0.02 | 0.22 | 0.32 | 0.25 | 0.19 |

In the previous section, we learned that there exists an optimal prefix-free code where in the two least probable codewords are siblings. Using our example, there is an optimal prefix-free code where and are siblings. As we construct our Huffman code, we merge nodes into "supernodes" with the following properties:

- the supernode will represent the union of symbols of its constituent nodes,

- the supernode will have an associated probability equal to the sum of the probabilities of its constituent nodes.

The general construction of a -ary Huffman code proceeds as follows:

- If there is only one node left, stop.

- Otherwise, merge the least probable nodes. If there are less than nodes left, merge all of them.

The figure below summarizes how a Huffman tree is constructed from the source symbols.

Upper bound on Expected Length

We previously saw that the expected length of uniquely decodable codes is bounded below by , which is the entropy of using base- logarithms. In this section, we will show that Huffman codes are bounded above by . The proof will proceed as follows:

- We start with a set of codeword lengths and appeal to Kraft's inequality to argue that a prefix-free code exists.

- Then, we show that the expected length of this (possibly suboptimal) code is less than .

Consider a prefix-free code with lengths , where , where is the ceiling function. Then,

Exponentiating all sides, we get , which nicely sets us up for Kraft's inequality:

.

Since the codeword lengths satisfy Kraft's inequality, the existence of the prefix-code is guaranteed. Let us now calculate the expected length of this code.

The reader is now enjoined to complete the proof by answering the questions below.

It turns out that a Huffman code will only attain the entropy bound if and only if the random variable follows a -adic distribution, i.e., every probability is a perfect power of . Otherwise, the Huffman code will fail to "fully" compress information without redundancy.

Achieving Shannon's limit

Using Huffman codes to encoder every single symbol from can potentially incur a large penalty since the expected length can be at most 1 D-ary digit away from the entropy bound (using base- logarithm). If we group symbols together at a time, we can achieve the Shannon limit to arbitrary precision.

The idea behind achieving Shannon's compression limit is to use a larger source alphabet. If take symbols at a time and create a Huffman code, we are essentially using the larger input alphabet . Using the bounds for Huffman code, the expected length is given by

.

Here, accounts for the encoding of a block of symbols. We can normalize the inequality and obtain a tighter expression:

.

Since we are encoding independent and identically distributed symbols at a time, the joint entropy . Hence,

,

where the upper bound can be made to approach by letting be sufficiently large.

The proof above is representative of many arguments in information theory that take the blocklength to arbitrarily large values. In that respect, we can look at as an asymptotic compression limit. However, it is also important to keep in mind that directly applying the above construction is very impractical. If we take the English alphabet, with 26 letters, and group them five-at-a-time, then the effective alphabet blows up quickly to above 10 million! Fortunately, more modern coding schemes exist that approach the entropy bound without the heavy exponential overhead. The interested student is encouraged to look up "arithmetic coding."

![{\displaystyle {\frac {1}{n}}H_{D}(X^{n})\leq L_{n}<{\frac {1}{n}}[H_{D}(X^{n})+1]}](https://en.wikipedia.org/api/rest_v1/media/math/render/svg/42d7aae5cf999f3754970145d4f7a02d4809127f)